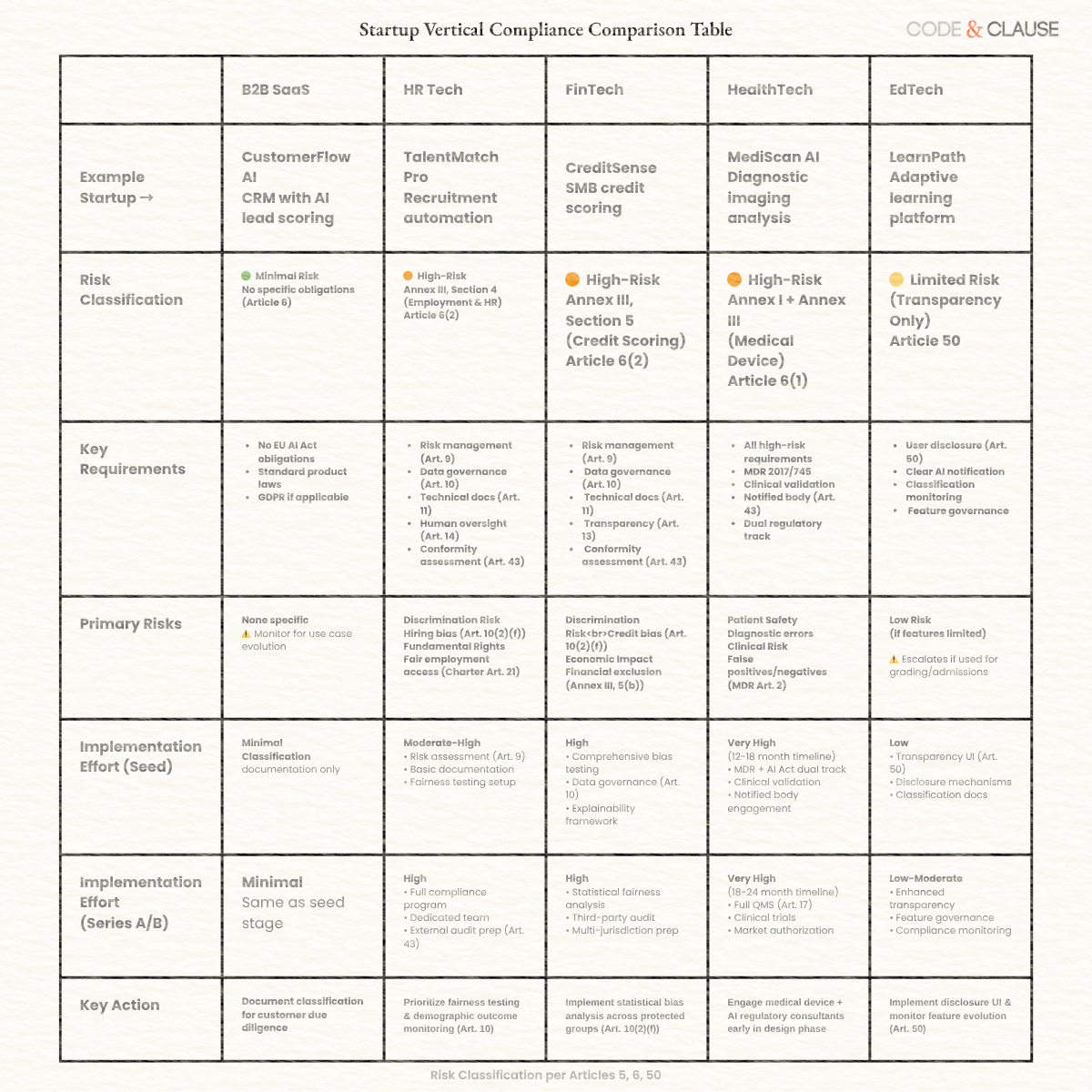

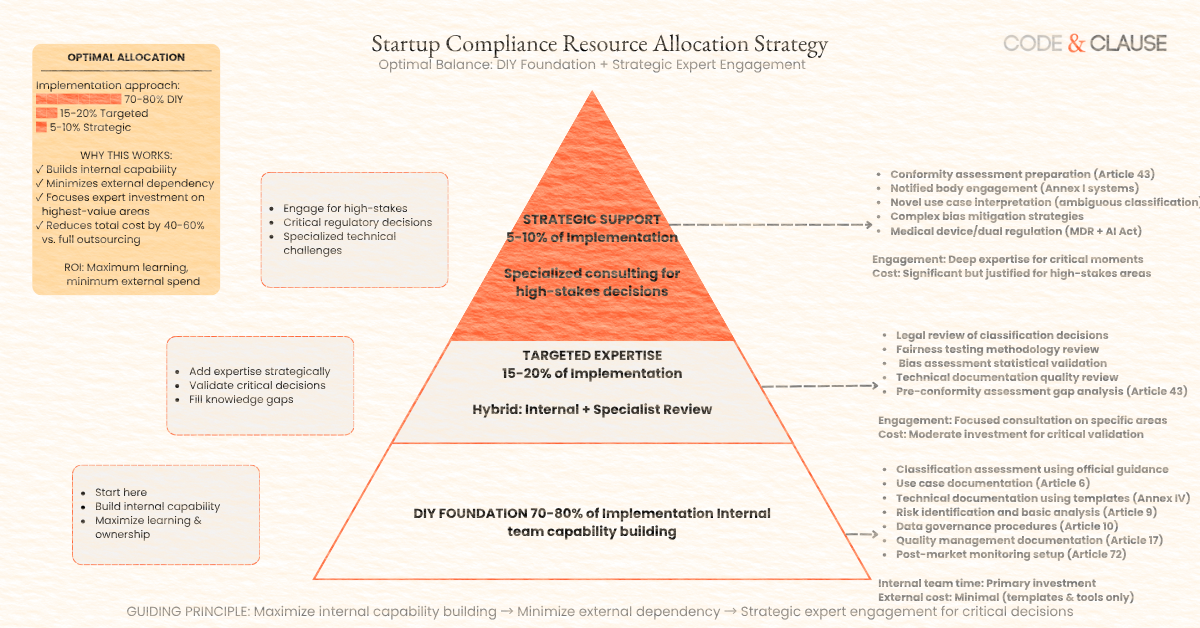

Is your AI startup actually high-risk under the EU AI Act, or are you over-investing in unnecessary compliance based on misclassification? Most B2B SaaS and EdTech startups fall into minimal or limited risk categories, while HR tech and FinTech face comprehensive requirements—but knowing the difference requires systematic evaluation using Annex I and III frameworks, not guesswork. This technical guide provides the classification decision tree, implementation roadmaps (4-6 months for high-risk systems), vertical comparisons with effort estimates, and the resource allocation pyramid showing how to achieve compliance with 70-80% internal work and strategic expert engagement for critical validation only.


Introduction: The Startup Regulatory Reality

The European Union represents a market of 450 million consumers with combined GDP exceeding €18 trillion¹—an opportunity no ambitious AI startup can afford to ignore. However, AI compliance for startups under the EU AI Act (Regulation (EU) 2024/1689) introduces comprehensive requirements that many founders perceive as insurmountable barriers to market entry².

This guide provides startup founders and technical teams with a comprehensive framework for navigating EU AI Act compliance efficiently. Drawing on specific regulatory requirements, conformity assessment procedures, and practical implementation strategies, this analysis equips startups to enter European markets while maintaining lean operations and limited budgets.

The following sections address classification methodology, common compliance mistakes, detailed implementation roadmaps segmented by startup maturity, vertical-specific scenarios, and resource optimization strategies grounded in actual regulatory text rather than generalized compliance advice.

What is AI Compliance for Startups?

AI compliance for startups refers to meeting EU AI Act regulatory requirements based on system risk classification. Most startup AI systems (approximately 80%) fall into minimal or limited risk categories requiring, at most, basic transparency obligations. High-risk systems in employment, credit, or healthcare domains must implement comprehensive risk management, technical documentation, and conformity assessment procedures before EU market entry. The 70-80% DIY implementation approach enables resource-constrained startups to achieve compliance through internal capability building with strategic expert validation.

Why EU Market Access Demands Strategic Compliance

The Market Opportunity

European artificial intelligence markets present substantial growth opportunities for startups across multiple sectors. The global AI governance market is projected to grow from $227.6 million in 2024 to $1.418 billion by 2030, representing a 35.7% compound annual growth rate⁴. While this represents worldwide growth, the European Union accounts for a significant portion given its regulatory leadership position and early AI Act implementation. European enterprises and public sector organizations are accelerating AI governance investments to meet compliance requirements, creating substantial market opportunities for compliant AI solutions.

For startups, European expansion offers several strategic advantages beyond market size. European enterprises are willing to adopt AI solutions that can show regulatory compliance, which differentiates compliant startups from non-compliant competitors. Additionally, EU AI Act compliance increasingly serves as a prerequisite for enterprise procurement processes, venture capital due diligence, and strategic partnership discussions.

Enforcement Timeline and Regulatory Reality

The EU AI Act establishes a phased implementation timeline with different provisions becoming applicable at specific dates⁵:

- February 2, 2025: Prohibited AI practices (Article 5) become enforceable

- August 2, 2025: General-purpose AI model obligations (Chapter V) apply

- August 2, 2026: High-risk AI system requirements (Chapter III) become fully applicable

- August 2, 2027: Requirements for AI systems as safety components apply

For startups planning European market entry, the August 2026 deadline for high-risk systems represents the critical compliance milestone. Systems classified as high-risk must complete conformity assessment procedures before placement on the EU market⁶. With approximately ten months remaining until this deadline as of October 2025, startups must begin compliance activities immediately to allow sufficient implementation time. Well-planned compliance programs typically require 4-6 months for comprehensive implementation, making current timing critical for market access by the enforcement date.
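To make the timeline concrete, the following minimal Python sketch computes the time remaining before a milestone and the latest start date for a compliance program of a given length. The `MILESTONES` keys and both helper names are illustrative assumptions, not part of any official tooling, and the month arithmetic is a simple calendar approximation.

```python
from datetime import date

# Application dates as listed in the timeline above; keys are illustrative labels.
MILESTONES = {
    "prohibited_practices": date(2025, 2, 2),
    "gpai_obligations": date(2025, 8, 2),
    "high_risk_requirements": date(2026, 8, 2),
    "safety_component_requirements": date(2027, 8, 2),
}


def days_until(milestone: str, today: date) -> int:
    """Days from `today` to the milestone (negative once it already applies)."""
    return (MILESTONES[milestone] - today).days


def latest_start(milestone: str, implementation_months: int) -> date:
    """Latest start date for a program lasting the given number of whole months."""
    deadline = MILESTONES[milestone]
    # Count months since year 0, step back, then convert back to year and month.
    total = deadline.year * 12 + (deadline.month - 1) - implementation_months
    return date(total // 12, total % 12 + 1, deadline.day)
```

For a six-month program, `latest_start("high_risk_requirements", 6)` returns 2 February 2026, which leaves no slack for delays.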

Penalty Framework and Risk Assessment

Article 99 of the EU AI Act establishes administrative fines up to €35 million or 7% of total worldwide annual turnover (whichever is higher) for the most serious violations (prohibited AI practices), up to €15 million or 3% of turnover (whichever is higher) for high-risk system non-compliance, and up to €7.5 million or 1.5% of turnover (whichever is higher) for other violations⁷.
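The ceiling arithmetic can be sketched in a few lines of Python. The tier names and the `fine_ceiling` function are hypothetical, and the `sme` flag reflects an assumption drawn from Article 99(6), which applies the lower of the two figures to SMEs including start-ups; confirm the applicable rule with counsel before relying on it.

```python
# (fixed cap in EUR, share of worldwide annual turnover in per mille),
# taken from the Article 99 figures quoted above. Tier names are illustrative.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 70),
    "high_risk_non_compliance": (15_000_000, 30),
    "other_violation": (7_500_000, 15),
}


def fine_ceiling(tier: str, annual_turnover_eur: int, sme: bool = False) -> float:
    """Return the maximum possible fine for a tier, not the fine actually imposed."""
    fixed_cap, per_mille = FINE_TIERS[tier]
    turnover_share = annual_turnover_eur * per_mille / 1000
    if sme:
        # Assumption: Article 99(6) caps SME fines at the lower of the two figures.
        return min(fixed_cap, turnover_share)
    return max(fixed_cap, turnover_share)
```

For a company with €1 billion turnover, `fine_ceiling("prohibited_practice", 1_000_000_000)` gives €70 million, because 7% of turnover exceeds the €35 million fixed amount.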

Startups frequently discount these penalty amounts based on revenue size, reasoning that percentage-based fines create minimal exposure for early-stage companies. This analysis overlooks several critical factors. First, the fixed amounts and turnover percentages are ceilings rather than fixed penalties: for most companies the applicable ceiling is the higher of the two figures, while Article 99(6) applies the lower of the two to SMEs, including start-ups, so exposure is reduced but not removed. Second, non-compliance creates market access barriers independent of fines. High-risk AI systems lacking required conformity assessment cannot be legally placed on EU markets⁸. Third, non-compliance creates due diligence obstacles during funding rounds, acquisition discussions, and partnership negotiations.

Compliance as Competitive Advantage

Early compliance implementation creates several strategic advantages for startups. First-mover compliant startups can capture enterprise customers whose procurement requirements mandate AI Act conformity, establishing market position before competitors achieve compliance. Compliance documentation supports sales cycles by addressing regulatory objections that might otherwise delay or prevent customer adoption.

Additionally, systematic compliance implementation builds operational capabilities that scale with business growth. Risk management systems, technical documentation frameworks, and data governance procedures required for AI Act compliance provide foundational infrastructure supporting business operations beyond regulatory requirements. Startups implementing these systems early avoid costly remediation cycles as they scale.

Understanding AI System Classification for Startups

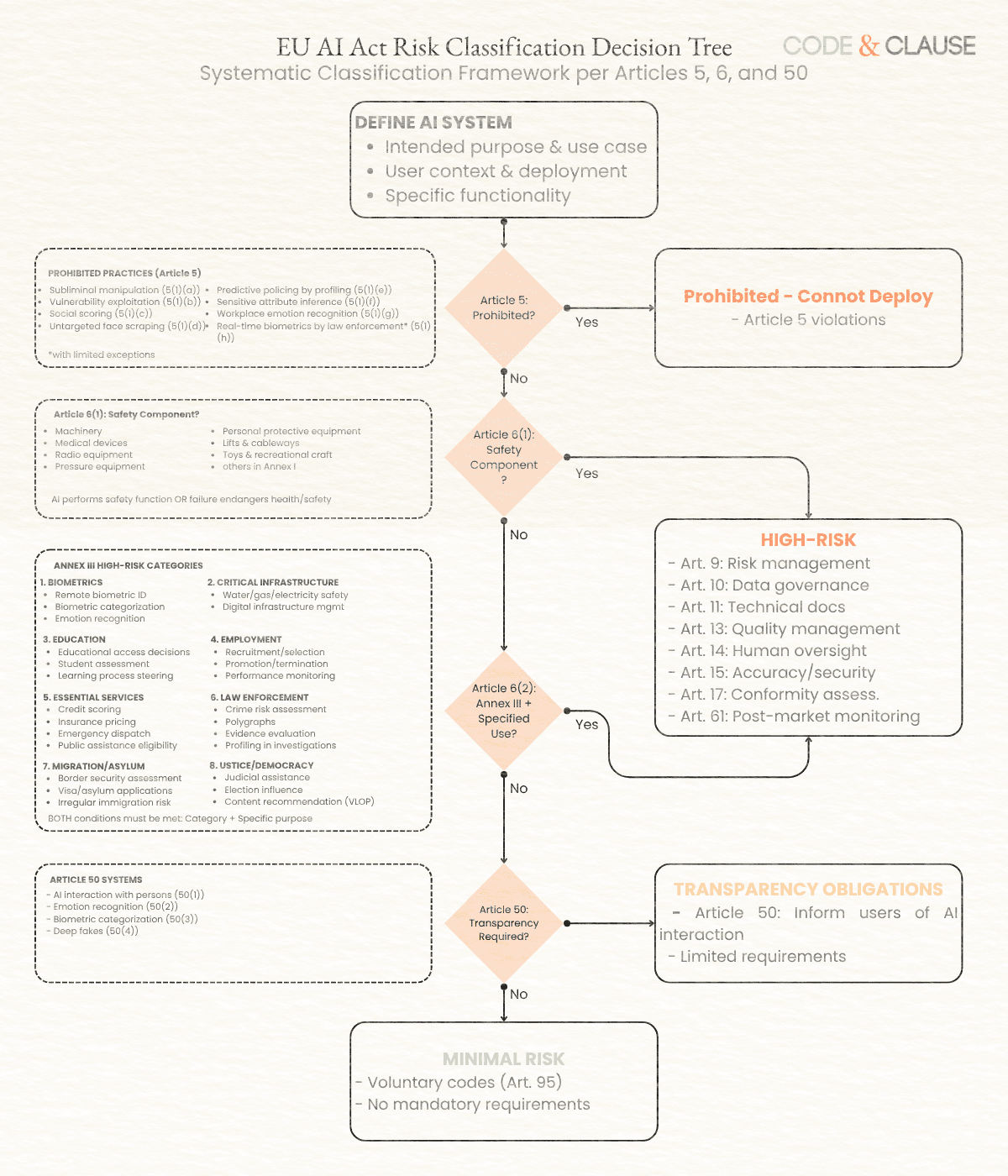

Accurate classification represents the foundational step in EU AI Act compliance. Classification determines applicable requirements, conformity assessment procedures, and resource investment needed for market entry. Misclassification creates either unnecessary compliance burden (over-classification) or regulatory exposure and market access barriers (under-classification).

The Four-Tier Risk Framework

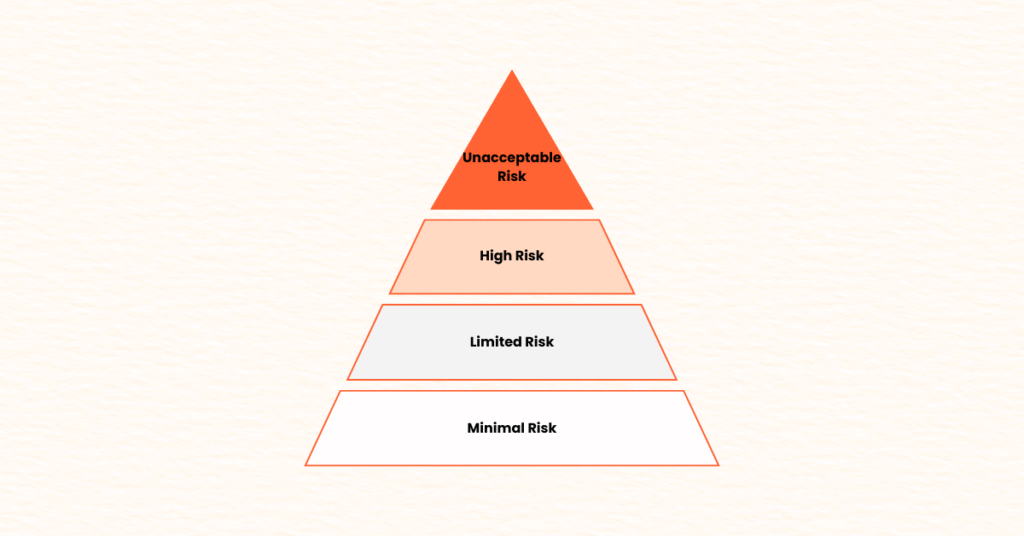

The EU AI Act establishes four risk categories, each with distinct regulatory obligations⁹:

Unacceptable Risk (Prohibited): AI systems posing unacceptable risks to fundamental rights are banned under Article 5. These include social scoring systems, manipulation of vulnerable persons, real-time remote biometric identification in public spaces by law enforcement (with limited exceptions), and biometric categorization inferring sensitive attributes¹⁰. Startups must ensure their systems do not fall within these prohibited categories regardless of other compliance measures.

High Risk: AI systems classified as high-risk face comprehensive regulatory requirements including risk management systems (Article 9), data governance (Article 10), technical documentation (Article 11), record-keeping (Article 12), transparency (Article 13), human oversight (Article 14), accuracy and robustness (Article 15), quality management (Article 17), and conformity assessment procedures (Article 43)¹¹. High-risk classification applies through two pathways: systems serving as safety components in regulated products (Annex I) or systems falling within specified use cases (Annex III).

Limited Risk: AI systems with limited risk face only transparency obligations under Article 50. This includes AI systems interacting with natural persons (chatbots), emotion recognition systems, biometric categorization systems, and systems generating synthetic content¹². Transparency requirements mandate disclosure that users are interacting with AI systems, enabling informed decision-making about continued interaction.

Minimal or No Risk: AI systems not falling into the above categories face no specific EU AI Act obligations. This includes the majority of AI systems deployed across various applications. Organizations may voluntarily adopt codes of conduct (Article 95) but face no mandatory requirements¹³.
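The four tiers and the articles named above can be captured in a small lookup, which is handy when tagging products in an internal inventory. This is a minimal sketch: the `RiskTier` enum and `mandatory_articles` helper are illustrative names, and the article lists simply mirror the ones cited in this section rather than the full regulation.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Article numbers as cited in the tier descriptions above.
_ARTICLES = {
    RiskTier.UNACCEPTABLE: [5],  # prohibited: cannot be placed on the market
    RiskTier.HIGH: [9, 10, 11, 12, 13, 14, 15, 17, 43],
    RiskTier.LIMITED: [50],  # transparency obligations only
    RiskTier.MINIMAL: [],  # voluntary codes of conduct (Article 95) are optional
}


def mandatory_articles(tier: RiskTier) -> list:
    """Return a copy of the mandatory article numbers for a tier."""
    return list(_ARTICLES[tier])
```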

Classification Pathway 1: Annex I Safety Components

Annex I establishes that AI systems serving as safety components in products covered by specific Union harmonization legislation are automatically high-risk¹⁴. This pathway primarily affects startups developing AI for regulated sectors including medical devices, automotive systems, aviation equipment, machinery, and certain electrical equipment.

Article 6(1) sets two cumulative conditions. First, the AI system must be intended for use as a safety component of a product covered by the Union harmonization legislation listed in Annex I (meaning its failure or malfunction compromises product safety), or must itself be such a product. Second, that product must be required to undergo third-party conformity assessment under the applicable Union harmonization legislation.

Most software-focused startups do not fall under Annex I classification unless explicitly developing AI for integration into regulated hardware products. However, startups in health technology, automotive technology, or industrial automation should carefully evaluate this pathway.

Classification Pathway 2: Annex III High-Risk Use Cases

Annex III defines eight categories of high-risk AI systems based on use case and application area¹⁵. Understanding these categories is essential for startup classification.

Biometrics (Annex III, Section 1)

Remote biometric identification systems, biometric categorization according to sensitive attributes (race, political opinions, sexual orientation, etc.), and emotion recognition systems. Note that emotion recognition in workplace and education contexts goes beyond high-risk: Article 5 prohibits it outside medical or safety uses. Startups developing facial recognition, voice authentication, or emotion analysis capabilities should carefully assess this category.

Critical Infrastructure (Annex III, Section 2)

AI systems managing or operating critical digital infrastructure, road traffic, or water, gas, heating, and electricity supply. Infrastructure-focused startups fall within this category when systems could cause serious harm through service disruption.

Education and Vocational Training (Annex III, Section 3)

Systems determining access to educational institutions, assessing learning outcomes, monitoring exam integrity (proctoring), or detecting plagiarism. EdTech startups using AI for admissions, grading, or assessment typically classify as high-risk under this provision.

Employment and Workers Management (Annex III, Section 4)

AI systems for recruitment, selection, task allocation, performance monitoring, and termination decisions. HR technology startups using AI in any employment lifecycle stage generally fall within high-risk classification.

Essential Private and Public Services (Annex III, Section 5)

Systems evaluating creditworthiness, insurance pricing and risk assessment, emergency response prioritization, and public benefits eligibility. FinTech startups providing credit scoring or insurance products typically face high-risk classification.

Law Enforcement (Annex III, Section 6)

Risk assessment for criminal offense probability, polygraph systems, and evidence reliability evaluation. This category primarily affects government contractors rather than commercial startups.

Migration, Asylum and Border Control (Annex III, Section 7)

Systems assessing security risks from persons entering territory, examining asylum applications, or detecting fraudulent documents. Similar to law enforcement, this primarily affects specialized government contractors.

Justice and Democratic Processes (Annex III, Section 8)

AI assisting judicial decision-making or legal research, and systems influencing electoral outcomes. This category affects legal technology startups and platforms involved in political communication.

The Critical 80/20 Rule for Startups

Analysis of startup AI applications reveals that approximately 80% of startup AI systems fall into minimal risk or limited risk categories rather than high-risk classification. This distribution reflects the reality that most B2B SaaS applications, consumer applications, and productivity tools do not involve the specific high-risk use cases defined in Annex III.

💡 Key Insight: Most startups should approach classification assuming minimal or limited risk until evidence indicates otherwise, rather than defaulting to high-risk assumptions that drive unnecessary compliance investment.

Common startup AI applications that typically avoid high-risk classification include:

- Customer relationship management with AI-powered recommendations

- Marketing automation and content generation

- Productivity tools with AI assistance

- Data analytics and business intelligence

- Internal process automation

- Customer service chatbots (limited risk with transparency requirements only)

- Development tools and code assistance

Understanding this distribution enables startups to approach classification with appropriate perspective. Rather than assuming high-risk classification by default, startups should systematically evaluate actual use cases against specific Annex III criteria.

Classification Methodology for Startups

Startups should implement systematic classification using the following framework:

Step 1: Document Intended Use Cases

Comprehensively document all intended use cases for the AI system, including primary use cases, secondary applications, and reasonably foreseeable misuse scenarios. Article 6 classification depends on intended purpose as determined by the provider¹⁶. Documentation should specify:

- Decisions made by or with AI system assistance

- Level of human involvement in decision-making

- Population affected by system outputs

- Potential impacts on individuals’ rights and interests

- Integration context with other systems or processes

Step 2: Evaluate Annex III Applicability

Systematically evaluate each Annex III category against documented use cases. For each category, assess whether the system:

- Operates within the specified domain (employment, education, credit, etc.)

- Performs the specified function (assessment, allocation, monitoring, etc.)

- Affects natural persons’ access to opportunities or services

- Could reasonably cause significant harm if malfunction occurs

This evaluation should document reasoning for applicability or non-applicability of each Annex III category, creating an audit trail supporting classification decisions.
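One lightweight way to keep that audit trail is a record per Annex III category, with a check that no category has been left without documented reasoning. The sketch below is an assumption about how such a record could look; `CategoryFinding` and both helper functions are hypothetical names.

```python
from dataclasses import dataclass

# The eight Annex III categories as listed in this guide.
ANNEX_III_CATEGORIES = {
    1: "Biometrics",
    2: "Critical infrastructure",
    3: "Education and vocational training",
    4: "Employment and workers management",
    5: "Essential private and public services",
    6: "Law enforcement",
    7: "Migration, asylum and border control",
    8: "Justice and democratic processes",
}


@dataclass
class CategoryFinding:
    section: int      # Annex III section number (1-8)
    applicable: bool  # does the documented use case fall in this category?
    reasoning: str    # why it does or does not apply


def undocumented_sections(findings):
    """Sections that still lack a finding with non-empty reasoning."""
    documented = {f.section for f in findings if f.reasoning.strip()}
    return sorted(set(ANNEX_III_CATEGORIES) - documented)


def applicable_sections(findings):
    """Sections the team has judged applicable."""
    return sorted(f.section for f in findings if f.applicable)
```

An evaluation is complete only when `undocumented_sections` returns an empty list, including for categories judged not applicable.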

Step 3: Assess Material Influence

Article 6(3) provides a derogation: an AI system in an Annex III category is not considered high-risk where it does not pose a significant risk of harm, including by not materially influencing the outcome of decision-making¹⁷. Systems performing purely auxiliary functions or narrow procedural tasks may therefore escape high-risk classification, although systems that profile natural persons always remain high-risk.

The “material influence” assessment requires evaluating whether decision-makers typically rely on AI outputs or exercise independent judgment. If the AI system provides information that decision-makers routinely evaluate critically and frequently disregard, material influence may be absent. However, if decision-makers typically follow AI recommendations without substantial independent analysis, material influence exists.
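Steps 1 through 3 can be summarized as a simple decision function. This is a deliberately coarse sketch under stated assumptions: the `classify` function and its parameters are hypothetical, each input is a judgment the team has already documented, and the finer conditions of Article 6(3) are not modeled, so the result is a starting point for legal review rather than a determination.

```python
from typing import Sequence


def classify(
    prohibited_practice: bool,
    annex_i_safety_component: bool,
    annex_iii_sections: Sequence[int],
    materially_influences_outcomes: bool,
    transparency_trigger: bool,
) -> str:
    """Return the most demanding tier suggested by the documented answers."""
    if prohibited_practice:
        return "unacceptable"
    if annex_i_safety_component:
        return "high"
    # Annex III applies only when the output materially influences outcomes.
    if annex_iii_sections and materially_influences_outcomes:
        return "high"
    # Chatbots, synthetic content, and similar cases carry transparency duties.
    if transparency_trigger:
        return "limited"
    return "minimal"
```

A high-risk system may also carry transparency duties; the function reports only the highest tier.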

Step 4: Cross-Functional Validation

Classification decisions should undergo cross-functional review involving:

- Technical teams understanding system capabilities and limitations

- Legal counsel interpreting regulatory requirements

- Business stakeholders defining use cases and applications

- Product managers understanding customer deployment contexts

This validation process identifies classification blind spots and ensures comprehensive evaluation.

Step 5: Documentation and Regular Review

Maintain comprehensive classification documentation including use case descriptions, Annex III evaluation, material influence assessment, and review approvals. Establish quarterly classification reviews to assess whether system evolution or new use cases affect classification status.
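The quarterly cadence can be enforced with a small date helper. This is an illustrative sketch; `next_quarterly_review` and `review_is_due` are hypothetical names, and triggering a review on any use case change is an assumption layered on top of the quarterly schedule described above.

```python
import calendar
from datetime import date


def next_quarterly_review(last_review: date) -> date:
    """Date three calendar months after the last review, clamped to month end."""
    month_index = last_review.month - 1 + 3
    year = last_review.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(last_review.day, last_day))


def review_is_due(last_review: date, today: date, use_cases_changed: bool = False) -> bool:
    """A review is due on schedule, or immediately when use cases have changed."""
    return use_cases_changed or today >= next_quarterly_review(last_review)
```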

Common Classification Errors Affecting Startups

Error 1: Assuming B2B Tools Avoid High-Risk Classification

Startups frequently assume that B2B applications automatically fall outside high-risk categories. This assumption is incorrect. HR technology sold to enterprises remains high-risk under Annex III Section 4 regardless of B2B sales model¹⁸. Similarly, B2B credit assessment tools classify as high-risk under Annex III Section 5. Classification depends on use case and impact, not customer type.

Error 2: Confusing System Simplicity with Risk Level

Technical complexity does not determine risk classification. Simple rule-based systems can be high-risk if they operate in Annex III use cases, while sophisticated neural networks may be minimal risk if applied outside high-risk domains¹⁹. Classification focuses on application context and potential harm rather than technical sophistication.

Error 3: Overlooking Material Influence Through Human Oversight

Implementing human review does not automatically eliminate high-risk classification. If human reviewers typically accept AI recommendations without thorough independent analysis, material influence persists²⁰. Meaningful human oversight requires trained personnel with authority and information to exercise genuine judgment, not merely procedural review steps.

Error 4: Ignoring Use Case Evolution

Startups often classify systems based on initial narrow use cases without considering feature expansion or customer deployments in adjacent domains. A productivity tool initially avoiding high-risk classification may migrate toward high-risk status as features expand into employee monitoring or performance evaluation. Regular classification review prevents this drift.

The Startup Compliance Trap: Five Critical Mistakes

Startups approaching EU AI Act compliance frequently make predictable mistakes that either waste resources on unnecessary activities or create genuine regulatory exposure. Understanding these patterns enables more efficient compliance approaches.

Mistake 1: Assuming Startup Size Exempts Compliance

The EU AI Act contains no startup exemption, no revenue threshold, and no employee count exclusion²¹. High-risk AI systems require full compliance regardless of provider size. This regulatory design reflects the principle that AI system risk to individuals depends on system characteristics and use cases rather than provider size.

The regulation does provide support mechanisms for small-scale providers and startups through national competent authorities offering guidance and technical support. However, these support mechanisms facilitate compliance rather than exempting organizations from requirements.

Startups delaying compliance based on size assumptions face compressed implementation timelines as European expansion approaches, creating rushed implementations that miss critical requirements or incur unnecessary costs through expedited consulting engagements.

Mistake 2: Hiring Enterprise Consultants Prematurely

Large consulting firms typically charge substantial fees for compliance advisory services optimized for enterprise clients with complex organizational structures, extensive legacy systems, and risk-averse compliance cultures. These approaches often prove inefficient for startups with simpler technical architectures, limited organizational complexity, and resource constraints.

Startups engaging Big Four consultants before internal assessment and planning frequently encounter proposals for comprehensive programs including extensive stakeholder interviews, detailed current-state assessments, multi-year roadmaps, and governance frameworks exceeding startup needs. While these programs provide value for complex enterprises, they often represent over-engineering for startups with focused products and lean teams.

More efficient approaches involve startups conducting initial classification and gap assessment internally using available guidance documents, then engaging specialized consultants for targeted support in specific technical areas like conformity assessment preparation or technical documentation review.

Mistake 3: Over-Engineering Compliance Infrastructure

Startups sometimes implement compliance programs modeled on enterprise approaches, creating extensive documentation systems, formal committee structures, and comprehensive process frameworks that exceed regulatory requirements and startup operational capacity.

The EU AI Act requires systematic approaches to risk management, quality management, and documentation, but does not mandate specific organizational structures or process formality levels. Startups can implement compliant systems using lean methodologies appropriate to organizational size and complexity.

Over-engineered compliance creates several problems. First, extensive documentation requires ongoing maintenance consuming development resources. Second, formal processes slow product iteration and feature development. Third, compliance overhead becomes difficult to justify to investors focused on product development and growth metrics.

Proportionate implementation focuses on meeting regulatory substance while using minimal viable process frameworks that scale with organizational growth.

Mistake 4: Delaying Compliance Until Funding Rounds

Some startups deliberately delay compliance activities until funding rounds or major customer opportunities create immediate pressure. This approach creates several risks. First, compressed timelines increase costs through rushed implementations and emergency consulting engagements. Second, compliance gaps discovered late may require architectural changes expensive to implement in production systems. Third, compliance readiness affects investor due diligence and customer procurement decisions.

Early compliance planning—even before full implementation—enables architectural decisions that facilitate future compliance, avoiding costly retrofitting. For example, implementing logging infrastructure, documentation templates, and data governance frameworks during initial development costs far less than retrofitting these capabilities into production systems.
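As an example of logging built in from the start, the sketch below writes one structured record per AI output using Python's standard `logging` and `json` modules. The logger name and the record fields are assumptions chosen for illustration; the fields a given system actually needs depend on its classification and should be confirmed against the record-keeping requirements.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("ai_audit")  # hypothetical logger name


def build_audit_record(model_version, input_ref, output_summary, reviewer=None):
    """Assemble one audit record; field names are illustrative, not prescribed."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_ref": input_ref,            # reference to the input, not raw data
        "output_summary": output_summary,
        "human_reviewer": reviewer,        # None when no human reviewed the output
    }


def log_inference(model_version, input_ref, output_summary, reviewer=None):
    """Emit the record as one JSON line and return it for further use."""
    record = build_audit_record(model_version, input_ref, output_summary, reviewer)
    logger.info(json.dumps(record, sort_keys=True))
    return record
```

Storing a reference to the input rather than the input itself keeps personal data out of the log stream, which is a design choice rather than a requirement stated in this guide.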

Mistake 5: Treating Compliance as Purely Legal Exercise

Startups sometimes delegate EU AI Act compliance entirely to legal teams without technical team involvement. This approach fails because AI Act requirements extend deeply into technical architecture, development processes, and operational procedures.

Article 11 technical documentation requirements demand detailed system architecture descriptions, model specifications, training data characteristics, and validation methodologies²². Legal teams cannot produce this documentation without substantial technical team involvement. Similarly, Article 9 risk management systems require ongoing technical team participation in risk identification, analysis, and mitigation²³.

Effective compliance requires cross-functional collaboration with legal teams interpreting requirements, technical teams implementing controls, and business stakeholders validating that compliance approaches support business objectives.

Comprehensive Compliance Implementation Roadmap

The following roadmap segments implementation by startup maturity level, recognizing that seed-stage startups face different resource constraints and priorities than Series A or B companies. Both paths ultimately achieve compliance but with different pacing and resource allocation.

Pre-Implementation: Classification and Scoping (2-4 weeks)

All startups regardless of maturity level should begin with systematic classification and scoping before investing in detailed compliance activities.

Activities:

1. Use Case Documentation: Create comprehensive documentation of all intended use cases, including primary applications, secondary features, and customer deployment contexts. This documentation should specify what decisions the AI system makes or influences, who is affected, and what potential harms could result from system failures or biases.

2. Annex III Evaluation: Systematically evaluate applicability of each Annex III category to documented use cases. Document reasoning for each category’s applicability or non-applicability, creating an audit trail supporting classification conclusions.

3. Material Influence Assessment: For systems potentially falling within Annex III categories, assess whether the system produces outputs materially influencing outcomes. Evaluate typical human involvement levels, decision-maker reliance on system outputs, and practical authority to override or disregard system recommendations.

4. Classification Documentation: Compile classification rationale documentation including use case descriptions, Annex III analysis, material influence assessment, and final classification determination with supporting reasoning.

5. Legal Review: Engage legal counsel with EU AI Act expertise to review classification documentation and validate conclusions. This review should identify classification uncertainties requiring additional analysis or conservative assumptions.

Time Investment: 40-80 hours depending on system complexity and use case variety

Outcome: Definitive classification determination guiding subsequent compliance activities

Path A: Seed Stage / Pre-Revenue Startups (Months 1-6)

Seed-stage startups typically operate with 5-20 employees, limited funding, and focus on product-market fit validation. Compliance implementation should focus on building foundational capabilities that scale with growth while meeting immediate regulatory requirements.

Phase 1: Foundation and Documentation Framework (Months 1-2)

For Limited Risk Systems:

Limited risk systems face only transparency obligations under Article 50. Seed-stage startups should implement:

1. User Disclosure Mechanisms: Design and implement clear disclosure that users are interacting with AI systems. For chatbots, this includes prominent notification before or during first interaction. For emotion recognition or biometric categorization, disclosure must occur before processing²⁴.

2. Transparency Documentation: Document disclosure mechanisms, user interface elements, and notification delivery methods. This documentation demonstrates compliance during customer procurement reviews or investor due diligence.

3. Monitoring Implementation: Establish basic monitoring confirming disclosure delivery and tracking user interactions with transparency information.

Time Investment: 20-40 hours
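For a chatbot, the three items above can be prototyped in a few lines. The following is a minimal sketch, assuming a hypothetical `ChatSession` object and placeholder disclosure wording; real wording and placement should be reviewed against Article 50.

```python
from dataclasses import dataclass, field

AI_DISCLOSURE = "You are chatting with an AI assistant."  # placeholder wording


@dataclass
class ChatSession:
    session_id: str
    disclosure_shown: bool = False
    transcript: list = field(default_factory=list)


def send_reply(session: ChatSession, reply_text: str) -> list:
    """Prepend the disclosure to the first reply of a session and record delivery."""
    messages = []
    if not session.disclosure_shown:
        messages.append(AI_DISCLOSURE)
        session.disclosure_shown = True
    messages.append(reply_text)
    session.transcript.extend(messages)
    return messages


def disclosure_rate(sessions) -> float:
    """Share of sessions with any messages in which the disclosure was delivered."""
    active = [s for s in sessions if s.transcript]
    if not active:
        return 1.0
    return sum(s.disclosure_shown for s in active) / len(active)
```

Tracking `disclosure_rate` over time gives the basic monitoring evidence mentioned in item 3.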

For High-Risk Systems:

High-risk classification requires more extensive foundation work:

1. Technical Documentation Framework: Establish documentation structure aligned with Annex IV requirements. Create templates for system architecture documentation, model cards, data governance records, and risk assessment documentation²⁵. Initial documentation need not be comprehensive but should establish frameworks that populate as development proceeds.

2. Version Control System: Implement systematic version control for all compliance documentation using tools like Git, enabling audit trails showing documentation evolution and decision-making history.

3. Risk Management Framework: Establish basic risk management procedures including risk identification methodology, analysis criteria, and documentation templates. Initial risk register should identify obvious technical risks (model bias, performance degradation) and use-case risks (individual harm scenarios).

4. Data Governance Procedures: Document data collection sources, establish data quality standards, and implement basic data lineage tracking showing data flow from acquisition through preprocessing to model training²⁶.

Time Investment: 80-120 hours

Phase 2: Core Implementation (Months 3-4)

For High-Risk Systems:

1. Detailed Technical Documentation: Complete Annex IV technical documentation including:

- System architecture with component specifications

- AI model technical specifications and model cards

- Training, validation, and testing data descriptions

- Performance metrics and validation results

- Known limitations and failure modes

2. Risk Assessment Execution: Conduct comprehensive risk assessment following Article 9 requirements:

- Systematic risk identification across technical, use-case, and compliance domains

- Impact and probability analysis with quantitative metrics where possible

- Risk mitigation strategy development with specific technical controls

- Residual risk assessment after mitigation implementation²⁷

3. Human Oversight Implementation: Design and implement human oversight mechanisms per Article 14 requirements, ensuring oversight personnel have appropriate training, authority to override system outputs, and access to information enabling informed judgment²⁸.

4. Quality Management Basics: Establish quality management procedures covering development processes, testing methodologies, and change management. Seed-stage startups should focus on documenting actual practices rather than creating extensive formal procedures that may not reflect reality²⁹.

Time Investment: 120-160 hours
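A risk register supporting the assessment in item 2 does not need dedicated tooling at seed stage. The sketch below assumes a simple 1-5 likelihood and impact scale and a review threshold of 12; both are illustrative conventions rather than anything the regulation prescribes, and the `Risk` class is a hypothetical structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Risk:
    risk_id: str
    description: str
    likelihood: int                          # 1 (rare) to 5 (almost certain)
    impact: int                              # 1 (negligible) to 5 (severe)
    mitigation: str = ""
    residual_likelihood: Optional[int] = None  # set once mitigation is in place
    residual_impact: Optional[int] = None

    def score(self) -> int:
        """Inherent risk score before mitigation."""
        return self.likelihood * self.impact

    def residual_score(self) -> int:
        """Score after mitigation; falls back to inherent values when unset."""
        likelihood = self.residual_likelihood or self.likelihood
        impact = self.residual_impact or self.impact
        return likelihood * impact


def needs_attention(register, threshold: int = 12):
    """IDs of risks whose residual score is still at or above the threshold."""
    return [r.risk_id for r in register if r.residual_score() >= threshold]
```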

Phase 3: Pre-Deployment Validation (Month 5)

1. Internal Conformity Assessment: Conduct internal review of compliance readiness against all applicable requirements. High-risk systems intended for Annex III use cases undergo internal conformity assessment based on internal control (Annex VI) rather than third-party assessment³⁰.

2. Gap Analysis: Identify remaining compliance gaps and develop remediation plans with specific timelines and accountability.

3. Documentation Review: Comprehensive review of all compliance documentation ensuring completeness, accuracy, and consistency. Address documentation gaps before market entry.

Time Investment: 40-60 hours

Phase 4: Ongoing Compliance (Month 6+)

1. Post-Market Monitoring: Implement monitoring systems tracking AI system performance, user outcomes, and potential adverse impacts. Article 72 requires providers to run a post-market monitoring system throughout the system's operational lifetime³¹.

2. Incident Reporting Procedures: Establish procedures for identifying, documenting, and reporting serious incidents per Article 73 requirements³².

3. Documentation Maintenance: Implement regular documentation review cycles ensuring updates reflect system changes, monitoring findings, and operational learnings.

Time Investment: 10-20 hours monthly
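Adding up the estimates above gives a rough total for a seed-stage startup with a high-risk system. The short sketch below only sums the hour ranges already quoted for pre-implementation and Phases 1 to 3; the dictionary keys and `total_hours` helper are illustrative names.

```python
# (low, high) hour estimates quoted in this roadmap for Path A, high-risk systems.
PHASE_HOURS = {
    "pre_implementation": (40, 80),
    "phase_1_foundation": (80, 120),
    "phase_2_core": (120, 160),
    "phase_3_validation": (40, 60),
}


def total_hours(phases) -> tuple:
    """Sum the low and high ends of each phase estimate."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high
```

`total_hours(PHASE_HOURS)` returns `(280, 420)`, so the one-time effort is roughly 280-420 hours before the ongoing 10-20 hours per month begins.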

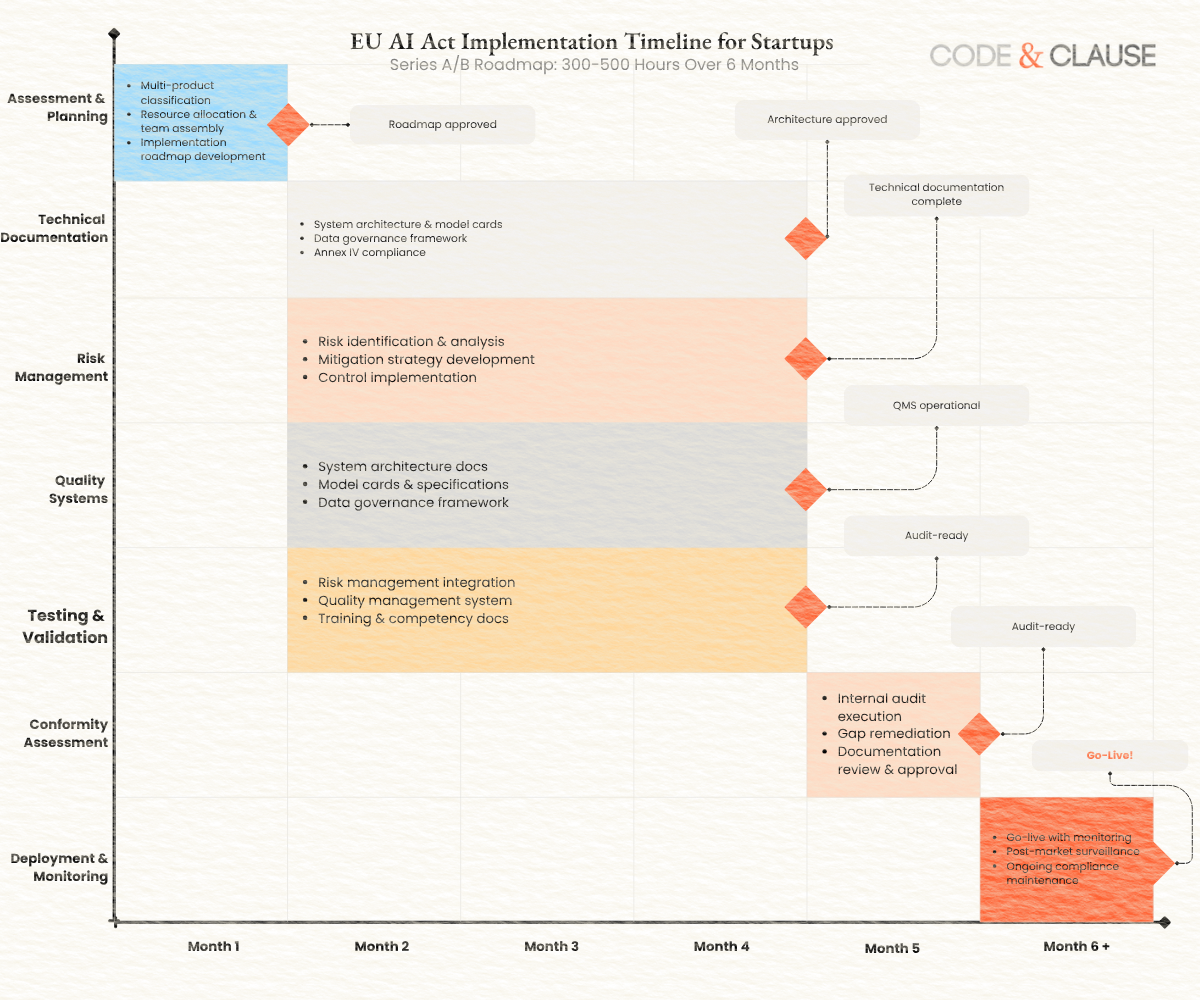

Path B: Series A/B Funded Startups (Months 1-6)

Later-stage startups typically have 20-100 employees, established products, customer traction, and more resources available for compliance investment. Implementation can proceed more rapidly with greater thoroughness.

Phase 1: Comprehensive Assessment and Planning (Month 1)

1. Multi-Product Classification: Assess classification for all product lines, features, and customer deployment scenarios. Series A/B startups often have multiple products or extensive feature sets requiring individual classification evaluation.

2. Resource Planning: Allocate dedicated resources for compliance implementation including technical leads, compliance coordinators, and external specialist support where needed.

3. Stakeholder Engagement: Conduct stakeholder workshops with engineering, product, legal, and business teams establishing shared understanding of compliance requirements and implementation approach.

4. Implementation Roadmap: Develop detailed implementation roadmap with specific deliverables, timelines, dependencies, and accountability assignments.

Time Investment: 60-80 hours

Phase 2: Parallel Implementation (Months 2-4)

Later-stage startups should pursue parallel implementation across multiple compliance domains:

1. Technical Documentation: Teams simultaneously complete technical architecture documentation, model documentation, and data governance documentation, coordinating to ensure consistency.

2. Risk Management: Dedicated risk management workstream conducts comprehensive risk assessment including technical risk analysis, use-case impact evaluation, and mitigation strategy development.

3. Quality Management System: Establish formal quality management system per Article 17 requirements including quality policy, procedures documentation, and management review processes³³.

4. Testing and Validation: Conduct comprehensive testing including accuracy testing, robustness testing, bias assessment, and adversarial testing where appropriate³⁴.

5. Human Oversight Design: Implement robust human oversight mechanisms with formal training programs, decision-making authority frameworks, and override procedures.

Time Investment: 200-300 hours across teams
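Override procedures are easier to evidence when every human decision is recorded next to the AI recommendation it reviewed. The following is a minimal sketch of such a record, assuming a JSON-lines log; the field names and the `log_decision` helper are hypothetical and not taken from the regulation.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class OversightRecord:
    """One human review of one AI recommendation."""
    case_id: str
    ai_recommendation: str
    human_decision: str
    reviewer_id: str
    rationale: str
    timestamp: str

    @property
    def overridden(self) -> bool:
        # An override is any case where the reviewer departed from the AI output.
        return self.ai_recommendation != self.human_decision


def log_decision(case_id, ai_recommendation, human_decision, reviewer_id, rationale=""):
    """Build a record and its JSON line; the caller appends the line to a log."""
    record = OversightRecord(
        case_id=case_id,
        ai_recommendation=ai_recommendation,
        human_decision=human_decision,
        reviewer_id=reviewer_id,
        rationale=rationale,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    line = json.dumps({**asdict(record), "overridden": record.overridden})
    return record, line


# Hypothetical example: a reviewer advances a case the system ranked low.
record, line = log_decision("case-001", "reject", "advance", "reviewer-7",
                            "Relevant experience not captured by the model")
print(line)
```

Keeping the rationale in the same record makes override rates and their reasons available to the internal audit in Phase 3.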

Phase 3: Conformity Assessment Preparation (Month 5)

1. Documentation Compilation: Assemble complete technical documentation packages per Annex IV requirements, organized for efficient auditor review.

2. Internal Audit: Conduct formal internal audit against all applicable requirements, documenting compliance evidence and identifying any remaining gaps.

3. External Review: Engage specialized consultants or legal counsel for independent compliance review providing external validation before market entry.

Time Investment: 80-120 hours

Phase 4: Deployment and Monitoring (Month 6+)

1. Post-Market Monitoring Infrastructure: Implement comprehensive monitoring infrastructure including automated performance tracking, user outcome monitoring, bias detection, and incident management systems.

2. Continuous Improvement: Establish regular review cycles incorporating monitoring findings into risk assessment updates, documentation revisions, and system improvements.

3. Regulatory Engagement: Monitor regulatory guidance developments, participate in industry associations, and maintain awareness of enforcement actions and interpretation evolution.

Time Investment: 20-40 hours monthly
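Summing the Path B estimates above gives 340-500 hours for Phases 1-3, and counting the first month of ongoing monitoring adds another 20-40 hours. The short sketch below reproduces that arithmetic so a team can substitute its own figures; the function name and the choice of how many monitoring months to include are assumptions.

```python
# Hour ranges (low, high) as stated for each Path B phase.
PATH_B_PHASES = {
    "Phase 1: Comprehensive assessment and planning": (60, 80),
    "Phase 2: Parallel implementation": (200, 300),
    "Phase 3: Conformity assessment preparation": (80, 120),
}
MONTHLY_MONITORING = (20, 40)  # Phase 4, per month


def total_hours(phases, monitoring, monitoring_months=0):
    """Return (low, high) totals for the phases plus N months of monitoring."""
    low = sum(lo for lo, _ in phases.values()) + monitoring[0] * monitoring_months
    high = sum(hi for _, hi in phases.values()) + monitoring[1] * monitoring_months
    return low, high


print(total_hours(PATH_B_PHASES, MONTHLY_MONITORING))
print(total_hours(PATH_B_PHASES, MONTHLY_MONITORING, monitoring_months=1))
```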

💡 Quick Takeaway – Implementation Paths:

- Seed stage (limited risk): 60-100 hours total over 6 months

- Seed stage (high-risk): 240-340 hours total over 6 months

- Series A/B (high-risk): 340-500 hours total across Phases 1-3, plus 20-40 hours monthly from month 6 (distributed across team)

Startup Scenarios by Vertical

Understanding compliance requirements within specific startup contexts provides practical guidance for classification and implementation planning. The following scenarios represent common startup AI applications with representative classification analysis.

Scenario 1: B2B SaaS with AI-Enhanced Features

Example: CustomerFlow AI, a Series A customer relationship management platform incorporating AI-powered lead scoring, email response suggestions, and meeting scheduling optimization.

Classification Analysis:

The AI features provide business productivity enhancement without affecting individual access to opportunities or services. Lead scoring assists sales teams but does not determine employment decisions, credit access, or other Annex III use cases. Email response suggestions and scheduling optimization serve auxiliary functions supporting human-directed activities.

Classification: Minimal risk (no EU AI Act-specific obligations; general product safety and consumer protection laws continue to apply)

Compliance Requirements:

- No EU AI Act-specific obligations

- Standard software product requirements apply

- Consider voluntary transparency about AI features in marketing materials

Implementation Approach:

- Document classification rationale for customer questions

- Monitor use case evolution if customers deploy system in adjacent applications

- Maintain awareness of potential high-risk applications customers might request

Scenario 2: HR Tech with Recruitment Automation

Example: TalentMatch Pro, a seed-stage recruitment platform using AI to screen resumes, rank candidates, and suggest interview questions based on job requirements and candidate profiles.

Classification Analysis:

Resume screening and candidate ranking directly fall within Annex III Section 4 covering AI systems for recruitment and selection of natural persons³⁵. The system performs tasks directly related to employment decisions affecting individuals’ access to economic opportunities. Even with human recruiters reviewing recommendations, the AI system materially influences outcomes by determining which candidates receive consideration.

Classification: High-risk (Annex III, Section 4)

Compliance Requirements:

- Full Article 9 risk management system

- Article 10 data governance including training data bias assessment

- Article 11 technical documentation per Annex IV

- Article 14 human oversight ensuring recruiters can override recommendations

- Article 15 accuracy and robustness testing including fairness metrics

- Internal conformity assessment per Annex VI

- Registration in the EU database per Article 49 (the database itself is established under Article 71)³⁶

Implementation Approach:

- Prioritize bias assessment and fairness testing given discrimination risks

- Implement comprehensive audit logging showing human recruiter decisions

- Develop training programs for recruiter users covering system limitations

- Establish monitoring tracking demographic outcome patterns

- Budget 120-180 hours for initial compliance implementation (seed stage)
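Monitoring demographic outcome patterns can start with selection rates per group. The sketch below computes, for each group, the share of candidates advanced and its ratio to the highest-rate group. The 0.8 review threshold is an assumption borrowed from the US "four-fifths" rule of thumb; the AI Act does not prescribe a numeric threshold, and the group labels and data are hypothetical.

```python
from collections import Counter

REVIEW_THRESHOLD = 0.8  # ASSUMPTION: illustrative trigger for a closer look


def selection_rates(records):
    """records: iterable of (group, advanced) pairs, advanced being True/False."""
    totals, selected = Counter(), Counter()
    for group, advanced in records:
        totals[group] += 1
        if advanced:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's selection rate relative to the highest-rate group."""
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening outcomes: 5 of 10 advanced in group_a, 3 of 10 in group_b.
records = ([("group_a", True)] * 5 + [("group_a", False)] * 5
           + [("group_b", True)] * 3 + [("group_b", False)] * 7)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = [group for group, ratio in ratios.items() if ratio < REVIEW_THRESHOLD]
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation, not proof of discrimination; small samples in particular produce noisy ratios.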

Ethical Considerations:

HR technology intersects directly with fundamental rights protected under the EU Charter of Fundamental Rights, particularly non-discrimination (Article 21) and right to fair working conditions (Article 31)³⁷. Beyond legal compliance, startups should consider:

- Whether training data represents diverse candidate populations across protected characteristics

- How algorithm design choices might systematically advantage or disadvantage demographic groups

- Whether outcome monitoring can detect emerging bias patterns before widespread harm occurs

- How transparency about AI use affects candidate trust and employer brand

Scenario 3: FinTech Credit Scoring

Example: CreditSense, a Series A startup providing AI-powered credit scoring for small business lending, analyzing alternative data sources beyond traditional credit reports.

Classification Analysis:

Credit scoring systems explicitly fall within Annex III Section 5(b) as AI systems intended to evaluate creditworthiness of natural persons or establish their credit score³⁸. While CreditSense serves business lending, small business credit often requires personal guarantees from business owners, bringing natural persons within scope.

Classification: High-risk (Annex III, Section 5)

Compliance Requirements:

- Comprehensive risk management addressing discrimination risks

- Extensive data governance given alternative data sources

- Rigorous bias testing across protected demographic characteristics

- Clear documentation of credit decision factors and weighting

- Human oversight by lending officers with credit expertise

- Post-market monitoring tracking lending outcomes by demographic groups

Implementation Approach:

- Engage legal counsel for GDPR intersection given personal financial data

- Implement statistical fairness testing using multiple fairness metrics

- Develop detailed model explainability showing credit decision factors

- Establish ongoing bias monitoring with quarterly fairness audits

- Consider third-party fairness audit before market launch

- Budget 200-300 hours for comprehensive compliance program (Series A)
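Testing with multiple fairness metrics matters because a model can look balanced on one and skewed on another. As a minimal sketch, the code below computes two common metrics in plain Python: demographic parity difference (gap in approval rates) and equal opportunity difference (gap in approval rates among applicants who actually repaid). The group labels and data are hypothetical, and which metrics are appropriate for a given lending product is a judgment the source leaves open.

```python
def rate(values):
    """Mean of an iterable of 0/1 values; 0.0 when empty."""
    values = list(values)
    return sum(values) / len(values) if values else 0.0


def demographic_parity_difference(y_pred, groups, a, b):
    """Approval rate of group a minus approval rate of group b."""
    rate_a = rate(p for p, g in zip(y_pred, groups) if g == a)
    rate_b = rate(p for p, g in zip(y_pred, groups) if g == b)
    return rate_a - rate_b


def equal_opportunity_difference(y_true, y_pred, groups, a, b):
    """True positive rate of group a minus that of group b."""
    tpr_a = rate(p for t, p, g in zip(y_true, y_pred, groups) if g == a and t == 1)
    tpr_b = rate(p for t, p, g in zip(y_true, y_pred, groups) if g == b and t == 1)
    return tpr_a - tpr_b


# Hypothetical data: y_true = 1 means the loan was repaid, y_pred = 1 means approved.
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

dpd = demographic_parity_difference(y_pred, groups, "a", "b")
eod = equal_opportunity_difference(y_true, y_pred, groups, "a", "b")
print(f"Demographic parity difference: {dpd:.2f}")
print(f"Equal opportunity difference: {eod:.2f}")
```

Running the same functions on each quarterly audit sample gives a comparable trend line for the ongoing bias monitoring described above.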

Ethical Considerations:

Financial services AI systems affect individuals’ economic opportunities and financial security. Beyond compliance obligations:

- Assess whether alternative data sources systematically exclude or disadvantage underserved populations

- Consider whether credit scoring algorithms perpetuate historical lending discrimination patterns

- Evaluate transparency mechanisms enabling applicants to understand credit decisions

- Establish appeals procedures allowing individuals to challenge algorithmic determinations

Scenario 4: HealthTech Diagnostic Support

Example: MediScan AI, a Series B medical imaging startup providing AI analysis of diagnostic images (X-rays, MRIs) to assist radiologists in identifying potential pathologies.

Classification Analysis:

Medical device AI falls under dual classification. First, medical diagnostic AI systems constitute medical devices under Medical Device Regulation (EU) 2017/745³⁹. Second, they qualify as high-risk under EU AI Act Annex I as AI systems serving as safety components in medical devices subject to third-party conformity assessment⁴⁰.

Classification: High-risk (Annex I – medical device safety component)

Compliance Requirements:

- Full EU AI Act high-risk system requirements

- Medical Device Regulation compliance

- Coordinated conformity assessment addressing both regulations

- Clinical validation demonstrating diagnostic accuracy

- Risk management addressing both patient safety and AI-specific risks

- Post-market surveillance integrating MDR and AI Act requirements

Implementation Approach:

- Engage regulatory consultants with both MDR and AI Act expertise

- Conduct early dialogue with notified bodies regarding conformity assessment approach

- Implement quality management system satisfying both ISO 13485 and AI Act Article 17

- Plan 12-18 month regulatory pathway given medical device complexity

- Budget substantial resources given dual regulatory framework (300+ hours)

Scenario 5: EdTech Personalized Learning

Example: LearnPath, a seed-stage adaptive learning platform using AI to personalize content delivery, assess student understanding, and recommend learning resources.

Classification Analysis:

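Educational AI classification depends on specific functionality. Annex III Section 3 designates as high-risk AI systems intended for determining access to educational institutions, assessing learning outcomes for steering learning paths, or monitoring exam integrity⁴¹. LearnPath’s content personalization and resource recommendations support learning but do not determine educational access or assess outcomes affecting educational progression. This conclusion holds only while the platform’s assessment of student understanding feeds content selection inside the product; if institutions used those assessments to evaluate learning outcomes or steer formal learning paths, the Annex III criteria above would apply.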

Classification: Limited risk (personalization features) with careful monitoring for high-risk feature expansion

Compliance Requirements:

- Article 50 transparency obligations if system qualifies as limited risk

- Clear disclosure to students and educators about AI-driven personalization

- Documentation supporting classification rationale

- Regular review of feature additions for high-risk functionality

Implementation Approach:

- Implement student/educator notifications about AI use

- Document classification analysis for investor and customer due diligence

- Establish feature review process assessing new capabilities for classification impact

- Monitor customer deployments preventing use in high-risk contexts (grading, admissions)

- Budget 30-50 hours for transparency implementation and classification maintenance
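The feature review process can be partly mechanized by tagging each planned feature with the functions it performs and checking those tags against the high-risk education functions named in the classification analysis. This is a sketch under stated assumptions: the tag vocabulary and the `review_feature` helper are hypothetical, and a match only triggers a human classification review rather than deciding the outcome.

```python
# Tags are hypothetical; descriptions paraphrase the Annex III functions cited above.
HIGH_RISK_EDUCATION_FUNCTIONS = {
    "admissions": "determining access to educational institutions",
    "outcome_assessment": "assessing learning outcomes for steering learning paths",
    "exam_monitoring": "monitoring exam integrity",
}


def review_feature(name, function_tags):
    """Flag a feature for classification review if any tag is a high-risk function."""
    matched = sorted(set(function_tags) & set(HIGH_RISK_EDUCATION_FUNCTIONS))
    return {
        "feature": name,
        "needs_reclassification_review": bool(matched),
        "matched": matched,
    }


print(review_feature("Content recommendations", ["personalization"]))
print(review_feature("Automated grading", ["outcome_assessment"]))
```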

💡 Quick Takeaway – Vertical Compliance Summary:

- B2B SaaS (minimal risk): Classification documentation only

- HR Tech (high-risk): Full compliance program, 120-180 hours (seed)

- FinTech (high-risk): Extensive bias testing, 200-300 hours (Series A)

- HealthTech (high-risk): Dual regulatory framework, 300+ hours, 12-18 months

- EdTech (limited risk): Transparency implementation, 30-50 hours

Resource Optimization: Free, Low-Cost, and Strategic Investment

Startups must balance compliance thoroughness with resource constraints. Strategic resource allocation focuses investment on areas providing compliance value while leveraging free and low-cost resources where appropriate.

Official Free Resources

EU AI Office Guidance

The European Commission’s AI Office publishes official guidance documents interpreting AI Act requirements⁴². These documents provide authoritative interpretation at no cost. Key guidance documents include:

- Guidelines on prohibited AI practices

- High-risk AI systems classification guidance

- Technical documentation templates and examples

- Conformity assessment procedures

Startups should monitor the AI Office website regularly for new guidance publications addressing emerging interpretation questions.

Access: https://digital-strategy.ec.europa.eu/en/policies/ai-office

National Competent Authority Support

Article 70(8) provides that national competent authorities may give guidance and advice on implementing the regulation, in particular to SMEs including startups, and Article 62 requires member states to take supporting measures for SMEs and startups⁴³. Many EU member states establish dedicated support programs including:

- Technical guidance on classification and requirements

- Template documentation and compliance tools

- Training programs and workshops

- Direct consultation for specific compliance questions

Startups should identify the competent authority in their target EU member state and explore available support programs. These resources provide jurisdiction-specific guidance complementing general EU-level documentation.

NIST AI Risk Management Framework

The US National Institute of Standards and Technology publishes the AI Risk Management Framework providing systematic risk management methodology⁴⁴. While developed for US context, the framework aligns substantially with EU AI Act risk management requirements under Article 9. NIST provides free resources including:

- Risk management playbook and implementation guidance

- Risk assessment templates and worksheets

- Cross-reference mapping to various regulatory frameworks

Startups implementing the NIST AI RMF can leverage this work for EU AI Act compliance with jurisdiction-specific adaptations.

Access: https://www.nist.gov/itl/ai-risk-management-framework

Industry Association Resources

Industry associations increasingly publish AI governance resources including:

- Partnership on AI: Responsible AI implementation resources

- Future of Privacy Forum: AI privacy and fairness guidance

- IEEE: Technical standards for algorithmic bias and transparency

While not providing legal compliance advice, these resources offer practical implementation guidance addressing technical challenges common across jurisdictions.

Low-Cost Implementation Tools

Documentation Management

Startups can implement compliant documentation systems using affordable tools:

- Version Control: Git and GitHub provide free version control for documentation alongside code, ensuring audit trails and change history

- Collaborative Documentation: Tools like Notion, Confluence, or Google Workspace enable cross-functional documentation collaboration

- Document Generation: Markdown-based documentation enables automated generation from code comments and system metadata
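Generating documentation from system metadata keeps it in step with the code when the generator runs on every release. The sketch below renders a short Markdown summary from a metadata dictionary. The field names are illustrative assumptions and do not reproduce the Annex IV content list; the system name reuses the fictional TalentMatch Pro example.

```python
def render_system_card(meta: dict) -> str:
    """Render a Markdown summary of an AI system from structured metadata."""
    lines = [
        f"# {meta['name']}",
        "",
        f"- Version: {meta['version']}",
        f"- Intended purpose: {meta['intended_purpose']}",
        f"- Classification: {meta['classification']}",
        "",
        "## Training data sources",
        "",
    ]
    lines += [f"- {source}" for source in meta["data_sources"]]
    return "\n".join(lines) + "\n"


# Hypothetical metadata, typically kept in version control next to the model code.
metadata = {
    "name": "TalentMatch Pro",
    "version": "1.4.0",
    "intended_purpose": "Resume screening and candidate ranking",
    "classification": "High-risk (Annex III, Section 4)",
    "data_sources": ["Historical applications", "Job descriptions"],
}

card = render_system_card(metadata)
print(card)
```

Committing the generated file alongside the metadata gives the audit trail and change history described in the version control bullet.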

Risk Management Software

Several platforms offer startup-friendly risk management capabilities:

- Generic risk management platforms provide structured risk registers, assessment workflows, and reporting

- Spreadsheet-based risk management using Google Sheets or Excel provides zero-cost alternative with adequate structure for seed-stage startups
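A spreadsheet risk register becomes more useful once it is exported and ranked automatically. As a minimal sketch, the code below reads a CSV export and sorts risks by likelihood times severity. The column names, the 1-5 scales, and the scoring rule are assumptions; teams should use whatever scheme their risk management process defines.

```python
import csv
import io

# ASSUMPTION: the spreadsheet is exported with these columns, scales are 1-5.
SAMPLE_EXPORT = """risk_id,description,likelihood,severity,mitigation,owner
R1,Training data under-represents older applicants,3,4,Re-sample and re-test,ML lead
R2,Recruiters accept rankings without review,4,5,Mandatory review step,Product
R3,Model drift after job market shift,2,3,Quarterly re-validation,ML lead
"""


def load_register(csv_text: str):
    """Parse the export and return rows sorted by descending risk score."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    for row in rows:
        row["score"] = int(row["likelihood"]) * int(row["severity"])
    return sorted(rows, key=lambda row: row["score"], reverse=True)


register = load_register(SAMPLE_EXPORT)
for row in register:
    print(f"{row['risk_id']} score={row['score']:>2} owner={row['owner']}")
```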

Monitoring Infrastructure

Open-source monitoring tools enable compliance monitoring without substantial cost:

- Logging infrastructure using ELK stack (Elasticsearch, Logstash, Kibana) or cloud-native logging services

- Metrics collection using Prometheus and Grafana

- Alerting systems monitoring key risk indicators and performance thresholds

When to Engage Expert Support

Strategic expert engagement focuses investment on areas requiring specialized expertise while maintaining internal ownership of routine compliance activities.

Classification Uncertainty

Engage legal counsel with EU AI Act expertise when:

- Classification involves novel use cases without clear regulatory precedent

- System functionality straddles multiple Annex III categories

- Material influence assessment requires interpretation of ambiguous deployment contexts

- Classification affects major business decisions (market entry, funding, M&A)

Conformity Assessment Preparation

Consider conformity assessment consultants when:

- Approaching market entry with high-risk system requiring conformity assessment

- Internal team lacks experience with technical documentation at regulatory audit quality

- Timeline constraints require accelerated conformity assessment preparation

- Notified body engagement requires specialized procedural knowledge

Technical Implementation Challenges

Engage technical specialists for:

- Bias assessment and fairness testing requiring specialized statistical methodology

- Security and robustness testing beyond internal team capabilities

- Model explainability implementation for complex architectures

- Data governance for sensitive data types (health, financial, biometric)

Avoid Premature Expert Engagement

Defer expert engagement for:

- Initial classification and gap assessment (complete internally first)

- Standard technical documentation (leverage templates and internal knowledge)

- Basic risk assessment (establish internal capability before outsourcing)

- Routine compliance monitoring (maintain internal ownership)

DIY Implementation Approach

Startups with technical capability can implement substantial compliance work internally:

Phase 1: Self-Assessment (Internal)

- Classification using official guidance and legal team review

- Gap assessment against applicable requirements

- Resource planning and timeline development

Phase 2: Template-Based Implementation (Primarily Internal)

- Technical documentation using Annex IV templates

- Risk assessment using structured frameworks

- Quality procedures documenting actual practices

Phase 3: Targeted Expert Review (Hybrid)

- Legal review of classification and key compliance decisions

- Technical review of fairness testing methodology

- Pre-submission conformity assessment review

Phase 4: Ongoing Compliance (Internal with Periodic External Audit)

- Post-market monitoring and incident management

- Documentation updates and risk reassessment

- Annual external compliance audit for validation

This approach minimizes expert costs while ensuring critical decisions receive appropriate review.

Multi-Jurisdictional Compliance Considerations

Startups pursuing global expansion face compliance requirements across multiple jurisdictions. Understanding relationships between EU AI Act and other regulatory frameworks enables efficient multi-jurisdictional strategies.

United States AI Governance Landscape

The United States lacks comprehensive federal AI legislation comparable to the EU AI Act. Instead, US AI governance operates through sector-specific regulations, executive orders, and voluntary frameworks⁴⁵.

NIST AI Risk Management Framework

Voluntary framework providing systematic risk management methodology applicable across sectors. Many federal agencies reference the NIST AI RMF in procurement requirements and regulatory guidance.

Executive Order on Safe, Secure, and Trustworthy AI (October 2023)

Established requirements for AI systems used by federal agencies and contractors⁴⁶. The order was rescinded in January 2025, so startups should check which federal requirements currently apply; it remains useful as a signal of the governance themes federal agencies have emphasized.

State-Level Legislation

Several US states and cities have proposed or enacted AI-specific legislation addressing employment AI (Illinois Artificial Intelligence Video Interview Act, New York City Local Law 144), algorithmic discrimination (Colorado SB 24-205), and automated decision systems (various states).

Key Example – Colorado SB 24-205: Colorado’s Artificial Intelligence Act establishes requirements for high-risk AI systems including algorithmic discrimination assessments, impact assessments, and consumer notification rights⁴⁷. The law demonstrates convergence toward EU AI Act-style risk-based regulation at state level.

Compliance Synergies with EU AI Act

Startups implementing EU AI Act compliance build capabilities supporting US requirements:

- Risk management systems satisfy NIST AI RMF expectations and Colorado SB 24-205 risk assessment requirements

- Bias testing and fairness assessments address Colorado algorithmic discrimination provisions and New York employment AI transparency requirements

- Technical documentation supports federal contractor obligations under Executive Order 14110

- Transparency mechanisms align with state disclosure laws requiring notification of AI use in employment, credit, and consumer contexts

- Data governance procedures developed for EU Article 10 requirements support US state privacy laws (California CPRA, Virginia CDPA) requiring data minimization and purpose limitation

United Kingdom AI Regulation

The UK pursues sector-based AI regulation rather than horizontal AI-specific legislation⁴⁸. Existing regulators (ICO for data protection, FCA for financial services, CQC for healthcare) incorporate AI governance within sector mandates.

AI Regulatory Principles

UK government guidance establishes cross-sectoral principles:

- Safety, security and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

Compliance Synergies with EU AI Act

EU AI Act implementation addresses UK regulatory expectations:

- Risk management (Article 9) satisfies safety and robustness principles

- Technical documentation (Annex IV) provides transparency and explainability evidence

- Bias assessment (Article 10 data governance) addresses fairness requirements

- Quality management (Article 17) demonstrates governance and accountability

- Human oversight (Article 14) enables contestability and redress mechanisms

Startups complying with EU AI Act can adapt documentation for UK sector regulators with supplementary materials addressing sector-specific requirements.

Canada Artificial Intelligence and Data Act (AIDA)

Canada’s proposed AIDA establishes requirements for high-impact AI systems⁴⁹. While not yet enacted, the framework resembles EU AI Act structure with risk-based approach and transparency requirements.

Proposed AIDA Requirements

- Impact assessments for high-impact systems

- Risk mitigation measures

- Transparency and explainability

- Human intervention rights

- Reporting obligations for adverse outcomes

Compliance Synergies with EU AI Act

EU AI Act compliance provides foundation for AIDA readiness:

- Risk assessment methodologies (Article 9) transfer directly to AIDA impact assessment requirements

- Technical documentation (Annex IV) satisfies AIDA transparency obligations

- Human oversight mechanisms (Article 14) align with AIDA human intervention rights

- Post-market monitoring (Article 72) supports AIDA adverse outcome reporting

Efficient Multi-Jurisdictional Strategy

Core Compliance Foundation

Implement comprehensive EU AI Act compliance as foundation addressing most stringent requirements globally. This approach creates compliance infrastructure adaptable to other jurisdictions.

The EU AI Act represents the most comprehensive AI-specific regulation currently in force. Organizations achieving EU compliance establish systematic capabilities that satisfy or exceed requirements in most other jurisdictions.

Jurisdiction-Specific Supplements

Add jurisdiction-specific elements as needed:

United States:

- NIST AI RMF mapping documentation showing how EU Article 9 risk management satisfies the NIST framework

- State-specific disclosures for Colorado (algorithmic discrimination), New York (employment AI), California (data privacy)

- Federal contractor compliance documentation for Executive Order 14110 requirements

United Kingdom:

- Sector regulator engagement documentation

- Principle-based compliance mapping showing how EU requirements satisfy UK safety, transparency, fairness, accountability, and contestability principles

- Sector-specific supplementary documentation (FCA for FinTech, ICO for data protection, CQC for HealthTech)

Canada:

- AIDA impact assessment documentation (once enacted)

- High-impact system determination methodology

- Adverse outcome reporting procedures

Documentation Architecture

Structure compliance documentation with:

- Core technical documentation applicable across jurisdictions (system architecture, model specifications, risk assessments, testing results)

- Jurisdiction-specific annexes addressing unique requirements (state law disclosures, sector regulator submissions, national authority registrations)

- Cross-reference matrices mapping core documentation to jurisdiction requirements, enabling efficient evidence location during audits or regulatory inquiries

This architecture minimizes documentation duplication while ensuring jurisdiction-specific compliance demonstration. Startups maintain single source of truth for technical compliance evidence while producing jurisdiction-specific views for regulatory engagement.
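A cross-reference matrix can live as a small data structure next to the documentation itself. The sketch below encodes a few of the mappings described in the synergy lists above and returns a jurisdiction-specific view. The structure and the `evidence_for` helper are assumptions, and the entries are a partial illustration rather than a complete or legally reviewed mapping.

```python
# Core document -> jurisdiction -> requirement it supports (partial, illustrative).
CROSS_REFERENCE = {
    "Risk management system (Article 9)": {
        "UK": "Safety, security and robustness principle",
        "US": "NIST AI RMF mapping; Colorado SB 24-205 risk assessment",
        "Canada": "AIDA impact assessment (proposed)",
    },
    "Technical documentation (Annex IV)": {
        "UK": "Appropriate transparency and explainability principle",
        "Canada": "AIDA transparency obligations (proposed)",
    },
    "Human oversight (Article 14)": {
        "UK": "Contestability and redress principle",
        "Canada": "AIDA human intervention rights (proposed)",
    },
}


def evidence_for(jurisdiction: str) -> dict:
    """Return the core documents relevant to one jurisdiction and what they support."""
    return {
        document: mapping[jurisdiction]
        for document, mapping in CROSS_REFERENCE.items()
        if jurisdiction in mapping
    }


for document, requirement in evidence_for("UK").items():
    print(f"{document} -> {requirement}")
```

During an audit or regulatory inquiry, the same lookup points reviewers to the single source document instead of a duplicated copy.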

💡 Quick Takeaway – Multi-Jurisdictional Efficiency: Comprehensive EU AI Act compliance provides 70-80% of requirements for US (federal + Colorado/NY/CA), UK sector regulation, and Canadian AIDA. Jurisdiction-specific supplements require 20-30% additional effort rather than parallel compliance programs.

Cost-Benefit Analysis and Investment Justification

Compliance investment requires business justification, particularly for resource-constrained startups. Understanding effort requirements, benefits, and return on investment supports informed decision-making.

Implementation Effort by Startup Stage and Classification

Seed Stage, Limited Risk

- Initial implementation: 40-60 hours

- Ongoing maintenance: 5-10 hours monthly

- Primary activities: Transparency implementation, classification documentation

Seed Stage, High-Risk

- Initial implementation: 200-300 hours

- Ongoing maintenance: 15-25 hours monthly

- Primary activities: Risk management, technical documentation, conformity assessment

Series A/B, High-Risk

- Initial implementation: 300-500 hours (distributed across team)

- Ongoing maintenance: 30-50 hours monthly

- Primary activities: Comprehensive compliance program, multiple product lines

These estimates assume internal implementation with targeted expert review. Full outsourcing to consultants typically doubles effective hours through knowledge transfer and coordination overhead.

Quantifiable Benefits

Market Access

EU market access represents the primary compliance benefit. For startups targeting European customers:

- Enterprise customers increasingly require AI Act compliance in procurement

- Channel partnerships often mandate regulatory compliance

- Public sector opportunities require demonstrated compliance

The value of market access depends on European revenue potential. Startups projecting substantial EU revenue should value market access at expected EU revenue over planning horizon.

Example: A FinTech startup projecting €2M EU ARR within 18 months values market access at €2M+ annually. Compliance investment enabling this access generates substantial return even with significant implementation effort.

Due Diligence Efficiency

Systematic compliance documentation streamlines due diligence during:

- Venture capital funding rounds

- Strategic partnership discussions

- Acquisition conversations

- Enterprise customer procurement

Efficient due diligence can accelerate funding timelines by 2-4 weeks, reduce legal costs by 30-50%, and improve deal certainty by addressing regulatory concerns proactively rather than reactively.

Operational Capabilities

Compliance implementation builds operational capabilities providing value beyond regulatory requirements:

- Risk management systems improve product quality and reliability by identifying technical issues before customer impact

- Technical documentation supports engineering onboarding and knowledge transfer, reducing onboarding time by 30-40%

- Data governance enhances data quality and ML model performance through systematic data quality monitoring

- Quality management procedures reduce defects and technical debt through systematic testing and validation

Competitive Differentiation

Early compliance creates competitive advantages:

- First-mover positioning in compliance-conscious markets, capturing customers before competitors achieve compliance

- Differentiation versus non-compliant competitors in enterprise sales cycles where procurement requires compliance evidence

- Premium pricing justification through demonstrated quality systems and risk management

- Partnership opportunities requiring compliant vendors, accessing distribution channels unavailable to non-compliant competitors

Return on Investment Framework

ROI Calculation

ROI = (Compliance Benefits – Compliance Costs) / Compliance Costs

Expressed as percentage showing return per unit of investment.

Example: Series A FinTech Startup (High-Risk Credit Scoring)

Implementation Effort:

- Initial implementation: 300 hours internal effort

- External legal review: 40 hours specialist consultation

- Ongoing compliance: 30 hours monthly

- Total Year 1: 340 hours initial + 360 hours ongoing = 700 hours

Quantifiable Benefits (Year 1):

- EU market access enabling €2M additional ARR

- Reduced customer procurement cycle time (2 months faster average, enabling 4 additional customers in Year 1)

- Improved data quality increasing model performance 3%, reducing false positives by 15%

- Investor confidence supporting higher valuation in Series B (estimated 10-15% valuation premium for compliance readiness)

Conservative ROI Calculation:

Primary benefit: EU market access generating €2M ARR with 70% gross margin = €1.4M gross profit

If 700 hours represents internal opportunity cost (alternative uses of engineering time), compliance generates:

- Direct revenue benefit: €1.4M

- Operational improvements: €50K (reduced customer acquisition costs, improved model performance)

- Total benefits: €1.45M

Even with conservative valuation of internal time, the return substantially exceeds investment, demonstrating strong positive ROI for startups with credible European market opportunities.
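The example stops short of a single ROI figure because the 700 hours are not priced. The sketch below completes the calculation under one loud assumption: a blended cost of €100 per hour applied to both internal effort and external legal review. That rate does not come from the example, so teams should replace it with their own loaded cost.

```python
def roi(benefits_eur: int, costs_eur: int) -> float:
    """ROI = (benefits - costs) / costs, as a ratio (multiply by 100 for percent)."""
    return (benefits_eur - costs_eur) / costs_eur


# Year 1 effort from the example: 300 internal + 40 external + 30 hours per month.
hours_year_1 = 300 + 40 + 30 * 12

# ASSUMPTION: blended hourly cost, not stated in the example.
ASSUMED_HOURLY_COST_EUR = 100
costs_eur = hours_year_1 * ASSUMED_HOURLY_COST_EUR

# Benefits from the example: 70% gross margin on EUR 2M ARR plus EUR 50K operational.
gross_profit_eur = 2_000_000 * 70 // 100
benefits_eur = gross_profit_eur + 50_000

year_1_roi = roi(benefits_eur, costs_eur)
print(f"Hours: {hours_year_1}, cost: EUR {costs_eur:,}, ROI: {year_1_roi:.1%}")
```

Because the benefit is dominated by EU revenue, the result is far more sensitive to the revenue projection than to the hourly rate; halving the projected ARR changes the outcome more than doubling the rate.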

Investment Timing Strategy

Early Investment Rationale

Implement compliance early when:

- Targeting EU customers within 12-18 months

- Seeking venture funding from EU investors or US investors focused on European expansion

- Building in high-risk categories where compliance affects architectural decisions

- Market positioning emphasizes trust and responsible AI as competitive differentiators

Early implementation enables architectural decisions supporting compliance, avoiding expensive retrofitting of production systems.

Deferred Investment Rationale

Defer detailed compliance when:

- No near-term EU expansion plans (24+ months)

- Minimal or limited risk classification requires, at most, basic transparency measures

- Product-market fit remains uncertain with potential pivot likelihood

- Resources fully consumed by core product development for initial market validation

However, even when deferring implementation, startups should complete classification assessment to inform architectural decisions.

Optimal Approach

Most startups benefit from phased timing:

- Immediate (now): Classification and architectural decisions enabling future compliance

- Near-term (6-9 months before EU entry): Detailed implementation and documentation

- Ongoing (post-launch): Continuous compliance maintenance and improvement

This approach avoids premature investment while ensuring foundational decisions support efficient future compliance implementation.
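The early and deferred criteria above can be expressed as a simple rule check. This is a minimal sketch, not a decision procedure from the regulation: the `StartupProfile` fields and the tie-break (any early signal outweighs deferral signals) are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StartupProfile:
    # All field names are hypothetical; they mirror the criteria listed above.
    months_to_eu_entry: Optional[int]  # None means no EU plans yet
    risk_class: str                    # "minimal", "limited", or "high"
    seeking_eu_focused_funding: bool
    trust_is_differentiator: bool
    pivot_likely: bool
    resources_fully_committed: bool


def recommend_timing(p):
    """Return 'early', 'defer', or 'phased' for detailed compliance work."""
    early_signals = [
        p.months_to_eu_entry is not None and p.months_to_eu_entry <= 18,
        p.seeking_eu_focused_funding,
        p.risk_class == "high",
        p.trust_is_differentiator,
    ]
    defer_signals = [
        p.months_to_eu_entry is None or p.months_to_eu_entry >= 24,
        p.risk_class == "minimal",
        p.pivot_likely,
        p.resources_fully_committed,
    ]
    # ASSUMPTION: any early signal wins over deferral signals.
    if any(early_signals):
        return "early"
    if any(defer_signals):
        return "defer"
    return "phased"


hr_tech = StartupProfile(12, "high", False, True, False, False)
print(recommend_timing(hr_tech))
```

Whatever the result, the classification assessment itself is still done immediately, as the phased approach describes.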


Action Plan and Implementation Resources

Startups should implement systematic action plans translating compliance requirements into specific activities with clear timelines and accountability.

Immediate Next Steps (This Week)

Action 1: Conduct Initial Classification Assessment

Using the classification methodology described earlier, conduct a preliminary assessment of the AI system's risk category. Document use cases, evaluate Annex III applicability, and determine a working classification.

Time Investment: 8-16 hours

Accountability: Technical lead + product manager

Output: Classification determination document

Action 2: Assess EU Market Timeline

Determine a realistic timeline for EU market entry based on product roadmap, customer pipeline, and go-to-market strategy. This timeline drives compliance implementation urgency.

Time Investment: 4-8 hours

Accountability: CEO/founder + business development

Output: EU market entry timeline with key milestones

Action 3: Review Official Guidance

Access EU AI Office guidance documents and review materials relevant to the startup’s classification and sector. Build a foundational understanding of the specific requirements.

Time Investment: 8-12 hours

Accountability: Compliance lead (or assigned founder)

Output: Annotated guidance documents with startup-specific notes

Action 4: Assemble Cross-Functional Team

Identify the technical, legal, and business stakeholders who will participate in compliance implementation. Schedule an initial kickoff meeting to establish roles and responsibilities.

Time Investment: 2-4 hours

Accountability: Project sponsor (typically CTO or COO)

Output: Compliance team roster with role definitions
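Adding up the time investments for the four actions gives a rough budget for the first week. The sketch below uses the hour ranges stated above. The short action labels are paraphrased, and the total ignores that the work is spread across several people.

```python
# Hour ranges (low, high) taken from the four actions above.
ACTIONS = {
    "Initial classification assessment": (8, 16),
    "EU market timeline assessment": (4, 8),
    "Official guidance review": (8, 12),
    "Cross-functional team assembly": (2, 4),
}


def total_hours(actions):
    """Sum the low and high ends of each action's hour range."""
    low = sum(lo for lo, _ in actions.values())
    high = sum(hi for _, hi in actions.values())
    return low, high


low, high = total_hours(ACTIONS)
print(f"First-week effort: {low}-{high} hours")  # 22-40 hours
```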

30-Day Action Plan

Week 1:

- Complete classification assessment and documentation

- Engage legal counsel for classification review (if high-risk or uncertain)

- Develop high-level implementation roadmap

Week 2:

- Conduct gap assessment against applicable requirements

- Identify critical path activities and dependencies

- Allocate resources and establish timeline

Week 3:

- Begin technical documentation using templates

- Initiate risk identification workshops

- Establish documentation infrastructure (version control, templates)

Week 4:

- Complete initial technical documentation draft

- Document risk register with preliminary analysis

- Review progress and adjust timeline if needed

6-Month Implementation Checklist

Month 1: Foundation

- ☐ Classification finalized and documented

- ☐ Gap assessment completed

- ☐ Implementation roadmap approved

- ☐ Resources allocated

- ☐ Documentation infrastructure established

Month 2: Core Documentation

- ☐ Technical documentation (Annex IV) drafted

- ☐ Risk assessment initiated

- ☐ Data governance procedures documented

- ☐ Quality management framework established

Month 3: Risk Management

- ☐ Comprehensive risk identification completed

- ☐ Risk analysis and prioritization finished

- ☐ Mitigation strategies developed

- ☐ Control implementation begun

Month 4: Implementation

- ☐ Technical controls implemented

- ☐ Human oversight mechanisms deployed

- ☐ Monitoring infrastructure established

- ☐ Testing and validation executed

Month 5: Validation

- ☐ Internal conformity assessment conducted

- ☐ Documentation review completed

- ☐ Gap remediation finished

- ☐ External review obtained (if applicable)

Month 6: Deployment Readiness

- ☐ All compliance requirements satisfied

- ☐ Post-market monitoring operational

- ☐ Incident reporting procedures established

- ☐ EU market entry approved
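For teams that keep documentation under version control, the checklist can also live as a small data structure next to the code. The following is one possible sketch. The item names are copied from the checklist above, while the `progress` and `next_open_item` helpers are hypothetical conveniences, not part of any official tooling.

```python
# Checklist items copied from the 6-month plan above.
CHECKLIST = {
    "Month 1: Foundation": [
        "Classification finalized and documented",
        "Gap assessment completed",
        "Implementation roadmap approved",
        "Resources allocated",
        "Documentation infrastructure established",
    ],
    "Month 2: Core Documentation": [
        "Technical documentation (Annex IV) drafted",
        "Risk assessment initiated",
        "Data governance procedures documented",
        "Quality management framework established",
    ],
    "Month 3: Risk Management": [
        "Comprehensive risk identification completed",
        "Risk analysis and prioritization finished",
        "Mitigation strategies developed",
        "Control implementation begun",
    ],
    "Month 4: Implementation": [
        "Technical controls implemented",
        "Human oversight mechanisms deployed",
        "Monitoring infrastructure established",
        "Testing and validation executed",
    ],
    "Month 5: Validation": [
        "Internal conformity assessment conducted",
        "Documentation review completed",
        "Gap remediation finished",
        "External review obtained (if applicable)",
    ],
    "Month 6: Deployment Readiness": [
        "All compliance requirements satisfied",
        "Post-market monitoring operational",
        "Incident reporting procedures established",
        "EU market entry approved",
    ],
}


def progress(completed):
    """Return {month: (items_done, items_total)} for a set of completed item names."""
    return {
        month: (sum(item in completed for item in items), len(items))
        for month, items in CHECKLIST.items()
    }


def next_open_item(completed):
    """Return the first (month, item) not yet completed, or None if all are done."""
    for month, items in CHECKLIST.items():
        for item in items:
            if item not in completed:
                return month, item
    return None


done = {"Classification finalized and documented", "Gap assessment completed"}
for month, (n_done, total) in progress(done).items():
    print(f"{month}: {n_done}/{total}")
print("Next:", next_open_item(done))
```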

Downloadable Resources

Startup Compliance Mini-Template (Coming Soon)

Purpose-built template package for startups including:

- Lean documentation framework optimized for startup scale

- Quick-start risk assessment methodology

- Cost-effective implementation approaches

- Vertical-specific guidance (HR tech, FinTech, EdTech, HealthTech)


Conclusion: Compliance as Strategic Foundation

For startups, EU AI Act compliance is neither an insurmountable barrier nor a mere bureaucratic checkbox. Instead, systematic compliance implementation builds operational capabilities that support product quality, risk management, and market access objectives beyond regulatory requirements.

Critical Insights for Startup Founders and Technical Teams

Classification Drives Everything: Accurate classification determines resource requirements and implementation approach. Most startups fall into minimal or limited risk categories requiring substantially less investment than high-risk compliance. Invest time in thorough classification before beginning detailed implementation.

Proportionate Implementation Succeeds: Compliance frameworks scale from lean seed-stage approaches to comprehensive Series A/B programs. Startups should implement compliance proportionate to organizational maturity, avoiding both under-investment creating regulatory exposure and over-investment consuming resources better directed toward product development.

Early Architecture Decisions Matter: Compliance readiness depends significantly on architectural decisions around logging, data governance, and monitoring infrastructure. Implementing these capabilities during initial development costs far less than retrofitting production systems.

Internal Capability Building Optimizes Costs: Startups with technical teams can implement substantial compliance work internally using official guidance, templates, and frameworks. Strategic expert engagement focuses investment on classification validation, specialized testing, and conformity assessment preparation rather than comprehensive outsourcing.

Multi-Jurisdictional Synergies Exist: EU AI Act compliance builds capabilities supporting requirements in other jurisdictions, including the US NIST AI Risk Management Framework (AI RMF), state-level AI laws (Colorado, New York, California), UK sector-based regulation, and emerging frameworks globally. Comprehensive EU implementation creates a foundation for efficient global expansion.

Ethics and Fundamental Rights Connect: Compliance intersects with broader ethical considerations around fairness, non-discrimination, and respect for fundamental rights protected under the EU Charter of Fundamental Rights. The EU’s diverse population across 27 member states and 24 languages requires bias assessments considering varied demographic contexts. Startups addressing these ethical dimensions build trust with users, customers, and regulators beyond minimum compliance requirements.

The Path Forward

With approximately ten months remaining (at the time of writing) until the 2 August 2026 application date for Annex III high-risk systems, startups targeting the EU should begin compliance activities now to preserve access to the European market. Systematic implementation over 4-6 months enables thorough compliance without compromising product development velocity.
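Working backward from the deadline makes the urgency concrete. The sketch below assumes the 2 August 2026 application date set by Article 113 of the Regulation and the 4-6 month implementation range from this guide. The helper names are hypothetical, and the result leaves no buffer for slippage or external review.

```python
from datetime import date

# General application date under Article 113 of Regulation (EU) 2024/1689.
HIGH_RISK_DEADLINE = date(2026, 8, 2)


def months_before(d, months):
    """Return the date `months` calendar months before `d`."""
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    # Simplification: clamp the day to 28 so the result is valid in every month.
    return date(year, month_index + 1, min(d.day, 28))


def latest_start_window(deadline, min_months=4, max_months=6):
    """Latest start dates for the slow (max_months) and fast (min_months) cases."""
    return months_before(deadline, max_months), months_before(deadline, min_months)


slow_case, fast_case = latest_start_window(HIGH_RISK_DEADLINE)
print(f"Start by {slow_case} (6-month plan) or {fast_case} (4-month plan)")
```

A team that wants a buffer before the deadline can pass a larger `max_months` value.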

For startups, the question is not whether to pursue compliance but how to implement efficiently, building compliance capabilities that strengthen product quality, risk management, and market positioning while satisfying regulatory requirements. This guide provides the technical framework, implementation methodology, and practical resources enabling startups to answer that question confidently.

The European Union’s €18 trillion market represents an opportunity that ambitious AI startups cannot afford to sacrifice through avoidable compliance failures. Strategic compliance implementation transforms regulatory requirements from obstacles into competitive advantages, establishing operational excellence that serves business objectives while meeting regulatory expectations.

References

- International Monetary Fund, “World Economic Outlook Database,” October 2024

https://www.imf.org/en/Publications/WEO/weo-database/2024/October

- Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence, OJ L, 12.7.2024

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

- Regulation (EU) 2024/1689, Article 6 (Classification rules for high-risk AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1652-1-1

- Grand View Research, “AI Governance Market Size Report, 2024-2030,” 2024

https://www.grandviewresearch.com/industry-analysis/ai-governance-market-report

- Regulation (EU) 2024/1689, Article 113 (Entry into force and application)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e8583-1-1

- Regulation (EU) 2024/1689, Article 6 and Article 43 (Conformity assessment)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e3616-1-1

- Regulation (EU) 2024/1689, Article 99 (Penalties)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e8111-1-1

- Regulation (EU) 2024/1689, Article 47 (EU declaration of conformity)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e3892-1-1

- Regulation (EU) 2024/1689, Article 6 and Recital 29

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e40-60-1

- Regulation (EU) 2024/1689, Article 5 (Prohibited artificial intelligence practices)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1580-1-1

- Regulation (EU) 2024/1689, Chapter III, Section 2 (Requirements for high-risk AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

- Regulation (EU) 2024/1689, Article 50 (Transparency obligations for certain AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e4272-1-1

- Regulation (EU) 2024/1689, Article 95 (Codes of conduct for voluntary application)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e7992-1-1

- Regulation (EU) 2024/1689, Article 6(1) and Annex I

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-142-1

- Regulation (EU) 2024/1689, Article 6(2) and Annex III

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-143-1

- Regulation (EU) 2024/1689, Article 6(2)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1652-1-1

- Regulation (EU) 2024/1689, Article 6(3)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1652-1-1

- Regulation (EU) 2024/1689, Annex III, Section 4

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-143-1

- European Commission, “Guidelines on the Classification of High-Risk AI Systems,” 2024

https://digital-strategy.ec.europa.eu/en/library/guidelines-classification-high-risk-ai-systems

- Regulation (EU) 2024/1689, Article 14 (Human oversight)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2575-1-1

- Regulation (EU) 2024/1689, no exemptions based on provider size

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

- Regulation (EU) 2024/1689, Article 11 and Annex IV

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2330-1-1

- Regulation (EU) 2024/1689, Article 9 (Risk management system)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

- Regulation (EU) 2024/1689, Article 50(1)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e4272-1-1

- Regulation (EU) 2024/1689, Annex IV (Technical documentation)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-146-1

- Regulation (EU) 2024/1689, Article 10 (Data and data governance)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2197-1-1

- Regulation (EU) 2024/1689, Article 9(2)-(6)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

- Regulation (EU) 2024/1689, Article 14(4)-(5)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2575-1-1

- Regulation (EU) 2024/1689, Article 17 (Quality management system)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2763-1-1

- Regulation (EU) 2024/1689, Article 43 and Annex VI (Internal control)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e3616-1-1

- Regulation (EU) 2024/1689, Article 72 (Post-market monitoring by providers)