On November 19, 2025, the European Commission published the Digital Omnibus on AI proposal, which, if adopted, would reshape AI compliance roadmaps planned for 2026. If you’re building high-risk AI systems for the EU market, here’s what changed and why it matters:

What Changed:

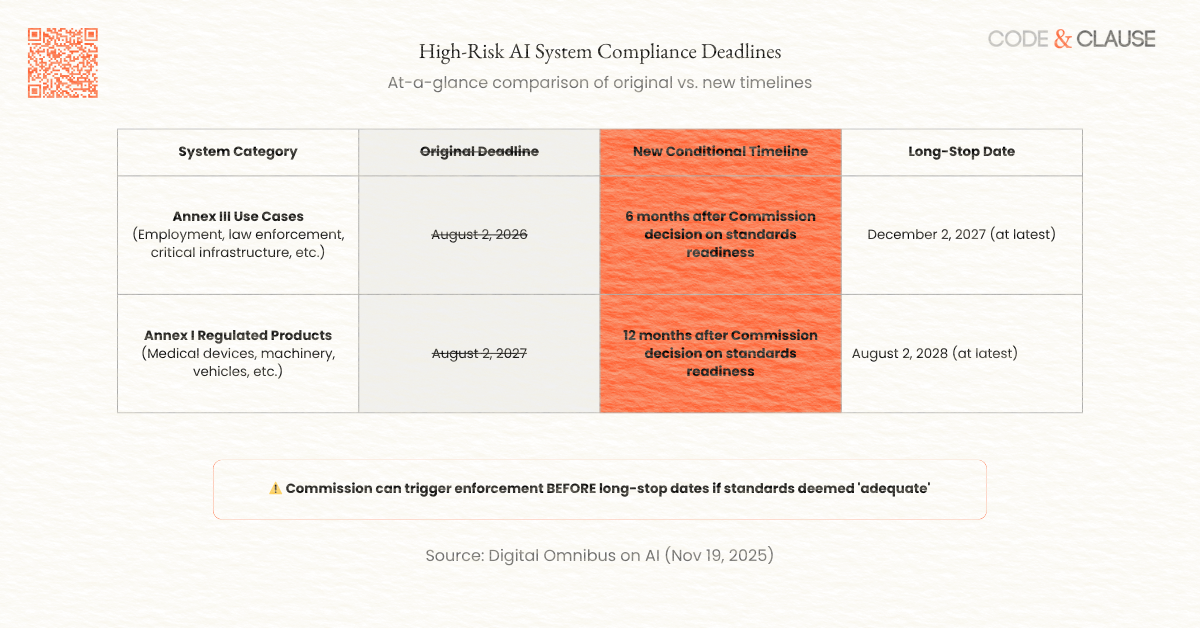

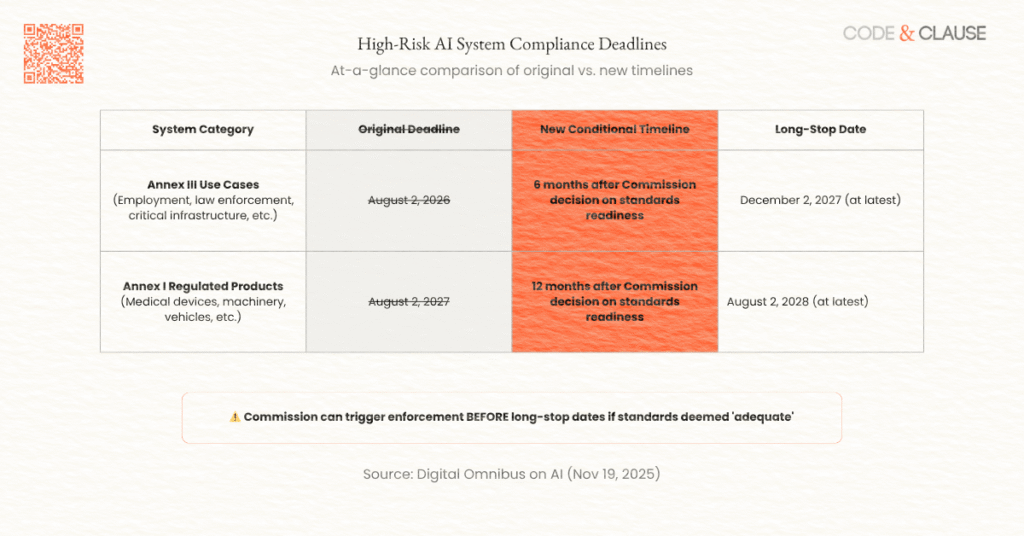

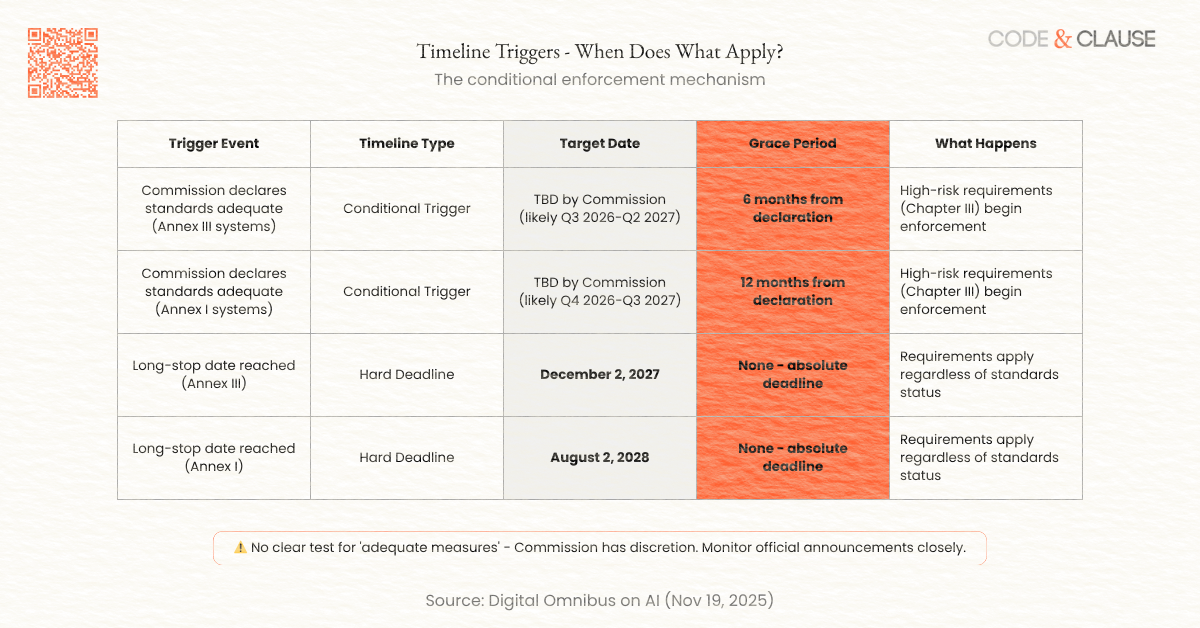

- High-risk AI compliance deadlines are no longer fixed dates—they’re now conditional on the readiness of supporting standards and guidance

- Original deadlines: August 2, 2026 (Annex III systems) and August 2, 2027 (Annex I regulated products)

- New conditional approach: Enforcement begins 6-12 months after the Commission declares standards are “adequate”

- New long-stop dates: December 2, 2027 (Annex III) and August 2, 2028 (Annex I)—even if standards aren’t ready

Why It Matters: The harmonized standards, common specifications, and implementation guidelines necessary for compliance aren’t ready. Forcing organizations to comply with unclear requirements would create massive legal uncertainty, litigation risk, and potentially stifle innovation. The Commission recognized this reality and adjusted the timeline accordingly—but the adjustment creates its own strategic complexities.

The Strategic Implication: The Article 111(2) clarification creates a “compliance cliff”: if you place at least one unit of your high-risk AI system on the EU market before the deadlines apply, identical units can continue to be sold without retrofitting (provided the design remains unchanged). This creates a significant incentive to launch products before compliance kicks in, but also substantial risk if your system doesn’t actually comply.

Who This Affects:

- Every provider of high-risk AI systems (Annex III use cases)

- Manufacturers integrating AI into regulated products (Annex I)

- General-purpose AI model providers (separate timeline considerations)

- Deployers of high-risk AI systems (cascading timeline impacts)

- Startups and SMEs planning EU market entry

Your Immediate Action: Don’t assume a full delay to 2027/2028. The Commission can trigger earlier enforcement if it determines that standards are ready. Continue compliance preparation while monitoring standards development closely.

Table of Contents

- What Is the Digital Omnibus on AI?

- Timeline Changes Explained: What Actually Changed

- Strategic Implications for Different Stakeholders

- Beyond Deadlines: Other Key Changes

- What Hasn’t Changed (And Why That Matters)

- Real-World Implementation Scenarios

- Your Updated 2026-2028 Roadmap

- What to Monitor: Your Compliance Dashboard

- Conclusion: Strategic Positioning in Regulatory Uncertainty

- Frequently Asked Questions

What Is the Digital Omnibus on AI?

The Simplification Drive

The Digital Omnibus on AI (formally: “Proposal for a Regulation on the simplification of the implementation of harmonised rules on artificial intelligence”) is part of the European Commission’s broader Digital Omnibus package announced November 19, 2025.[^1] This package represents the EU’s commitment to reducing administrative burden across its digital regulatory landscape by at least 25% for all businesses and 35% for small and medium enterprises (SMEs) by 2029.

The AI-specific proposal introduces targeted amendments to Regulation (EU) 2024/1689 (the AI Act) aimed at ensuring its practical implementation aligns with the actual readiness of the regulatory ecosystem—including standardization bodies, national authorities, and market surveillance infrastructure.

[^1]: European Commission, “Digital Omnibus on AI Regulation Proposal,” November 19, 2025, https://digital-strategy.ec.europa.eu/en/library/digital-omnibus-ai-regulation-proposal

Why This Matters Now

The AI Act entered into force on August 1, 2024, following publication in the Official Journal on July 12, 2024.[^2]

Under the original Article 113 timeline:

- February 2, 2025: Prohibited AI practices (Chapter II) enforcement begins

- August 2, 2025: Governance rules and GPAI model obligations apply

- August 2, 2026: High-risk AI requirements (Chapter III) for Annex III use cases

- August 2, 2027: High-risk requirements for AI embedded in regulated products (Annex I)

The problem: By Q4 2025, it became evident that the supporting infrastructure for compliance—particularly harmonized technical standards developed through European standardization organizations (CEN, CENELEC, ETSI)—would not be ready by August 2026. Organizations reported needing a minimum of 12 months to achieve compliance with even a single technical standard, based on previous experience with medical device regulations and machinery directives.[^3]

Forcing compliance without clear standards would create:

- Legal uncertainty: What specific technical requirements must systems meet?

- Litigation risk: Non-compliance allegations based on unclear benchmarks

- Innovation friction: Overly cautious interpretations stifling AI development

- Enforcement inconsistency: 27 Member States potentially interpreting requirements differently

[^2]: Regulation (EU) 2024/1689, Official Journal L, 2024/1689, July 12, 2024

[^3]: Morrison Foerster, “EU Digital Omnibus on AI: What Is in It and What Is Not?” December 2, 2025

**LEGISLATIVE STATUS ALERT**

The Digital Omnibus on AI is currently a PROPOSAL (published November 19, 2025). It must be negotiated and approved by the European Parliament and Council before becoming law.

What this means:

- Timelines may shift during negotiations

- Specific provisions could be modified

- Final text may differ from current proposal

- We’ll update this analysis as negotiations progress

Current status: Trilogue negotiations expected Q1-Q2 2026

What’s NOT Changing

Critical clarification: This is a timeline adjustment, not deregulation. The Digital Omnibus does not:

- Reduce the stringency of high-risk AI requirements

- Eliminate any prohibited AI practices

- Weaken fundamental rights protections

- Change the core risk-based approach

- Alter GPAI obligations (already in effect since August 2, 2025)

- Modify prohibited practices enforcement (in effect since February 2, 2025)

The Digital Omnibus adjusts when requirements apply, not what those requirements are. Organizations still face the same comprehensive obligations for risk management, data governance, technical documentation, transparency, human oversight, and robustness once the timelines activate.

Timeline Changes Explained: What Actually Changed

Original vs. New Deadlines: Comprehensive Comparison

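Table 1: High-Risk AI System Compliance Deadlines

| System category | Original deadline | New conditional deadline | Long-stop date |
|---|---|---|---|
| Annex III use cases | August 2, 2026 | 6 months after the Commission decision | December 2, 2027 |
| Annex I regulated products | August 2, 2027 | 12 months after the Commission decision | August 2, 2028 |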

Key Understanding:

The “Commission decision” refers to a formal determination by the European Commission that “adequate measures in support of compliance” exist. These measures include:

- Harmonized standards: Technical specifications developed by CEN, CENELEC, or ETSI and adopted by the Commission

- Common specifications: Alternative technical specifications if standards aren’t available

- Implementation guidelines: Commission guidance documents clarifying obligations

Critical Detail: The proposal does not define a clear test for “adequate.” The Commission has significant discretion to determine when the regulatory ecosystem is ready, creating uncertainty around actual compliance timelines.

The Standards Readiness Problem

What Are Harmonized Standards?

Harmonized standards are technical specifications developed by European standardization bodies that, once published in the Official Journal, create a “presumption of conformity.” If an AI system meets harmonized standards, regulators presume it complies with corresponding AI Act requirements.

Current Status:

As of December 2025, the standardization process is significantly behind schedule:

- Multiple technical committees (TCs) active but standards still in draft phases

- Industry feedback indicates standards won’t be ready for widespread adoption until late 2026 or 2027

- Different standards needed for different high-risk use cases (employment, law enforcement, critical infrastructure, etc.)

- Each standard requires a minimum of 12 months for organizational implementation after publication

Why the Delay Occurred:

- Complexity underestimation: The AI Act’s technical requirements are unprecedented in scope

- Multi-stakeholder coordination: Balancing industry, civil society, academic, and regulatory input

- Resource constraints: Standardization bodies stretched across multiple EU regulatory initiatives

- Novel technical challenges: Many requirements (e.g., bias mitigation, explainability) lack established measurement approaches

Industry Perspective:

MedTech Europe, representing the medical device industry, publicly stated in November 2025 that even the proposed extension to December 2027/August 2028 may be insufficient. They recommended pushing full application to August 2029 to accurately reflect ecosystem readiness.[^4]

[^4]: MedTech Europe response to Digital Omnibus Package, November 20, 2025

Legacy System Grace Periods: The “Compliance Cliff”

The Most Strategic Change in the Digital Omnibus

Article 111(2) of the original AI Act stated that high-risk systems “placed on the market or put into service before” the compliance deadlines would only need to comply “if, as from that date, those systems are subject to significant changes in their designs.”

The Digital Omnibus clarifies and expands this provision with critical implications:

Clarification Added: If at least one unit of a high-risk AI system was lawfully placed on the EU market before the new rules apply, identical units of that system can continue to be placed on the market or put into service without triggering:

- Retrofitting requirements

- Additional conformity assessment procedures

- Technical documentation updates (for unchanged design)

Conditions:

- System lawfully on the market before the deadline

- Design remains unchanged

- Identical to original unit(s)

Exception: High-risk systems intended for use by public authorities must achieve full compliance by August 2, 2030, regardless of when placed on market.
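The conditions and the public-authority exception above can be read as a simple eligibility check. The following is a minimal sketch for illustration, not legal advice: `LegacyUnit`, `qualifies_for_grace`, and `needs_full_compliance` are hypothetical names, and `application_date` stands for whichever deadline ends up applying to the system in question.

```python
from dataclasses import dataclass
from datetime import date

# From the text: systems intended for public authorities must comply by August 2, 2030.
PUBLIC_AUTHORITY_DEADLINE = date(2030, 8, 2)


@dataclass
class LegacyUnit:
    """Hypothetical record describing a unit of a high-risk AI system."""
    first_placed_on_market: date   # when the first unit was lawfully placed on the EU market
    design_unchanged: bool         # no significant design change since then
    identical_to_original: bool    # this unit is identical to the original unit(s)
    for_public_authority: bool = False


def qualifies_for_grace(unit: LegacyUnit, application_date: date) -> bool:
    """All three conditions listed above must hold."""
    return (
        unit.first_placed_on_market < application_date
        and unit.design_unchanged
        and unit.identical_to_original
    )


def needs_full_compliance(unit: LegacyUnit, application_date: date, today: date) -> bool:
    """Return True if the unit must meet the high-risk requirements as of `today`."""
    if today < application_date:
        return False  # the requirements do not apply yet
    if unit.for_public_authority and today >= PUBLIC_AUTHORITY_DEADLINE:
        return True   # the exception overrides the grace period
    return not qualifies_for_grace(unit, application_date)
```

For example, an unchanged unit first placed on the market in mid-2027 would not need retrofitting after a December 2, 2027 application date, while the same unit with a changed design would.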

Strategic Implication: The “Compliance Cliff”

This creates a significant market dynamic. Organizations face a choice between two options:

Option A: Launch Legacy System

- Place system on market in current form before deadlines

- Lock in ability to continue selling identical units indefinitely

- Avoid costly retrofitting or re-certification

- Risk: Current implementation may not actually comply when standards clarify requirements

Option B: Wait for Standards Clarity

- Delay product launch until standards and guidance available

- Build compliance from clear requirements

- Reduce risk of non-compliant design

- Risk: Competitors who chose Option A capture market share during your delay

Market Prediction: We anticipate a surge in product launches in late 2026 and early 2027 as organizations rush to place “legacy” systems on the market before the December 2, 2027 long-stop date for Annex III systems. This compliance cliff will create significant competitive pressure.

Generative AI Watermarking Extension

Article 50(2) of the AI Act requires providers of AI systems generating synthetic audio, image, video, or text content to ensure outputs are marked in machine-readable format and detectable as artificially generated or manipulated.

Original timeline: Applies to all systems from August 2, 2026

Digital Omnibus change: Providers of generative AI systems placed on the market before August 2, 2026 receive a six-month grace period (until February 2, 2027) to implement technical marking and detectability requirements.

Strategic note: Systems placed on market after August 2, 2026 do NOT receive this grace period—they must comply immediately upon market entry.
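The grace-period rule reduces to a single date comparison. The sketch below assumes a hypothetical helper name, `marking_deadline`, and treats a system placed on the market exactly on August 2, 2026 as not eligible for the grace period; the text above only covers "before" and "after" that date, so the boundary handling is an assumption.

```python
from datetime import date

ARTICLE_50_2_START = date(2026, 8, 2)   # original application date from the text
GRACE_PERIOD_END = date(2027, 2, 2)     # six-month grace period from the text


def marking_deadline(placed_on_market: date) -> date:
    """Date by which marking and detectability requirements must be met."""
    if placed_on_market < ARTICLE_50_2_START:
        return GRACE_PERIOD_END
    # No grace period: comply on market entry (boundary date handled as an assumption).
    return placed_on_market
```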

What Triggers Early Enforcement?

The Commission retains authority to bring forward the application of high-risk requirements if it determines “adequate measures in support of compliance” exist—even before the long-stop dates.

Monitoring Indicators:

- CEN-CENELEC standardization committee progress reports

- Commission public consultations on draft standards

- AI Office statements on implementation readiness

- National authority capacity assessments

Risk: Organizations could face shortened preparation timelines if the Commission triggers early enforcement. A Commission decision in Q3 2026, for example, would mean Annex III requirements apply by Q1 2027 (6 months later)—well before the December 2027 long-stop.

Recommendation: Plan for multiple timeline scenarios. Don’t assume a full delay to the long-stop dates.
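One way to plan for several scenarios is to compute the application date for each assumed Commission decision date. The sketch below is illustrative: `application_date` and `add_months` are hypothetical helpers, the 6-month lead time for Annex III follows the example above, and the 12-month lead time for Annex I is an assumption taken from the upper end of the "6-12 months" range. The final text may differ after negotiations.

```python
import calendar
from datetime import date
from typing import Optional

LONG_STOP = {"annex_iii": date(2027, 12, 2), "annex_i": date(2028, 8, 2)}
LEAD_MONTHS = {"annex_iii": 6, "annex_i": 12}  # Annex I value is an assumption


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    index = d.month - 1 + months
    year = d.year + index // 12
    month = index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def application_date(category: str, decision: Optional[date] = None) -> date:
    """Earlier of (decision date + lead time) and the long-stop date."""
    long_stop = LONG_STOP[category]
    if decision is None:
        return long_stop  # no Commission decision: the long-stop date applies
    return min(add_months(decision, LEAD_MONTHS[category]), long_stop)


# Three planning scenarios for an Annex III system.
scenarios = {
    "early trigger": date(2026, 9, 1),
    "late trigger": date(2027, 9, 1),
    "no decision": None,
}
for name, decision in scenarios.items():
    print(name, application_date("annex_iii", decision))
```

A decision date in Q3 2026 yields an application date in Q1 2027, matching the example above, while a late or absent decision falls back to the long-stop date.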

Strategic Implications for Different Stakeholders

For AI Product Managers

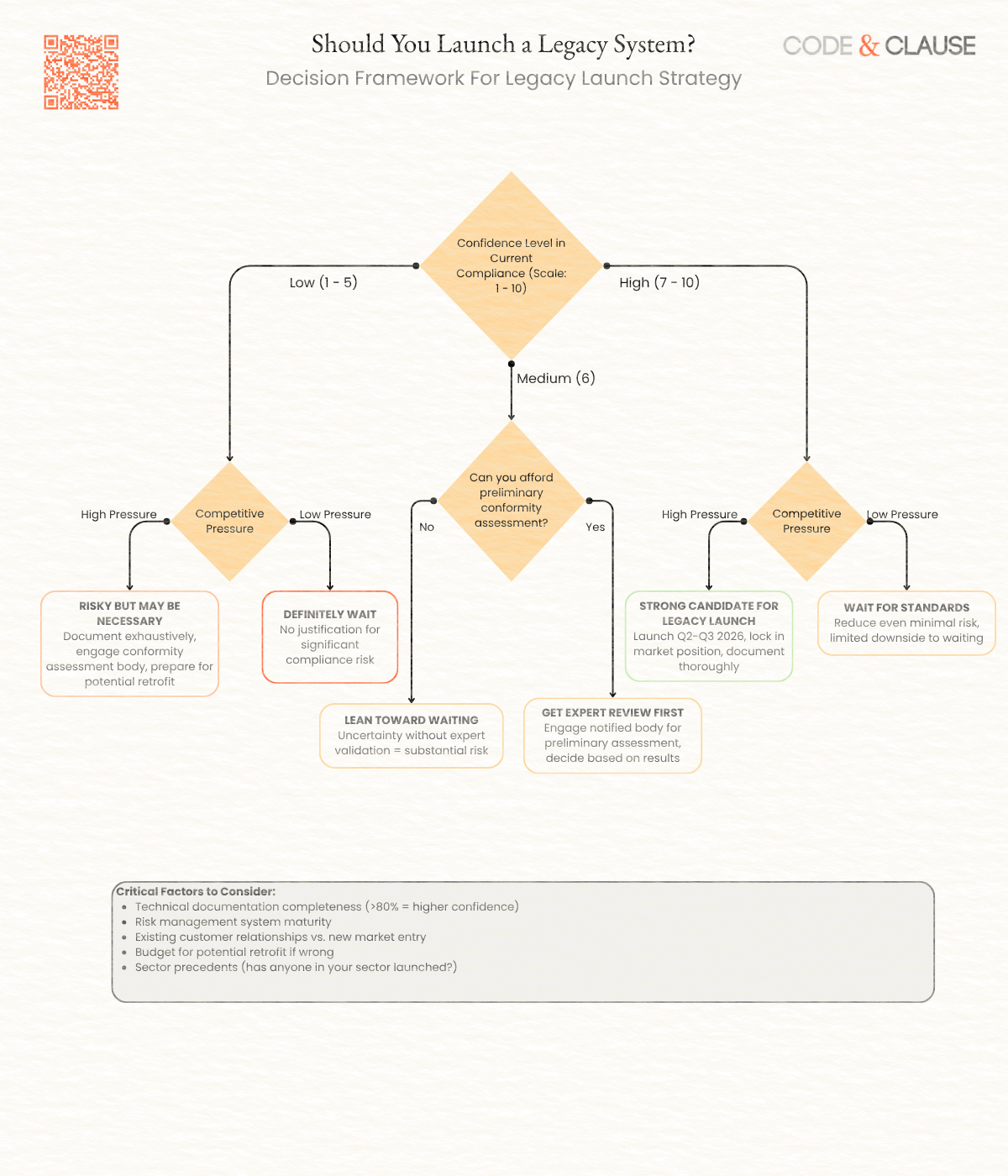

Immediate Decision Framework:

AI Product Managers face a complex strategic decision that will define product roadmaps for the next 24 months. The Digital Omnibus transforms compliance from a fixed-date project into a strategic business decision.

Key Questions to Ask:

- Compliance Confidence Assessment:

  - How confident are you that your current AI system design would pass conformity assessment under likely final standards?

  - Do you have comprehensive technical documentation already prepared?

  - Have you conducted internal Article 9 risk assessments with documented mitigations?

  - Is your data governance framework aligned with Article 10 requirements?

- Competitive Landscape Analysis:

  - Are competitors likely to pursue legacy system launches?

  - What market share could you lose during a 12-18 month delay waiting for standards?

  - Do first-mover advantages outweigh compliance risk in your sector?

- Risk Tolerance Calibration:

  - What are the penalties if a “legacy” system is later deemed non-compliant?

  - Can your organization absorb potential enforcement actions and litigation?

  - How risk-averse is your executive leadership and board?

Strategic Options Matrix:

| Scenario | Recommendation | Rationale |
|---|---|---|
| High compliance confidence + High competitive pressure | Consider legacy launch strategy | Lock in market position while risk is manageable |
| High compliance confidence + Low competitive pressure | Wait for standards | Reduce even minimal risk with limited downside |
| Low compliance confidence + High competitive pressure | Risky but may be necessary; document thoroughly | Competitive necessity may justify risk |
| Low compliance confidence + Low competitive pressure | Definitely wait | No justification for significant compliance risk |
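For teams that want to track this decision across several products, the matrix can be encoded as a small lookup. This is only a sketch: the `recommend` function is a hypothetical helper, and deciding whether confidence or pressure counts as "high" remains a judgment call the code does not make for you.

```python
# Keys are (high_compliance_confidence, high_competitive_pressure).
STRATEGY_MATRIX = {
    (True, True): "Consider legacy launch strategy",
    (True, False): "Wait for standards",
    (False, True): "Risky but may be necessary; document thoroughly",
    (False, False): "Definitely wait",
}


def recommend(high_confidence: bool, high_pressure: bool) -> str:
    """Look up the recommendation for one product from the matrix."""
    return STRATEGY_MATRIX[(high_confidence, high_pressure)]


print(recommend(high_confidence=True, high_pressure=False))
```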

Product Roadmap Adjustments:

- Q1 2026: Final decision on legacy launch vs. wait strategy

- Q2-Q3 2026: If pursuing legacy launch, accelerate to market before August 2026 for maximum grace period

- Q4 2026-Q1 2027: Monitor Commission standards declarations closely

- 2027: Implement whichever timeline materializes (conditional trigger or long-stop)

For Engineering Directors

Technical Implementation Impact:

The Digital Omnibus provides extended runway for technical compliance work—but creates uncertainty around exactly when that work must be complete.

Resource Allocation Strategy:

DON’T:

- Stop compliance infrastructure development

- Reallocate compliance engineering resources to other projects

- Assume full delay to 2027/2028 without contingency

DO:

- Continue building risk management systems per Article 9

- Develop comprehensive technical documentation per Article 11

- Implement data governance frameworks per Article 10

- Build modular compliance components that can accelerate final implementation

- Plan for multiple timeline scenarios in sprint planning

Testing Strategy Considerations:

The Digital Omnibus expands Article 60 provisions for real-world testing of high-risk AI systems. Engineering teams should:

- Evaluate regulatory sandbox participation (available from 2026)

- Design controlled testing protocols that generate compliance evidence

- Document testing results comprehensively for future conformity assessment

- Consider pre-market testing as a risk reduction strategy

Technical Debt Management:

Extended timelines create temptation to deprioritize compliance work. Resist this. Standards development is ongoing and could materialize quickly. Organizations maintaining compliance momentum will be positioned to respond rapidly when the Commission triggers enforcement.

Vendor and Third-Party Coordination:

If your AI systems incorporate third-party components (libraries, APIs, GPAI models), ensure vendors are also adapting to new timelines. Update procurement contracts with:

- Compliance warranties aligned with actual enforcement dates

- Representations about standards conformance

- Indemnification provisions for non-compliance

For Startups and SMEs

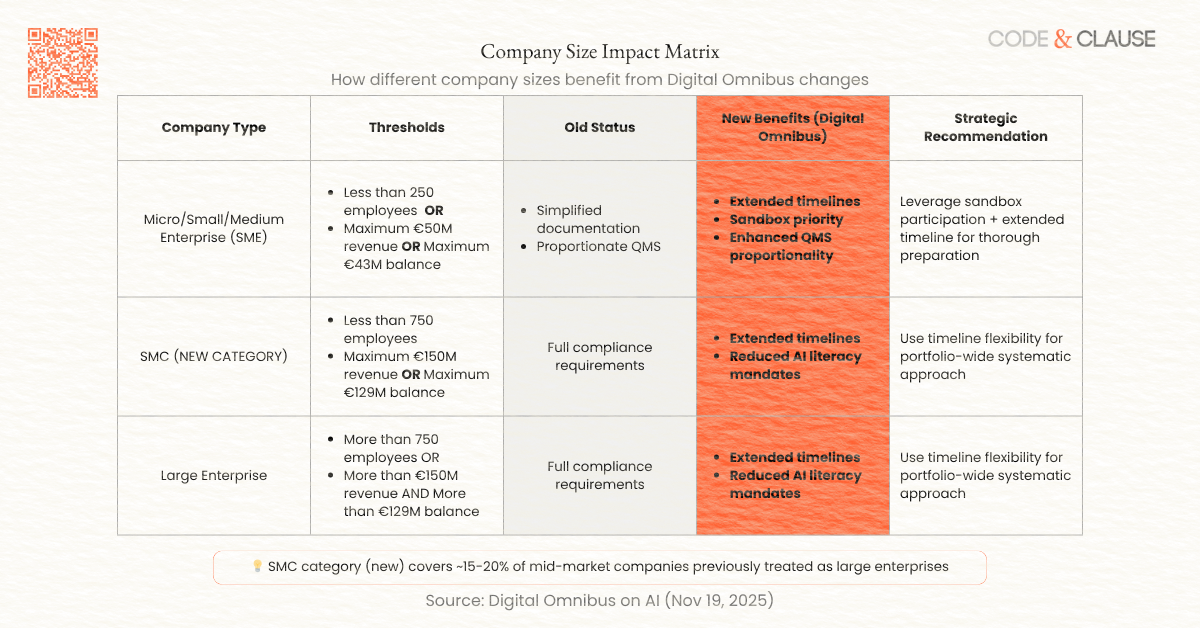

The SMC Opportunity:

The Digital Omnibus introduces “Small Mid-Cap” (SMC) as a new category between SME and large enterprise, with specific compliance benefits:

SMC Definition:

- <750 employees

- ≤€150 million net turnover OR ≤€129 million balance sheet total
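The thresholds above translate into a short check. In this sketch, `is_small_mid_cap` is a hypothetical helper, and combining the headcount test with the financial test using AND is an assumed reading of the definition. The function does not separate SMEs from SMCs; a company below the SME thresholds would also pass it.

```python
def is_small_mid_cap(employees: int, net_turnover_eur: float, balance_sheet_eur: float) -> bool:
    """Check the SMC thresholds quoted above (assumed: headcount AND one financial test)."""
    meets_headcount = employees < 750
    meets_financials = net_turnover_eur <= 150_000_000 or balance_sheet_eur <= 129_000_000
    return meets_headcount and meets_financials


# Example: 400 employees, EUR 60M turnover, EUR 200M balance sheet total.
print(is_small_mid_cap(400, 60_000_000, 200_000_000))
```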

New Benefits for SMCs:

- Simplified Technical Documentation: Reduced documentation requirements when demonstrating high-risk AI compliance (similar to existing SME benefits)

- Proportionate Quality Management Systems: QMS implementation proportional to company size rather than one-size-fits-all approach

- Penalty Caps: Member States must consider SMC economic viability when calculating and imposing penalties for non-compliance

Strategic Advantages:

Extended Timeline as Runway:

- Startups typically have limited compliance budgets and specialized legal resources

- Additional 12-18 months provides time to raise capital specifically for compliance infrastructure

- Can approach investors with clear regulatory timeline and compliance cost projections

Sandbox Access:

- New EU-level regulatory sandbox provisions specifically designed for SMEs and SMCs

- Controlled testing environment to validate compliance approaches before full market launch

- Reduced risk of costly compliance mistakes

Competitive Positioning:

- While large enterprises have compliance resources, they also have bureaucratic inertia

- Agile startups can potentially move faster once standards clarify

- First-mover advantages may matter less if standards aren’t clear yet

Startup-Specific Risks:

Cash Runway Considerations: Extended timeline means longer period before revenue from EU market. Model this in financial projections.

Investor Expectations: VCs evaluating EU market entry should understand compliance timeline uncertainty. Build scenario analysis into pitch materials.

Acquirer Due Diligence: If planning exit, compliance readiness will be scrutinized. Maintain thorough documentation even if enforcement is delayed.

For Enterprise AI Teams

Governance and Organizational Implications:

Large enterprises typically have multiple AI systems across various use cases—meaning different systems may face different timelines (Annex III vs. Annex I), different Member State authorities, and different conformity assessment pathways.

Board-Level Communication:

Your board and C-suite need updated timelines that acknowledge uncertainty:

Effective Board Briefing Elements:

- Scenario Planning: Present three timeline scenarios (early trigger, mid-range, long-stop) with probability assessments

- Budget Flexibility: Request compliance budget that can flex based on actual timeline materialization

- Risk Assessment: Quantify financial risk of different strategic paths (legacy launch vs. wait)

- Competitive Intelligence: Share what competitors are signaling about their strategies

- Regulatory Monitoring: Commit to quarterly updates as Commission provides more clarity

Cross-Functional Coordination:

AI Act compliance requires coordination across:

- Engineering: Technical implementation and documentation

- Legal: Regulatory interpretation and risk assessment

- Product: Market timing and feature prioritization

- Data Science: Bias mitigation and model governance

- Operations: Post-market monitoring and incident response

- Procurement: Vendor compliance verification

Timeline uncertainty makes this coordination MORE important, not less. Establish a clear governance structure with empowered decision-making authority.

Multi-Jurisdictional Complexity:

Global enterprises must navigate:

- EU AI Act (with new uncertain timelines)

- US state AI laws (Colorado, California progressing independently)

- UK AI regulation (diverging from EU post-Brexit)

- Canada’s AIDA (Artificial Intelligence and Data Act)

- Singapore’s Model AI Governance Framework

The Digital Omnibus does NOT simplify this complexity—it’s EU-specific breathing room while other jurisdictions proceed independently.

Beyond Deadlines: Other Key Digital Omnibus Changes

Expanded Bias Mitigation Permissions

Original AI Act Approach:

Article 10(5) allowed providers of high-risk AI systems to process special categories of personal data (racial/ethnic origin, health data, biometric data, etc.) solely for detecting and correcting bias in training datasets.

Digital Omnibus Expansion:

The new Article 4a allows all AI providers and deployers—regardless of risk level—to process special category personal data for bias detection and correction, subject to appropriate safeguards.

Conditions:

- Must implement appropriate technical and organizational safeguards

- Must comply with data minimization principles

- Must ensure proportionality

- For high-risk systems not involving model training, derogation limited to dataset testing

Practical Impact:

This addresses a significant friction point: Organizations building AI systems often couldn’t test for demographic bias because accessing the necessary demographic data violated GDPR restrictions. The expansion enables:

- Broader fairness testing across all AI systems

- Proactive bias mitigation before systems become high-risk

- More comprehensive dataset quality assurance

GDPR Coordination:

The Digital Omnibus package also includes parallel GDPR amendments clarifying that “legitimate interests” may serve as lawful basis for AI development and training (under specific conditions). These coordinated changes aim to resolve the tension between AI innovation and data protection.

AI Literacy Obligations Shifted

Original AI Act Requirement:

Article 4 required providers and deployers to ensure “a sufficient level of AI literacy” of their staff and persons dealing with AI system operation and use.

Digital Omnibus Change:

This obligation is removed from providers and deployers and transformed into an obligation of the Commission and Member States to encourage (rather than mandate) AI literacy measures.

Practical Impact:

Financial: Organizations can reallocate planned training budgets to technical compliance infrastructure.

Operational: While no longer legally mandated, AI literacy remains operationally critical for:

- Effective human oversight (Article 14 requirements)

- Proper AI system operation and monitoring

- Cross-functional compliance coordination

- Risk management system effectiveness

Recommendation: Continue AI literacy initiatives as an operational best practice, even though they are no longer a legal requirement. Document training efforts as evidence of responsible AI governance.

Registration Requirements Eliminated for Certain Low-Risk Systems

Original AI Act Approach:

Article 6(4) required providers demonstrating an Annex III use case is “not high-risk” under Article 6(3) (because it doesn’t pose significant risk to health, safety, or fundamental rights) to still register that system in the EU database.

Digital Omnibus Change:

Registration requirement deleted for Article 6(3) out-of-scope determinations.

Still Required:

- Document the self-assessment

- Make assessment available to regulators upon request

- Maintain evidence of why system isn’t high-risk

Impact:

This removes administrative burden for “edge case” systems that technically fall within Annex III use cases but don’t actually pose high risks. Organizations no longer navigate database registration for systems they’ve determined don’t warrant it—but must thoroughly document the rationale.

Expanded Regulatory Sandboxes and Real-World Testing

New EU-Level Sandbox:

The Digital Omnibus introduces an EU-level regulatory sandbox alongside existing national sandboxes, creating a dual-layer structure.

Purpose:

- Allow testing of high-impact AI solutions under regulatory guidance

- Particularly beneficial for SMEs and SMCs

- Enable controlled innovation in real-world conditions for limited periods

- Provide regulatory feedback before full market launch

Broadened Article 60 Real-World Testing:

Original Article 60 permitted pre-market real-world testing of certain high-risk AI systems. The Digital Omnibus expands this to include:

- AI systems covered by Annex I product regulation (machinery, toys, radio equipment, vehicles, medical devices)

- Controlled live trials before full certification

- Subject to safeguards and oversight

Strategic Value:

Sandbox participation offers:

- Risk Reduction: Test compliance approaches with regulatory feedback

- Evidence Generation: Create documentation of real-world performance for conformity assessment

- Competitive Intelligence: Understand how authorities interpret requirements

- Market Validation: Prove business model viability while building compliance

Timeline: Sandboxes operational from 2026. Organizations should evaluate participation as part of 2026 compliance strategy.

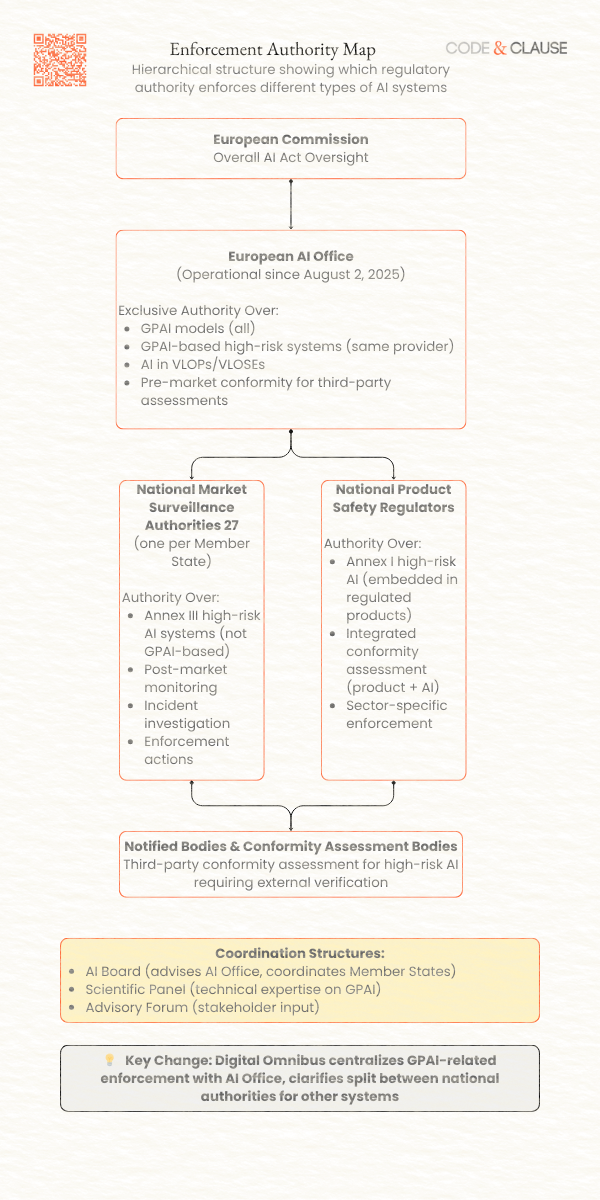

Centralized AI Office Enforcement

Enforcement Jurisdiction Clarification:

The Digital Omnibus grants the European Commission’s AI Office exclusive supervisory authority over:

- GPAI-based high-risk systems: Where both the general-purpose AI model and the high-risk AI system are developed by the same provider

- VLOP/VLOSE-integrated systems: AI systems that constitute or are integrated into Very Large Online Platforms (VLOPs) or Very Large Online Search Engines (VLOSEs) under the Digital Services Act

For all other high-risk systems:

- Annex I regulated products: National product safety regulators (machinery authorities, medical device notified bodies, etc.)

- Annex III use cases: National market surveillance authorities
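The jurisdiction split described above can be sketched as a routing function. The names here (`Authority`, `supervising_authority`, and the boolean flags) are hypothetical and only mirror the categories in the text. The real determination depends on the final legal wording.

```python
from enum import Enum


class Authority(Enum):
    AI_OFFICE = "European Commission AI Office"
    PRODUCT_SAFETY = "National product safety regulator"
    MARKET_SURVEILLANCE = "National market surveillance authority"


def supervising_authority(
    annex: str,
    gpai_same_provider: bool = False,
    vlop_vlose_integrated: bool = False,
) -> Authority:
    """Route a high-risk system to its supervisor under the proposal as summarized above."""
    # The AI Office takes precedence in the two cases listed in the text.
    if gpai_same_provider or vlop_vlose_integrated:
        return Authority.AI_OFFICE
    if annex == "I":
        return Authority.PRODUCT_SAFETY
    if annex == "III":
        return Authority.MARKET_SURVEILLANCE
    raise ValueError(f"Unknown annex: {annex!r}")
```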

Practical Impact:

Clarity: Organizations now know definitively which authority they’ll interact with for conformity assessment and market surveillance.

Consistency: Centralized AI Office oversight for GPAI-based systems should create more uniform interpretation across Member States.

Coordination: Enhanced cooperation requirements mean regulators investigating fundamental rights violations request information from market surveillance authorities rather than directly from businesses (reducing duplicate requests).

Pre-Market Conformity Assessment: Providers of high-risk systems under AI Office jurisdiction that require third-party conformity assessment may face pre-market testing by the Commission—a significant procedural change.

What Hasn’t Changed (And Why That Matters)

Core Requirements Remain Stringent

The Digital Omnibus adjusts when compliance is required, not what compliance entails. High-risk AI systems still must demonstrate:

Article 9 – Risk Management:

- Continuous, iterative risk identification and mitigation throughout system lifecycle

- Documented risk assessment addressing reasonably foreseeable risks

- Testing protocols demonstrating effective risk mitigation

- Regular review and updates as new risks emerge

Article 10 – Data Governance:

- Training, validation, and testing datasets subject to data governance and management practices

- Examination for possible biases and appropriate mitigation measures

- Relevant design choices, data collection processes, and data preparation

- Consideration of characteristics or elements particular to specific geographic, behavioral, or functional setting

Article 11 – Technical Documentation:

- Comprehensive documentation per Annex IV requirements

- Description of system, intended purpose, risk management system

- Detailed design specifications, datasets used, testing and validation

- Updated throughout system lifecycle

Article 12 – Record-Keeping:

- Automatic logging of events (automatically generated logs)

- Traceability of system operation throughout its lifecycle

- Appropriate logging capabilities considering intended purpose and risk level
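As a rough illustration of automatic event logging, the sketch below writes one JSON record per event. Article 12 does not prescribe this format; the `log_event` function and its field names are assumptions chosen for the example, and a production system would also need retention, access control, and tamper protection.

```python
import io
import json
from datetime import datetime, timezone


def log_event(stream, system_id: str, event_type: str, details: dict) -> dict:
    """Append one timestamped, machine-readable record to `stream` (JSON Lines)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "event_type": event_type,
        "details": details,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")
    return record


# Usage: an in-memory buffer stands in for a durable log store.
buffer = io.StringIO()
log_event(buffer, "cv-screener", "prediction", {"input_ref": "abc123", "score": 0.8})
log_event(buffer, "cv-screener", "human_override", {"input_ref": "abc123"})
```

Writing one self-contained record per line keeps the log easy to append to and to replay when reconstructing how the system operated.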

Article 13 – Transparency:

- Clear information for deployers on system characteristics, capabilities, limitations

- Instructions for use enabling proper implementation

- Foreseeable unintended outcomes and sources of risks to health, safety, fundamental rights

Article 14 – Human Oversight:

- Appropriate human oversight measures designed into system

- Prevent or minimize risks arising from AI system use

- Ability to ignore, override, or reverse AI system output

- Ensure humans remain informed and can make informed decisions

Article 15 – Accuracy, Robustness, Cybersecurity:

- Appropriate levels of accuracy, robustness, and cybersecurity

- Performance consistent throughout lifecycle

- Resilience against errors, faults, or inconsistencies

- Technical robustness appropriate to intended purpose and risk level

None of these requirements have been weakened or simplified. Organizations waiting for standards still need systems capable of meeting these obligations once standards clarify specific technical implementations.

Already-Enforced Provisions Continue

Prohibited AI Practices (Chapter II) – In Effect Since February 2, 2025:

The following remain prohibited regardless of Digital Omnibus changes:

- Manipulative or deceptive AI that exploits vulnerabilities

- Social scoring by public authorities

- Real-time remote biometric identification for law enforcement (with narrow exceptions)

- Biometric categorization inferring sensitive attributes

- Emotion recognition in workplaces and educational institutions

- Untargeted scraping for facial recognition databases

- Predictive policing based solely on profiling

- AI-based risk assessment predicting criminal offense likelihood based on protected characteristics

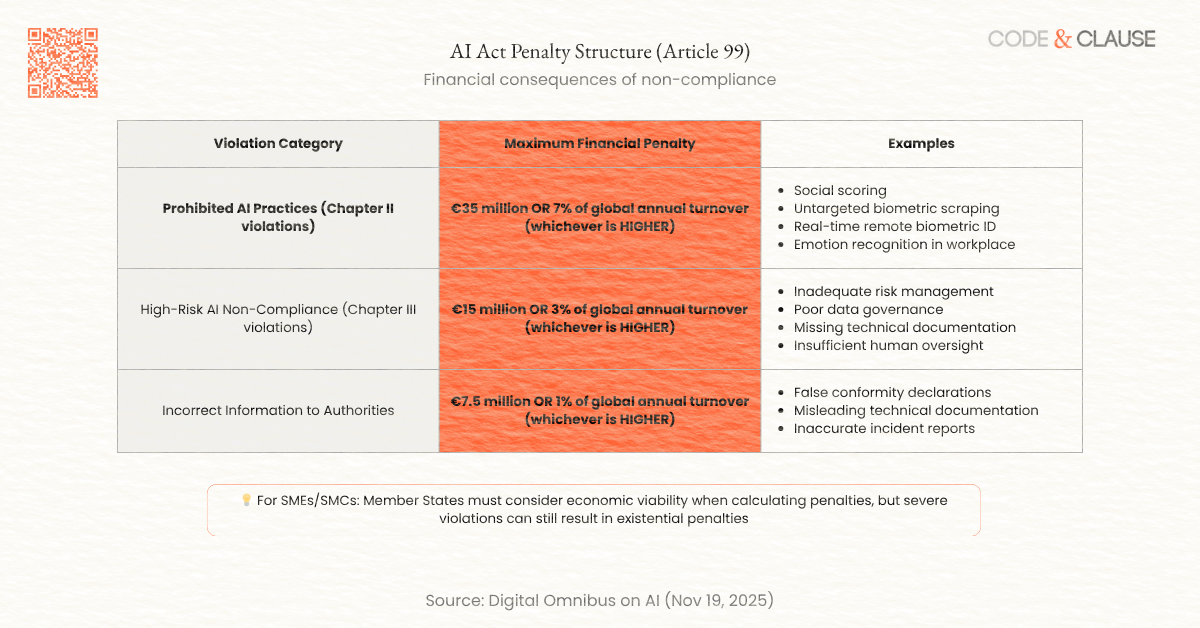

Enforcement: National authorities are actively monitoring these prohibitions. Non-compliance faces maximum penalties of €35 million or 7% of global annual turnover, whichever is higher.

GPAI Obligations (Chapter V) – In Effect Since August 2, 2025:

Providers of general-purpose AI models must comply with:

- Transparency obligations (Article 53)

- Copyright and data protection compliance (Article 53)

- Technical documentation requirements (Article 53, Annex XI)

- For systemic risk models: Risk assessment and mitigation (Article 55)

- Incident reporting obligations

The Digital Omnibus does not alter GPAI timelines or requirements. Organizations providing foundation models are already operating under these obligations.

Penalties Remain Severe

Financial Penalties Under Article 99:

Whichever Is Higher: Penalties calculated as the greater of the fixed amount or percentage of turnover.

For SMEs and SMCs: Member States must consider economic viability when calculating penalties, but severe violations can still result in existential financial penalties.
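The "whichever is higher" rule is simple arithmetic. In the sketch below, the €35 million / 7% tier is the one quoted earlier for prohibited practices; the other two tiers are taken from Article 99 of Regulation (EU) 2024/1689 rather than from this article, so verify them against the legal text. `max_penalty_eur` is a hypothetical helper that computes the ceiling only and does not model the adjustments for SMEs and SMCs.

```python
# (fixed ceiling in EUR, percentage of worldwide annual turnover)
PENALTY_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),       # from Article 99, not from this article
    "incorrect_information": (7_500_000, 1),   # from Article 99, not from this article
}


def max_penalty_eur(violation: str, global_turnover_eur: int) -> int:
    """Upper bound of the fine: the greater of the fixed amount and the turnover share."""
    fixed, percent = PENALTY_TIERS[violation]
    return max(fixed, global_turnover_eur * percent // 100)


# A company with EUR 1 billion turnover: 7% (EUR 70M) exceeds the EUR 35M fixed amount.
print(max_penalty_eur("prohibited_practice", 1_000_000_000))
```

For smaller companies the fixed amount dominates: with €60 million in turnover, 7% is €4.2 million, so the ceiling stays at €35 million.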

Enforcement Reality Check:

While high-risk requirements are delayed, enforcement infrastructure is being built now:

- National market surveillance authorities designated by Member States (Article 70)

- AI Office operational since August 2, 2025

- Conformity assessment bodies being notified and authorized

- Cross-border enforcement cooperation mechanisms established

When compliance deadlines activate—whether through conditional trigger or long-stop dates—enforcement capacity will be ready.

International Compliance Still Necessary

The Digital Omnibus is EU-specific. Other jurisdictions are proceeding independently:

United States:

- Colorado AI Act (passed 2024, enforcement 2026)

- California AI regulations (various bills progressing)

- Federal AI governance proposals (uncertain under new administration)

- State-by-state patchwork creating compliance complexity

United Kingdom:

- Post-Brexit divergence from EU approach

- Principles-based framework rather than prescriptive rules

- Sector-specific regulators implementing AI guidance

- Pro-innovation posture but enforcement still developing

Canada:

- AIDA (Artificial Intelligence and Data Act) progressing through Parliament

- Risk-based approach similar to EU but with Canadian specifics

- Implementation timeline uncertain but moving forward

Singapore:

- Model AI Governance Framework (voluntary but influential)

- Industry-led standards development

- Regulatory pragmatism attracting AI investment

Multi-Jurisdictional Strategy:

Global AI providers cannot simply optimize for EU timelines. You need compliance strategies addressing:

- Baseline technical requirements common across jurisdictions

- Jurisdiction-specific variations and enhancements

- Documentation and evidence collection applicable to multiple regimes

- Governance structures coordinating multi-jurisdictional compliance

The Digital Omnibus provides EU breathing room, but the global compliance challenge remains complex and urgent.

Real-World Implementation Scenarios

The following scenarios illustrate how different organizations should approach the Digital Omnibus timeline changes and the strategic decisions those changes force.

Scenario 1: The Mid-Market HR Tech Company

Company Profile:

- 400 employees, €60M annual revenue

- Primary product: AI-powered CV screening and candidate ranking system

- Classification: High-risk under Annex III (employment, workers management, access to self-employment)

- Geographic focus: 60% EU customers, 40% rest of world

Current State (December 2025):

- Original compliance target: August 2, 2026

- Invested €1.2M in compliance infrastructure (risk management system, documentation, testing)

- Internal assessment: 75% confident system would pass conformity assessment

- Competitive pressure: Two main competitors in European market

Digital Omnibus Impact:

Option A: Legacy Launch Strategy

- Accelerate product roadmap to place system on market before June 2026

- Lock in ability to sell current version through December 2027 minimum

- Continue enhancements as separate product versions after standards clarify

- Investment: €300K additional to accelerate launch

- Risk: If current implementation fails future conformity assessment, significant re-engineering required

Option B: Wait for Standards

- Maintain August 2026 target but don’t launch until standards available (likely Q2 2027)

- Build compliance from clear technical specifications

- Reduce risk of non-compliant design requiring retrofit

- Investment: €100K ongoing maintenance of readiness

- Risk: Competitors choosing Option A capture market share during 9-12 month delay

Code & Clause Recommendation:

Given 75% compliance confidence and moderate competitive pressure:

- Primary path: Pursue Option A (legacy launch) with comprehensive documentation

- Risk mitigation: Engage independent conformity assessment body for pre-market review

- Contingency: If pre-market review identifies significant gaps, pivot to Option B

- Timeline:

- Q1 2026: Independent pre-market assessment

- Q2 2026: Launch decision based on assessment results

- Q3 2026: Launch if pursuing Option A, or continue development if Option B

Scenario 2: The Medical Device Manufacturer

Company Profile:

- 2,500 employees, €450M annual revenue

- Primary product: AI-enhanced diagnostic imaging system (radiology)

- Classification: High-risk under both Annex I (medical device – MDR) and Annex III (healthcare diagnosis)

- Geographic focus: Global market, 40% EU sales

Current State:

- Already complies with Medical Device Regulation (EU 2017/745)

- AI Act creates additional layer of requirements

- Original AI Act timeline: August 2, 2027 (Annex I extended deadline)

- Invested €3.5M in AI Act preparation (on top of existing MDR compliance)

Digital Omnibus Impact:

Key Understanding: The long-stop date moves to August 2, 2028 (a 12-month extension for Annex I products)

Complexity: Dual regulatory pathways:

- MDR requirements: Unchanged, existing enforcement continues

- AI Act requirements: New timeline flexibility but ultimate long-stop by August 2028

Strategic Considerations:

- Conformity Assessment Coordination:

- The Digital Omnibus clarifies that when a system is subject to both product regulation (MDR) and the AI Act (Annex III), the MDR conformity assessment takes precedence

- Notified bodies conducting MDR assessment will also verify AI Act compliance

- Reduces duplicative conformity assessment procedures

- Legacy System Provision:

- Less strategically valuable for medical devices given rigorous MDR requirements already in place

- Incremental changes to diagnostic algorithms may trigger MDR “significant change” requirements anyway

- Legacy system provision most valuable for entirely new products, not iterative improvements

- Timeline Management:

- Continue building AI Act compliance in parallel with MDR updates

- Leverage extended timeline to integrate AI Act requirements into next MDR product certification cycle

- Avoid separate AI Act conformity assessment if possible by bundling into MDR process

Code & Clause Recommendation:

- Strategy: Integrate AI Act compliance into next MDR certification cycle

- Timeline: Plan for combined MDR/AI Act conformity assessment by Q2 2028

- Risk Management: Don’t treat AI Act as separate compliance track; embed into existing medical device quality management system

- Advantage: Extended timeline aligns well with typical medical device development and certification cycles (2-3 years)

Scenario 3: The AI Startup (<50 employees)

Company Profile:

- 35 employees (25 technical, 10 business/operations)

- Pre-Series A funding (€3M raised)

- Product: Conversational AI chatbot for recruitment screening

- Potential classification: High-risk under Annex III (employment decisions)

- Target market: Initially UK and US, EU expansion planned for 2026

Current State:

- Limited compliance budget (€150K total allocated)

- No dedicated legal/compliance team (using external consultants)

- High technical capability but limited regulatory expertise

- Original EU entry plan: Q3 2026 (aligned with initial high-risk deadline)

Digital Omnibus Impact:

Primary Benefit: Extended Runway

The conditional timeline provides 12-18 additional months to:

- Raise Series A with clear compliance cost projections

- Build internal compliance capability gradually

- Leverage SME-specific provisions and sandbox opportunities

- Learn from early enforcement patterns before EU market entry

Strategic Implications:

- Funding Narrative:

- Pitch to investors: “EU market entry delayed to 2027 pending standards clarity”

- Build detailed compliance budget into Series A pitch: €500K-750K for full EU readiness

- Frame as risk reduction: “We’ll enter EU market with clear compliance roadmap rather than regulatory uncertainty”

- Regulatory Sandbox Participation:

- Evaluate EU-level sandbox (available 2026) for SMEs

- Benefits: Regulatory guidance, controlled testing environment, reduced risk

- Investment: Time commitment from technical team, documentation requirements

- Potential outcome: Build relationship with AI Office, demonstrate compliance readiness to investors and customers

- Flexible Market Entry Timeline:

- Continue UK/US growth through 2026

- Monitor Commission standards development closely

- Accelerate or delay EU entry based on:

- Standards availability

- Funding status

- Competitive dynamics (are larger competitors entering aggressively?)

Code & Clause Recommendation:

- Primary strategy: Delay EU market entry to Q2-Q3 2027

- Interim actions:

- Apply to regulatory sandbox in Q1 2026

- Raise Series A with compliance funding included (Q2 2026)

- Build compliance infrastructure in H2 2026

- Launch EU pilot customers in regulatory sandbox environment

- Full EU market launch once standards available

- Advantage: Reduced cash burn during regulatory uncertainty, stronger position when entering EU market

- Risk management: Maintain optionality to accelerate if standards clarify earlier than expected

Scenario 4: The Global GPAI Provider

Company Profile:

- 8,000 employees globally

- Products: Foundation models (LLMs) and downstream applications

- Classification: GPAI with systemic risk (Article 51)

- Already compliant with GPAI obligations (in effect since August 2025)

Current State:

- Comprehensive AI governance infrastructure

- Multiple downstream applications, some high-risk under Annex III

- Complex regulatory coordination across 27 EU Member States

- Existing relationships with AI Office for GPAI oversight

Digital Omnibus Impact:

Centralized Enforcement Advantage:

For GPAI-based high-risk systems where the model provider and system provider are the same entity:

- Single supervisory authority: AI Office (not 27 different national authorities)

- Consistent interpretation: Centralized oversight reduces regulatory fragmentation

- Pre-market conformity assessment: May face Commission pre-market testing for third-party conformity assessment cases

Downstream Application Strategy:

Different timeline implications for different product lines:

- GPAI model obligations: Already compliant, no change

- High-risk systems based on GPAI: Conditional timeline, AI Office enforcement

- High-risk systems NOT based on GPAI: National authority enforcement, conditional timeline

- Lower-risk applications: Minimal requirements, transparency focus

Organizational Challenge:

Coordinating compliance across multiple product teams, each facing potentially different:

- Enforcement authorities

- Conformity assessment procedures

- Timeline activations (if Commission declares standards ready at different times for different use cases)

Code & Clause Recommendation:

- Governance structure: Centralized AI compliance office coordinating across product lines

- Dual-track approach:

- Continue GPAI compliance and AI Office relationship cultivation

- Product-specific compliance for high-risk applications, monitoring standards readiness by use case

- Strategic advantage: Leverage early compliance and AI Office relationship to influence standards development (through industry association participation)

- Risk management: Build modular compliance components reusable across product lines

- Timeline flexibility: Can leverage extended timelines to phase high-risk application launches rather than attempting simultaneous compliance across portfolio

Scenario 5: The Critical Infrastructure Provider

Company Profile:

- 1,200 employees, €180M annual revenue

- Primary product: AI-based predictive maintenance system for energy grid management

- Classification: High-risk under Annex III (critical infrastructure – energy, transport)

- Sector: Highly regulated utility sector with existing safety and reliability requirements

- Customer base: 15 European utility companies, 8 US utilities

Current State:

- Existing compliance with sector-specific regulations (electricity market directives, grid codes)

- AI system embedded in broader grid management platform

- Strong safety culture and documentation practices from energy sector requirements

- Original compliance target: August 2, 2026

Digital Omnibus Impact:

Sector-Specific Considerations:

Critical infrastructure has unique characteristics:

- Public safety implications: System failures could cascade to widespread power outages

- Regulatory overlap: EU Network Codes, national energy regulations, plus AI Act

- Risk aversion: Utilities extremely conservative about untested systems

- Long procurement cycles: 18-24 months from evaluation to deployment

Strategic Tensions:

- Legacy Launch Pressure:

- Utilities planning multi-year grid modernization projects scheduled for 2026-2027

- If company doesn’t launch by mid-2026, competitors with “legacy” systems may lock in contracts

- Missing procurement windows could mean 2-3 year revenue delays

- Compliance Confidence:

- Energy sector documentation standards already rigorous (90% overlap with AI Act Article 11)

- Risk management processes mature due to safety-critical nature

- High confidence (85%+) that current system would pass conformity assessment

- BUT: Novel bias/fairness requirements in AI Act less familiar than traditional safety engineering

- Customer Requirements:

- Utility customers REQUIRE clarity on regulatory compliance before procurement

- Will not accept “probably compliant” – need definitive conformity certificates

- Prefer waiting for standards-backed certification over regulatory uncertainty

Code & Clause Recommendation:

Dual-Track Strategy:

Track 1: Legacy Market Position (Summer 2026)

- Launch current system version to existing customers (who know product and trust company)

- Position as “bridge solution” while standards finalize

- Lock in ability to provide updates to current version

- Target: Existing customer renewals and expansions

Track 2: Standards-Compliant “New Generation” (Q3 2027)

- Develop enhanced version incorporating harmonized standards once available

- Full conformity assessment and certification

- Premium positioning as “certified under harmonized standards”

- Target: New customer acquisitions and competitive tenders

Rationale:

- Protects existing revenue base with legacy provision

- Addresses customer requirement for regulatory certainty with certified new version

- Reduces risk: If legacy version has issues, certified version provides migration path

- Aligns with utility procurement cycles (existing customers renew faster than new procurement)

Investment Required:

- Track 1: €200K (accelerate current version launch, documentation)

- Track 2: €1.2M (standards implementation, conformity assessment, certification)

- Total: €1.4M phased over 18 months

Scenario 6: The Financial Services AI Platform

Company Profile:

- 650 employees, €95M annual revenue

- Primary products:

- Credit scoring AI (high-risk – Annex III: access to essential private services)

- Fraud detection AI (explicitly NOT high-risk under the financial fraud detection exception in Annex III, point 5(b))

- Customer service chatbot (limited risk – transparency obligations only)

- Sector: Heavily regulated financial services with existing compliance infrastructure

- Geographic focus: 12 EU Member States, considering expansion to 15

Current State:

- Existing compliance with GDPR, PSD2, consumer credit directives

- Large legal and compliance team (45 people)

- Multiple AI systems with different risk classifications

- Source of complexity: The same underlying technology falls into different risk classifications depending on the use case

Digital Omnibus Impact:

Multi-Classification Complexity:

This company perfectly illustrates AI Act complexity:

System 1: Credit Scoring (HIGH-RISK)

- Timeline: Conditional, long-stop December 2, 2027

- Requirements: Full Chapter III compliance (Articles 8-15)

- Authority: National market surveillance authority

- Conformity assessment: Third-party notified body

- Customer impact: Banks require conformity certificates to use

System 2: Fraud Detection (NOT HIGH-RISK)

- Timeline: No AI Act-specific timeline (general safety requirements apply)

- Requirements: Minimal – basic transparency, human oversight as good practice

- Authority: Financial services regulators (existing framework)

- Conformity assessment: Self-assessment

- Customer impact: Banks focus on efficacy, not AI Act compliance

System 3: Customer Chatbot (LIMITED RISK)

- Timeline: Already applies (transparency requirements in effect)

- Requirements: Article 50 transparency – users informed they’re interacting with AI

- Authority: National market surveillance authority

- Conformity assessment: Self-assessment with documentation

- Customer impact: Simple disclosure requirement

Strategic Challenge:

How to manage product portfolio where:

- Technical infrastructure is shared (same ML platforms, data pipelines)

- Regulatory requirements are dramatically different

- Customer conversations become confusing (“Why is credit scoring complex but fraud simple?”)

- Sales teams need clear messaging about what’s “AI Act compliant”

Code & Clause Recommendation:

Portfolio Management Approach:

Phase 1: Segregate Compliance Tracks (Q1 2026)

- Establish separate product compliance teams for each risk category

- Credit scoring: Dedicated high-risk compliance project (10 FTE)

- Fraud detection: Baseline documentation, focus on efficacy

- Chatbot: Simple transparency implementation (already done)

Phase 2: Credit Scoring Priority (Q2-Q4 2026)

- Focus resources on highest-risk, highest-revenue product

- Engage notified body for preliminary conformity assessment feedback

- Decision point Q3 2026: Legacy launch vs. wait for standards

Phase 3: Standards-Based Implementation (2027)

- Implement harmonized standards as they become available

- Full conformity assessment and certification

- Market positioning: “First certified credit scoring AI in Europe”

Customer Communication Strategy:

Problem: Banks asking “Are you AI Act compliant?”

Answer Framework:

- “We have three product lines with different AI Act requirements”

- “Our credit scoring system (high-risk) is undergoing conformity assessment targeting [date]”

- “Our fraud detection system (not high-risk) meets AI Act’s limited requirements and focuses on financial services regulation”

- “Our chatbot (limited risk) already complies with transparency requirements”

- “We’re happy to provide detailed compliance documentation for your procurement team”

Investment Allocation:

- Credit scoring (high-risk): €2.5M over 24 months (largest investment)

- Fraud detection: €200K (baseline documentation)

- Chatbot: €50K (already substantially complete)

- Shared infrastructure: €800K (governance, monitoring, documentation platforms)

- Total: €3.55M for portfolio-wide AI Act readiness

Key Insight: Don’t treat “AI Act compliance” as single initiative. Different products have radically different requirements and timelines. Portfolio approach prevents over-investment in low-risk systems and under-investment in high-risk ones.

Your Updated 2026-2028 Roadmap

Immediate Actions (December 2024 – March 2025)

Strategic Planning (All Organizations):

Action 1: Compliance Timeline Assessment

- Review all AI systems in your portfolio

- Classify by risk category (prohibited, high-risk Annex III, high-risk Annex I, limited risk, minimal risk)

- Map to new conditional timelines and long-stop dates

- Identify which systems face December 2027 vs. August 2028 deadlines

Deliverable: Portfolio risk matrix with updated compliance deadlines
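A portfolio risk matrix of this kind can start as a very small data structure. The sketch below is one possible shape, not a prescribed format: the class and function names are hypothetical, the example systems are borrowed from Scenario 6, and only the two long-stop dates stated in this article are encoded. Conditional (earlier) application dates are deliberately left out.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import List, Optional, Tuple


class RiskCategory(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK_ANNEX_III = "high-risk Annex III"
    HIGH_RISK_ANNEX_I = "high-risk Annex I"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Long-stop dates as stated in this article; other categories have no
# long-stop date under the proposal and map to None.
LONG_STOP = {
    RiskCategory.HIGH_RISK_ANNEX_III: date(2027, 12, 2),
    RiskCategory.HIGH_RISK_ANNEX_I: date(2028, 8, 2),
}


@dataclass(frozen=True)
class AISystem:
    name: str
    category: RiskCategory


def risk_matrix(systems: List[AISystem]) -> List[Tuple[str, str, Optional[date]]]:
    """Return (name, category, long-stop date) rows, earliest deadline first.

    Systems without a long-stop date are listed last.
    """
    rows = [(s.name, s.category.value, LONG_STOP.get(s.category)) for s in systems]
    return sorted(rows, key=lambda r: (r[2] is None, r[2] or date.max))


if __name__ == "__main__":
    portfolio = [
        AISystem("customer-chatbot", RiskCategory.LIMITED),
        AISystem("imaging-device", RiskCategory.HIGH_RISK_ANNEX_I),
        AISystem("credit-scoring", RiskCategory.HIGH_RISK_ANNEX_III),
    ]
    for name, category, deadline in risk_matrix(portfolio):
        print(f"{name:20} {category:22} {deadline or 'no long-stop date'}")
```

Sorting by deadline puts the December 2027 systems at the top, which is usually where remediation planning should begin.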

Action 2: Standards Readiness Monitoring Setup

- Assign responsibility for tracking CEN-CENELEC standardization committees

- Subscribe to European Commission AI Office updates

- Join relevant industry associations tracking standards development

- Establish monthly standards monitoring review process

Deliverable: Standards monitoring dashboard and responsible owner

Action 3: Stakeholder Communication

- Prepare board/executive briefing on Digital Omnibus changes

- Update investor materials with revised compliance timelines

- Brief product teams on strategic implications for roadmaps

- Communicate to customers (if B2B) regarding timing of conformity certification

Deliverable: Stakeholder communication packages by audience

Action 4: Legacy Launch Decision Framework

- Assess compliance confidence level for each high-risk system (scale 1-10)

- Evaluate competitive pressure (are competitors likely to pursue legacy launches?)

- Determine risk tolerance (financial impact of potential non-compliance)

- Apply decision matrix: Launch legacy vs. wait for standards

Deliverable: Go/no-go decision by product line with supporting rationale
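To make the decision matrix concrete, here is a minimal sketch that combines the three inputs from Action 4. Every threshold in it is a hypothetical placeholder chosen for illustration, not a value taken from the Digital Omnibus or from any regulator; calibrate the cut-offs to your own risk appetite before relying on the output.

```python
LEVELS = {"low": 0, "medium": 1, "high": 2}

WAIT = "wait for standards"
REVIEW_THEN_LAUNCH = "legacy launch after independent pre-market review"
LAUNCH = "legacy launch"


def legacy_launch_decision(confidence: int,
                           competitive_pressure: str,
                           risk_tolerance: str) -> str:
    """Suggest a path from compliance confidence (1-10), competitive
    pressure and risk tolerance (each 'low', 'medium' or 'high').

    All thresholds are illustrative assumptions.
    """
    if not 1 <= confidence <= 10:
        raise ValueError("confidence must be between 1 and 10")
    pressure = LEVELS[competitive_pressure]
    tolerance = LEVELS[risk_tolerance]

    # Low confidence: a legacy launch risks costly re-engineering later.
    if confidence <= 4:
        return WAIT
    # Nothing pushes toward an early launch, so avoid the exposure.
    if pressure == 0 and tolerance == 0:
        return WAIT
    # Very high confidence: launch without gating on an external review.
    if confidence >= 9:
        return LAUNCH
    # Middle ground: launch only if an independent review finds no major gaps.
    return REVIEW_THEN_LAUNCH


if __name__ == "__main__":
    # Roughly Scenario 1: about 75% confidence, moderate competitive pressure.
    print(legacy_launch_decision(8, "medium", "medium"))
```

Recording the inputs alongside the result gives you the "supporting rationale" half of the deliverable for each product line.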

For High-Risk AI Providers (Annex III Specific Actions):

Action 5: Preliminary Conformity Assessment

- Engage conformity assessment body for preliminary review (if budget allows)

- OR conduct rigorous internal self-assessment against known AI Act requirements

- Identify gaps between current system and Articles 8-15 requirements

- Estimate remediation effort and timeline for identified gaps

Deliverable: Gap analysis report with remediation plan

Action 6: Documentation Acceleration

- Compile existing technical documentation

- Map to Annex IV requirements (technical documentation for high-risk AI)

- Identify documentation gaps

- Establish documentation completion timeline

Deliverable: Technical documentation at 70%+ completion by Q2 2025

For Product-Embedded AI (Annex I Specific Actions):

Action 7: Product Regulation Coordination

- Review existing product compliance (MDR, machinery directive, automotive type approval, etc.)

- Identify overlaps between product requirements and AI Act requirements

- Coordinate with notified bodies handling product certification

- Plan integrated conformity assessment approach

Deliverable: Integrated compliance roadmap addressing both product regulation and AI Act

Q2-Q3 2025 Actions

Decision Execution Phase:

For Organizations Pursuing Legacy Launch:

Action 8: Accelerated Market Entry

- Finalize current product version for market launch

- Complete technical documentation for current version

- Prepare market surveillance dossier (evidence that system was lawfully placed on market before deadlines)

- Execute go-to-market strategy

Target: System on market by June-August 2026 (before potential early Commission decision)

Action 9: Version Control Strategy

- Establish clear version numbering for “legacy” system vs. future enhanced versions

- Document exactly what design elements are “unchanged” (preserving legacy status)

- Plan enhancement roadmap for post-standards versions

- Prepare customer communication on version evolution

Deliverable: Product version strategy and customer communication plan
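One engineering aid for documenting what is "unchanged" is a content fingerprint of the design specification. The sketch below is an assumption-heavy illustration: the spec fields are invented, and a matching hash is only internal evidence that a recorded specification has not drifted. It does not by itself establish legacy status, which remains a legal question.

```python
import hashlib
import json


def design_fingerprint(spec: dict) -> str:
    """Return a SHA-256 hex digest of a design spec.

    The spec is serialized as canonical JSON (sorted keys, fixed
    separators) so that key order does not affect the result.
    """
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def design_unchanged(baseline_fingerprint: str, current_spec: dict) -> bool:
    """Check whether the current spec still matches the recorded baseline."""
    return design_fingerprint(current_spec) == baseline_fingerprint


if __name__ == "__main__":
    # Hypothetical spec fields, for illustration only.
    legacy_spec = {
        "model_version": "1.0.0",
        "intended_purpose": "CV screening and candidate ranking",
        "training_data_snapshot": "2026-03",
    }
    baseline = design_fingerprint(legacy_spec)

    modified = dict(legacy_spec, model_version="1.1.0")
    print(design_unchanged(baseline, legacy_spec))  # True
    print(design_unchanged(baseline, modified))     # False
```

Storing the baseline fingerprint with the launch date makes it easier to show later which version was on the market and whether subsequent releases differ from it.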

For Organizations Waiting for Standards:

Action 10: Compliance Infrastructure Building

- Continue developing risk management systems per Article 9

- Implement data governance frameworks per Article 10

- Build quality management systems per Article 17

- Establish post-market monitoring per Article 72

Key principle: Use extended timeline to build excellent compliance, not just adequate

Action 11: Regulatory Sandbox Application

- Evaluate EU-level or national sandbox opportunities

- Prepare sandbox application materials

- Develop controlled testing protocols

- Engage with regulatory authorities

Target: Sandbox participation by Q4 2025 or Q1 2026

Universal Actions (All Organizations):

Action 12: Vendor Compliance Verification

- Audit all AI system vendors and third-party components

- Verify vendors’ Digital Omnibus response strategies

- Update procurement contracts with compliance representations

- Identify vendor dependencies and risks

Deliverable: Vendor compliance matrix and contract updates

Action 13: Cross-Functional Governance

- Establish or refresh AI compliance steering committee

- Define decision-making authority for compliance vs. market timing trade-offs

- Implement regular (monthly minimum) compliance status reviews

- Ensure engineering, legal, product, and executive alignment

Deliverable: Governance charter and meeting cadence

Q4 2025 – Q2 2026 Actions

Timeline Monitoring Phase:

Action 14: Commission Standards Declaration Watch

- Intensify monitoring of Commission communications

- Track CEN-CENELEC standardization progress

- Assess likelihood of early Commission decision on standards adequacy

- Update internal timeline projections monthly

Key milestone: If Commission announces standards adequacy by Q2 2026, Annex III enforcement could begin as early as Q4 2026 (6 months later)
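The interaction between the conditional trigger and the long-stop dates reduces to "declaration date plus a grace period, capped at the long-stop date". The sketch below assumes a 6-month grace period for Annex III and 12 months for Annex I; the article only states a 6-12 month range, so treat that split, and all names in the code, as assumptions to verify against the final text.

```python
import calendar
from datetime import date
from typing import Optional

# Long-stop dates as stated in this article.
LONG_STOP = {"annex_iii": date(2027, 12, 2), "annex_i": date(2028, 8, 2)}
# Assumed split of the "6-12 months" range between the two tracks.
GRACE_MONTHS = {"annex_iii": 6, "annex_i": 12}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def application_date(track: str, declared_on: Optional[date] = None) -> date:
    """Return the date on which high-risk obligations would start to apply.

    With no Commission declaration, the long-stop date applies. With one,
    the earlier of (declaration + grace period) and the long-stop date applies.
    """
    long_stop = LONG_STOP[track]
    if declared_on is None:
        return long_stop
    return min(add_months(declared_on, GRACE_MONTHS[track]), long_stop)


if __name__ == "__main__":
    # Declaration at the end of Q2 2026: Annex III obligations apply in Q4 2026.
    print(application_date("annex_iii", date(2026, 6, 30)))  # 2026-12-30
    # Late declaration: the long-stop date caps the result.
    print(application_date("annex_iii", date(2027, 9, 1)))   # 2027-12-02
    # No declaration at all: the long-stop date applies.
    print(application_date("annex_i"))                       # 2028-08-02
```

Re-running this whenever your estimate of the declaration date changes keeps internal timeline projections consistent with the monthly update in Action 14.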

Action 15: Competitive Intelligence

- Monitor competitor product launches

- Track which competitors are pursuing a legacy launch strategy vs. waiting

- Assess market share implications of different strategies

- Adjust strategy if competitive dynamics shift significantly

For Organizations with Legacy Systems on Market:

Action 16: Post-Launch Documentation

- Maintain comprehensive evidence that system was placed on market before deadlines

- Document all sales, deployment dates, and customer communications

- Preserve technical specifications of “legacy” version

- Prepare for potential market surveillance inquiries

Critical: Legacy provision requires proof system was lawfully on market. Documentation is essential.

Action 17: Standards-Compliant Version Planning

- Begin development of enhanced version incorporating harmonized standards as they emerge

- Maintain parallel tracks: legacy version support + new version development

- Plan customer migration strategy from legacy to certified version

Q3 2026 – Q4 2027 Actions

Standards Implementation Phase:

Action 18: Harmonized Standards Adoption

- Implement harmonized standards as published in Official Journal

- Update technical documentation to demonstrate standards conformance

- Conduct internal testing against standards requirements

- Engage conformity assessment bodies

Timeline assumption: Standards become available between Q3 2026 and Q2 2027

CRITICAL: THIS IS NOT DEREGULATION

The Digital Omnibus adjusts WHEN compliance is required, not WHAT compliance entails.

All requirements remain equally stringent:

- ✓ Risk management (Article 9) – unchanged

- ✓ Data governance (Article 10) – unchanged

- ✓ Technical documentation (Article 11) – unchanged

- ✓ Transparency, oversight, robustness (Articles 13-15) – unchanged

- ✓ Prohibited AI practices enforcement – unchanged

- ✓ GPAI obligations – unchanged

This is timeline adjustment to align with standards readiness, NOT permission to delay compliance preparation.

Organizations waiting for standards still need systems capable of meeting these obligations once standards clarify specific technical implementations.

Action 19: Conformity Assessment

- Select conformity assessment pathway:

- Internal assessment (if allowed for your use case)

- Third-party assessment by notified body

- Integrated product+AI assessment (for Annex I systems)

- Complete conformity assessment procedure

- Obtain EU declaration of conformity

Target: Conformity assessment complete 3-6 months before applicable deadline

Action 20: Market Surveillance Preparation

- Prepare comprehensive technical documentation packages

- Establish post-market monitoring systems (Article 72)

- Implement incident reporting procedures (Article 73)

- Set up continuous compliance monitoring

Action 21: Long-Stop Date Preparation

- For Annex III systems: Ensure compliance by December 2, 2027 regardless of standards status

- For Annex I systems: Ensure compliance by August 2, 2028 regardless of standards status

- Build contingency plan if standards still incomplete (rely on Commission guidance and common specifications)

Critical: Long-stop dates are absolute. Even if standards aren’t complete, requirements apply.

2028+ Actions

Continuous Compliance Phase:

Action 22: Ongoing Monitoring and Updates

- Maintain risk management system reviews (Article 9: continuous process)

- Update technical documentation as system evolves (Article 11)

- Monitor system performance and incidents (Article 72)

- Adapt to evolving regulatory guidance and enforcement patterns

Action 23: Multi-Jurisdictional Coordination

- Monitor non-EU AI regulations (US states, UK, Canada, etc.)

- Maintain compliance across multiple jurisdictions

- Leverage EU compliance work for other regimes where applicable

- Participate in international AI governance discussions

What to Monitor: Your Compliance Dashboard

Critical Monitoring Indicators

1. European Commission Standards Declarations

What to watch:

- Official Journal publications of harmonized standards

- Commission implementing acts on common specifications

- AI Office public statements on implementation readiness

Where to monitor:

- Official Journal of the European Union (EUR-Lex): https://eur-lex.europa.eu

- European Commission Digital Strategy website: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- AI Act Service Desk: https://ai-act-service-desk.ec.europa.eu

Monitoring frequency: Weekly

Red flag indicator: Commission announcement of “adequate measures in support of compliance” triggers 6-12 month countdown to enforcement

2. CEN-CENELEC-ETSI Standardization Progress

What to watch:

- Technical committee (TC) meeting outcomes

- Draft standards publication for public comment

- Final standards adoption announcements

Where to monitor:

- CEN and CENELEC (European Committee for Standardization and European Committee for Electrotechnical Standardization): https://www.cencenelec.eu

- ETSI (European Telecommunications Standards Institute): https://www.etsi.org

- Industry association updates (many consolidate standardization tracking)

Key technical committees:

- CEN-CENELEC JTC 21 (AI)

- ISO/IEC JTC 1/SC 42 (Artificial Intelligence)

Monitoring frequency: Monthly

What to look for: Movement from “working draft” to “committee draft” to “draft standard” to “published standard” stages

3. Member State Implementation Status

What to watch:

- National competent authority designations (market surveillance and notifying authorities)

- National AI strategies and implementation roadmaps

- Conformity assessment body notifications and authorizations

- National guidance documents

Where to monitor:

- AI Act Implementation Tracker: https://artificialintelligenceact.eu/national-implementation-plans/

- Individual Member State government websites (relevant ministries)

- Trade associations operating in your target Member States

Monitoring frequency: Quarterly

Why it matters: Uneven implementation across Member States could create compliance complexity. Some authorities may be more/less stringent or faster/slower than others.

4. AI Office Guidance and Enforcement Actions

What to watch:

- AI Office published guidelines and FAQs

- Codes of practice updates (especially GPAI Code of Practice)

- Enforcement decisions and precedents

- Public consultation outcomes

Where to monitor:

- AI Office website: https://digital-strategy.ec.europa.eu/en/policies/ai-office

- AI Pact (voluntary compliance initiative): https://digital-strategy.ec.europa.eu/en/policies/ai-pact

- Legal databases tracking enforcement actions (once enforcement begins)

Monitoring frequency: Monthly

Red flag indicator: First enforcement actions against companies in your sector or with similar AI systems

5. Conformity Assessment Body Landscape

What to watch:

- Which conformity assessment bodies are getting notified and authorized

- Sectoral expertise of notified bodies (which specialize in your AI use cases)

- Conformity assessment timelines and backlogs

- Published conformity assessment methodologies

Where to monitor:

- NANDO (New Approach Notified and Designated Organisations) database (will be updated with AI Act notified bodies)

- Individual notified body websites and publications

- Industry association compilations

Monitoring frequency: Quarterly, increasing to monthly when approaching conformity assessment

Why it matters: Limited notified body capacity could create bottlenecks. Early engagement may be critical.

6. Competitive Intelligence

What to watch:

- Competitor product launches (potential legacy systems before deadlines)

- Competitor marketing claims about AI Act compliance

- Competitor partnerships with conformity assessment bodies

- Customer RFPs and procurement requirements mentioning AI Act

Where to monitor:

- Competitor websites and press releases

- Industry trade publications

- Customer communications and RFPs

- Conference presentations and speaking engagements

Monitoring frequency: Monthly

Strategic value: Understand whether competitors are pursuing a legacy launch strategy or waiting for standards.

7. Legal and Policy Developments

What to watch:

- European Parliament and Council discussions on potential AI Act amendments

- Court cases challenging AI Act provisions or interpretations

- Academic and expert legal analysis

- Industry advocacy positions

Where to monitor:

- EUR-Lex (official legal portal)

- European Parliament and Council websites

- Major law firm publications (many publish regular AI Act updates)

- Academic journals and conferences

Monitoring frequency: Monthly

Why it matters: The Digital Omnibus is itself still a proposal that must go through trilogue negotiations, so further amendments are possible.

Practical Monitoring Setup

Recommended Team Structure:

Regulatory Monitoring Lead (0.5 FTE minimum):

- Aggregates updates from all sources

- Produces monthly monitoring summary report

- Flags urgent developments requiring immediate action

- Maintains monitoring dashboard

Cross-Functional Review Team:

- Legal: Interprets regulatory implications

- Engineering: Assesses technical implementation impact

- Product: Evaluates timeline and market implications

- Executive: Makes strategic decisions based on developments

Monthly Monitoring Rhythm:

- Week 1: Regulatory monitoring lead compiles monthly report

- Week 2: Cross-functional team reviews report

- Week 3: Strategic decisions and action planning

- Week 4: Implementation of decisions

Monitoring Dashboard Template:

| Indicator | Current Status | Change from Last Month | Impact Level | Action Required |
|---|---|---|---|---|
| Commission Standards Declaration | No announcement | No change | 🟡 Medium | Continue monitoring |
| CEN-CENELEC Standards Progress | 3 draft standards published for comment | +2 from last month | 🟢 Low | Review drafts when finalized |
| Member State Implementation | 18 of 27 designated authorities | +3 from last month | 🟢 Low | Monitor lagging states |
| AI Office Guidance | New FAQ published on risk assessment | New guidance | 🟡 Medium | Review FAQ, update internal guidance |
| Conformity Assessment Bodies | 5 notified in our sector | +2 from last month | 🟢 Low | Evaluate for preliminary engagement |
| Competitive Intelligence | Competitor X announced Q2 2026 launch | Potential legacy strategy | 🔴 High | Assess impact on our strategy |
| Legal Developments | Digital Omnibus in trilogue | Ongoing negotiations | 🟡 Medium | Monitor for further amendments |

Color coding:

- 🔴 High impact: Requires immediate strategic discussion

- 🟡 Medium impact: Requires attention but not urgent

- 🟢 Low impact: Track but no immediate action needed
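For teams that prefer to keep the dashboard in code rather than a spreadsheet, the template and its color coding could be modeled roughly as follows. This is a minimal sketch, not a prescribed tool: the names `Impact`, `Indicator`, and `agenda` are illustrative assumptions, and the rows simply repeat the sample values from the template above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Impact(IntEnum):
    """Color coding from the template, ordered so levels can be compared."""
    LOW = 1      # 🟢 track, no immediate action needed
    MEDIUM = 2   # 🟡 requires attention but not urgent
    HIGH = 3     # 🔴 requires immediate strategic discussion


@dataclass(frozen=True)
class Indicator:
    """One dashboard row (hypothetical structure mirroring the table columns)."""
    name: str
    status: str
    change: str
    impact: Impact
    action: str


def agenda(rows, minimum=Impact.MEDIUM):
    """Return rows at or above `minimum`, highest impact first.

    sorted() is stable, so rows with equal impact keep their dashboard order.
    """
    selected = [r for r in rows if r.impact >= minimum]
    return sorted(selected, key=lambda r: r.impact, reverse=True)


# Sample rows copied from the template; real values come from the monthly report.
DASHBOARD = [
    Indicator("Commission Standards Declaration", "No announcement",
              "No change", Impact.MEDIUM, "Continue monitoring"),
    Indicator("CEN-CENELEC Standards Progress", "3 draft standards published for comment",
              "+2 from last month", Impact.LOW, "Review drafts when finalized"),
    Indicator("Member State Implementation", "18 of 27 designated authorities",
              "+3 from last month", Impact.LOW, "Monitor lagging states"),
    Indicator("AI Office Guidance", "New FAQ published on risk assessment",
              "New guidance", Impact.MEDIUM, "Review FAQ, update internal guidance"),
    Indicator("Conformity Assessment Bodies", "5 notified in our sector",
              "+2 from last month", Impact.LOW, "Evaluate for preliminary engagement"),
    Indicator("Competitive Intelligence", "Competitor X announced Q2 2026 launch",
              "Potential legacy strategy", Impact.HIGH, "Assess impact on our strategy"),
    Indicator("Legal Developments", "Digital Omnibus in trilogue",
              "Ongoing negotiations", Impact.MEDIUM, "Monitor for further amendments"),
]

if __name__ == "__main__":
    # Print the items the cross-functional team should discuss this month.
    for row in agenda(DASHBOARD):
        print(f"{row.impact.name:<6} {row.name}: {row.action}")
```

Calling `agenda(DASHBOARD, Impact.HIGH)` narrows the list to items that need immediate strategic discussion, which may be a useful input for the Week 3 decision meeting.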

Conclusion: Strategic Positioning in Regulatory Uncertainty

The New Compliance Paradigm

The Digital Omnibus on AI fundamentally changes the nature of AI Act compliance from a fixed-date engineering project to an adaptive strategic business decision. This shift creates both challenges and opportunities:

Challenges:

- Planning complexity: Multiple timeline scenarios require contingency planning

- Resource allocation: Difficult to budget and staff when deadlines are conditional

- Competitive uncertainty: Unclear which competitors will pursue legacy launches

- Market confusion: Customers may struggle to understand compliance status

Opportunities:

- Extended preparation time: Organizations can build superior compliance infrastructure

- Strategic differentiation: Choice of strategy (legacy vs. wait) becomes competitive positioning

- Risk-adjusted approaches: Flexibility to match strategy to risk tolerance and market position

- Standards-based excellence: Organizations waiting for standards can build compliance from clear technical specifications

Three Strategic Archetypes

Organizations are clustering into three strategic approaches:

1. The Aggressive First-Mover

Profile:

- High compliance confidence (85%+ certainty current implementation would pass)

- Significant competitive pressure (market share at risk during delays)

- Risk-tolerant leadership willing to accept potential non-compliance costs

- Strong customer relationships enabling trust despite regulatory uncertainty

Strategy:

- Pursue legacy launch before mid-2026

- Lock in market position before competitors

- Plan standards-compliant “v2.0” for 2027-2028

Example sectors: HR tech, marketing AI, some financial services

2. The Cautious Standards-Waiter

Profile:

- Moderate to low compliance confidence (uncertainty about specific technical requirements)

- Lower competitive pressure (market timing less critical)

- Risk-averse leadership prioritizing compliance certainty

- Customers requiring explicit conformity certification before procurement

Strategy:

- Delay market entry until standards are available (late 2027)

- Build compliance from clear technical specifications

- Position as “certified under harmonized standards” for premium market position

Example sectors: Critical infrastructure, some medical devices, public sector sales

3. The Hybrid Dual-Tracker

Profile:

- Mixed portfolio of AI systems (some high confidence, some uncertain)

- Diverse customer base (some accepting uncertainty, some requiring certification)

- Sophisticated compliance capabilities enabling parallel strategies

- Sufficient resources to pursue multiple approaches simultaneously

Strategy:

- Launch legacy versions for existing customers and early adopters

- Simultaneously develop standards-compliant versions for broader market

- Provide customer choice: immediate deployment vs. certified solution

Example sectors: Enterprise software platforms, multi-product AI companies

What Excellence Looks Like

As the regulatory landscape evolves through 2026-2028, leading organizations will demonstrate:

1. Adaptive Strategy

- Regular (quarterly minimum) strategic reviews adjusting for new information

- Scenario planning addressing multiple timeline possibilities

- Decision frameworks that balance risk, opportunity, and compliance

- Willingness to pivot strategy as standards and competitive dynamics evolve

2. Superior Documentation

- Comprehensive technical documentation exceeding minimum requirements

- Rigorous record-keeping of design decisions and risk assessments

- Audit trails demonstrating continuous compliance efforts

- Documentation practices that serve multiple purposes (conformity assessment, market surveillance, customer assurance, internal learning)

3. Proactive Engagement

- Active participation in standardization processes (through industry associations)

- Early engagement with conformity assessment bodies for preliminary feedback

- Regulatory sandbox participation where applicable

- Constructive dialogue with national authorities and the AI Office

4. Competitive Intelligence

- Systematic monitoring of competitor strategies

- Understanding of sector-wide compliance approaches

- Awareness of enforcement patterns as they emerge

- Adaptation based on market learnings

5. Organizational Alignment

- Executive leadership that understands compliance as a strategic business decision

- Cross-functional governance ensuring engineering, legal, product, and business alignment

- Resource commitment proportional to risk and business importance

- Culture viewing compliance as competitive advantage, not administrative burden

The Bigger Picture: AI Governance Maturity

The Digital Omnibus timeline adjustments reflect a broader reality: AI governance is evolving in real time. The organizations that thrive won’t be those that achieve minimal compliance at the latest possible moment. They’ll be those that:

Build Governance as Competitive Advantage:

- Develop robust risk management practices that improve product quality, not just satisfy regulators

- Use transparency requirements to build customer trust and market differentiation

- Leverage human oversight requirements to create better user experiences

- Turn fairness and bias mitigation into product features customers value

Embrace Regulatory Engagement:

- View regulators as partners in responsible AI development, not adversaries

- Provide constructive feedback on proposed standards and guidance

- Share learnings and best practices that inform better regulation

- Build relationships with authorities that enable faster, smoother conformity assessment

Invest in Foundational Capabilities:

- Data governance infrastructure that serves both compliance and AI system quality

- Documentation practices that accelerate development cycles, not just satisfy auditors

- Testing and validation frameworks that improve models while demonstrating compliance

- Monitoring systems that catch issues proactively rather than reactively

Final Thought: Uncertainty as Strategic Opportunity

The conditional timelines and long-stop dates create genuine strategic choices. Organizations that view this uncertainty as opportunity—rather than obstacle—will emerge stronger when standards arrive.

The question is not: “When must we comply?”

The question is: “How can we use this extended timeline to build AI systems that are not just compliant, but genuinely trustworthy, safe, and valuable?”

The Digital Omnibus doesn’t change the destination: trustworthy, compliant AI systems that respect fundamental rights and demonstrate technical excellence. It changes the route. Organizations that use this recalibration period to build stronger compliance foundations, and genuinely better AI systems, will lead the industry when the long-stop dates of December 2027 (Annex III) or August 2028 (Annex I) arrive.

The race is not to the fastest, but to the most strategic.

Legal Disclaimer

This article provides general guidance based on Regulation (EU) 2024/1689 and publicly available regulatory materials. It does not constitute legal advice. EU AI Act compliance requirements depend on specific organizational contexts, use cases, system characteristics, and regulatory interpretations that may evolve over time.

Startups should consult qualified legal counsel with EU AI Act expertise for compliance decisions specific to their circumstances. The authors and Code & Clause disclaim all liability for actions taken or not taken based on information in this article.

Regulatory requirements and interpretations continue evolving as national competent authorities issue guidance and enforcement actions establish precedent. Organizations should monitor official guidance from the European Commission AI Office and relevant national authorities.

Frequently Asked Questions

General Understanding

Is the Digital Omnibus on AI law yet, or is it still just a proposal?