Five critical EU AI Act mistakes consistently emerge in compliance implementation: incorrect classification, superficial risk assessment, inadequate documentation, weak data governance, and absent monitoring. Each is a preventable compliance failure with significant regulatory consequences. This analysis provides systematic remediation strategies grounded in specific Article requirements, conformity assessment frameworks, and real-world implementation experience.

EU AI Act enforcement begins in earnest in August 2026, when requirements for Annex III high-risk systems become applicable; requirements for high-risk systems covered by Article 6(1) follow in August 2027¹. Organizations rushing to achieve compliance face a critical challenge: audit failures increasingly stem from preventable implementation mistakes rather than technical deficiencies. The European Commission’s AI Office has signaled that conformity assessments will scrutinize not just technical capabilities, but systematic evidence of compliance processes.

The European Commission’s February 2025 Guidelines on Prohibited AI Practices and the Notified Body Coordination Group’s 2025 Conformity Assessment Framework reveal common patterns in preliminary assessments: organizations frequently misunderstand fundamental requirements, implement superficial compliance measures, or overlook critical documentation obligations². These mistakes carry significant consequences: fines of up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices, up to €15 million or 3% for breaches of most high-risk obligations, and market access denial for systems failing conformity assessment³.

This analysis examines five critical mistakes that consistently emerge in EU AI Act implementation, drawn from regulatory guidance, conformity assessment frameworks, and early compliance experiences. Each mistake includes specific remediation strategies grounded in actual regulatory requirements rather than theoretical compliance approaches.

Understanding these common failures enables organizations to avoid costly remediation cycles, regulatory scrutiny, and implementation delays as enforcement timelines approach.

Mistake #1: Incorrect System Risk Classification

The Classification Error

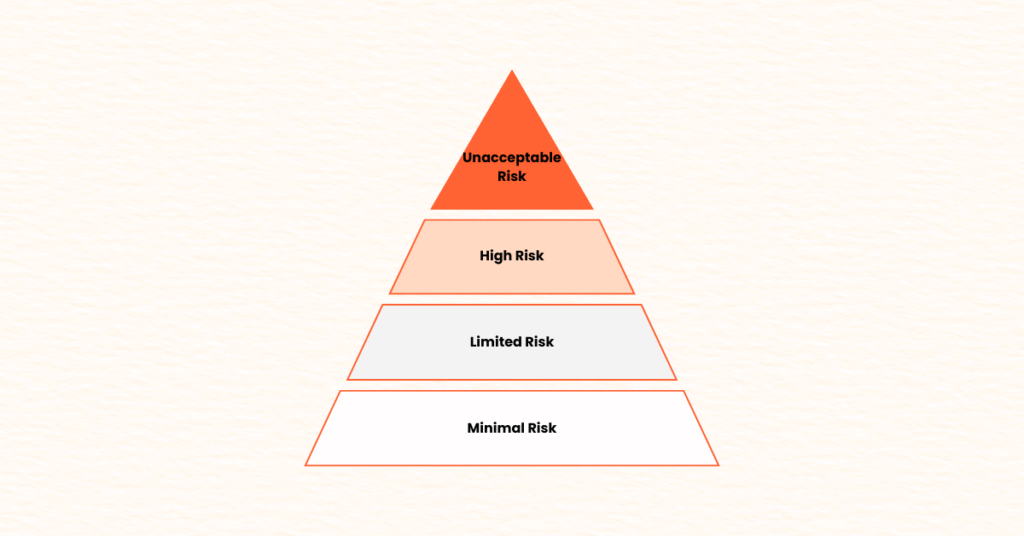

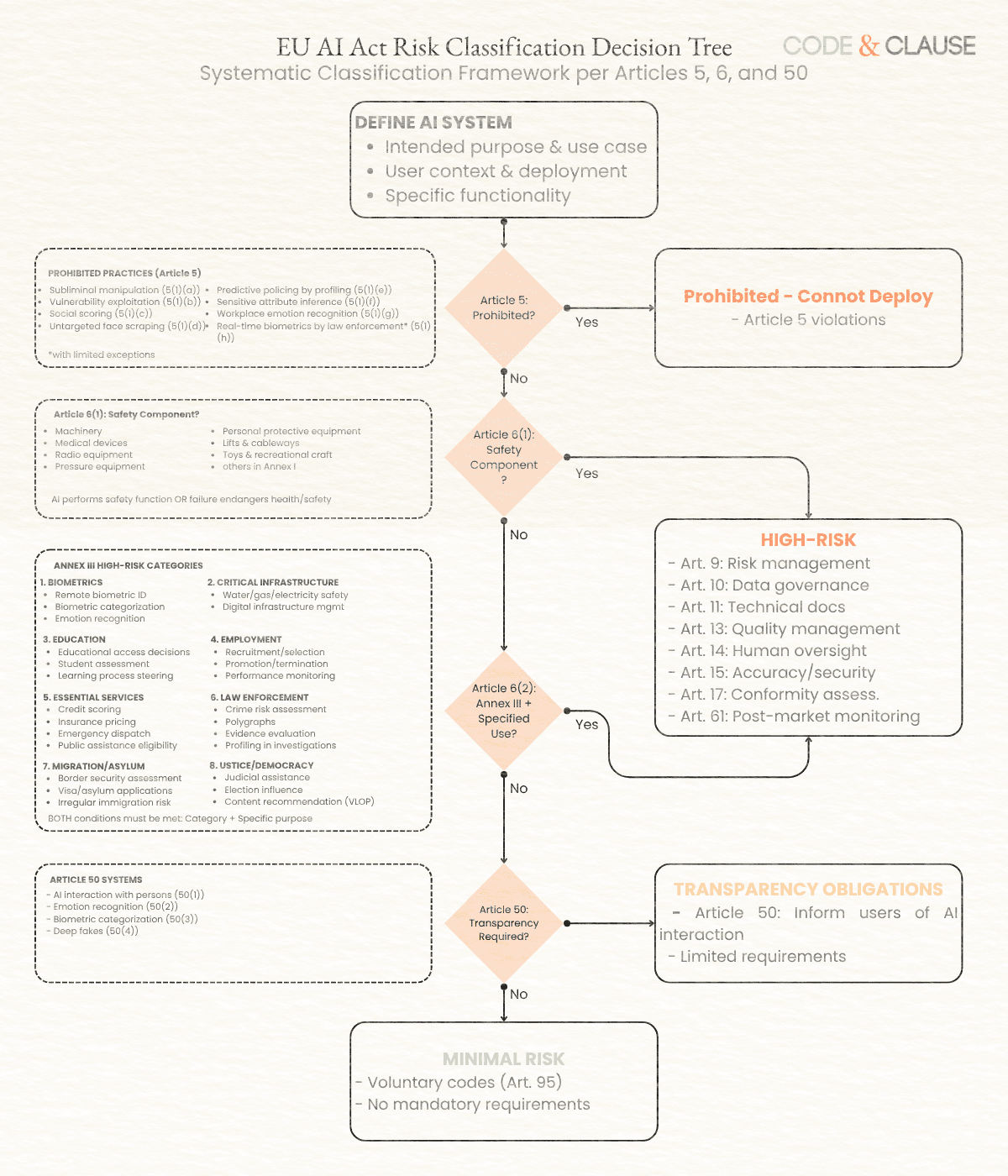

The most fundamental and consequential mistake involves misclassifying AI systems under the EU AI Act’s risk-based framework. Organizations frequently underestimate system risk levels, particularly when AI systems fall into Annex III high-risk categories through subtle use case applications rather than obvious regulatory triggers.

Annex III defines eight high-risk areas: biometrics, critical infrastructure, education and vocational training, employment and workers management, access to essential services, law enforcement, migration, asylum and border control, and administration of justice and democratic processes⁴. However, classification complexity arises from use case interpretation rather than technology type. An AI system performing facial recognition becomes high-risk only when it is used for a purpose listed in Annex III (for example, remote biometric identification), which **Article 6(2)** brings within the high-risk regime⁵.

Why Classification Mistakes Occur

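Ambiguous Use Case Boundaries: Organizations struggle to determine whether their specific application falls within Annex III scope. A recruitment screening tool that filters or evaluates applications falls under Annex III point 4(a), but the Article 6(3) derogation can take it out of the high-risk category if, for example, it performs only a narrow procedural task and does not materially influence the outcome of decisions. The derogation never applies where the system profiles natural persons.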

Component vs. System Classification: Confusion exists around whether individual AI components or integrated systems require classification. Article 6(1) addresses safety components of products covered by specific Union harmonization legislation⁶, creating complexity for AI integrated into regulated products.

Dynamic Use Case Evolution: Systems initially deployed for limited risk applications may evolve toward high-risk use cases without triggering classification review. Progressive feature additions can inadvertently cross risk thresholds.

Classification Failure Consequences

Incorrect classification creates cascading compliance failures. Underclassifying a high-risk system as limited risk means:

- Missing Article 9 risk management system requirements

- Inadequate technical documentation per Article 11

- Absent quality management systems required by Article 17

- No conformity assessment per Article 43

- Potential placing on the market of a non-compliant high-risk system, breaching provider obligations under Article 16

The European Commission’s guidance emphasizes that classification disputes default toward higher risk categories when use cases demonstrate potential for significant harm to fundamental rights⁷.

Evidence-Based Classification Framework

Implement systematic classification through documented decision-making:

Step 1: Use Case Analysis. Document precisely how the AI system will be used, including:

- Specific decisions made by or with AI system assistance

- Level of human oversight and decision-making authority

- Population affected and potential impacts on individuals

- Integration with other systems or processes

Step 2: Annex III Mapping. Systematically evaluate each Annex III category:

- Review official EU guidance on category interpretation

- Analyze comparable systems classified by regulatory authorities

- Document why each category applies or does not apply

- Seek legal counsel for ambiguous classifications

Step 3: Cross-Functional Validation. Classification requires input from:

- Legal counsel familiar with EU AI Act requirements

- Technical teams understanding system capabilities

- Business stakeholders defining use cases and purposes

- Risk management professionals assessing potential impacts

Step 4: Documentation Requirements. Maintain classification rationale documentation including:

- Detailed use case descriptions with examples

- Annex III category analysis with supporting reasoning

- Alternative classification considerations evaluated

- Legal counsel review and approval

- Regular classification review schedule (minimum annually or upon significant system changes)
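
The four steps above produce a decision that should be reproducible from its record. As a minimal sketch, assuming a hypothetical `ClassificationRecord` type (not a standard schema), the Python below stores per-area reasoning, flags Annex III areas still lacking analysis, applies the Article 6(3) rule that a documented derogation can take an Annex III system out of the high-risk category unless it profiles natural persons, and checks the minimum annual review. The area labels are shorthand, and the Article 6(1) product-safety route is deliberately not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date, timedelta

# The eight Annex III areas (points 1-8); labels are shorthand.
ANNEX_III_AREAS = (
    "biometrics",
    "critical_infrastructure",
    "education_vocational_training",
    "employment_workers_management",
    "essential_services",
    "law_enforcement",
    "migration_asylum_border_control",
    "justice_democratic_processes",
)


@dataclass
class ClassificationRecord:
    """Hypothetical audit record for one classification decision."""

    system_name: str
    use_case: str
    area_analysis: dict[str, str]  # area -> why it does or does not apply
    areas_applicable: set[str] = field(default_factory=set)
    derogation_basis: str | None = None  # documented Article 6(3) ground, if any
    profiles_natural_persons: bool = False
    counsel_approved: bool = False
    last_review: date = field(default_factory=date.today)

    def missing_analysis(self) -> list[str]:
        """Annex III areas with no written reasoning yet."""
        return [a for a in ANNEX_III_AREAS if not self.area_analysis.get(a)]

    def is_high_risk(self) -> bool:
        """Annex III route only; Article 6(1) products are not modelled."""
        if not self.areas_applicable:
            return False
        if self.profiles_natural_persons:
            return True  # profiling is always high-risk under Article 6(3)
        return self.derogation_basis is None

    def needs_review(self, today: date, significant_change: bool = False) -> bool:
        """Review at least annually, and on any significant system change."""
        return significant_change or today >= self.last_review + timedelta(days=365)


rec = ClassificationRecord(
    system_name="cv-screener",
    use_case="Ranks job applications for recruiters",
    area_analysis={"employment_workers_management": "Filters applications (point 4(a))"},
    areas_applicable={"employment_workers_management"},
    profiles_natural_persons=True,
    last_review=date(2025, 1, 15),
)
print(rec.is_high_risk())                   # True
print(len(rec.missing_analysis()))          # 7
print(rec.needs_review(date(2026, 1, 20)))  # True
```

The seven missing entries are the point: a record is audit-ready only when every Annex III area carries written reasoning, including the ones that clearly do not apply.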

Organizations should reference our complete risk classification framework for detailed guidance on implementing systematic classification procedures.

Mistake #2: Superficial Risk Assessment Implementation

The Risk Assessment Gap

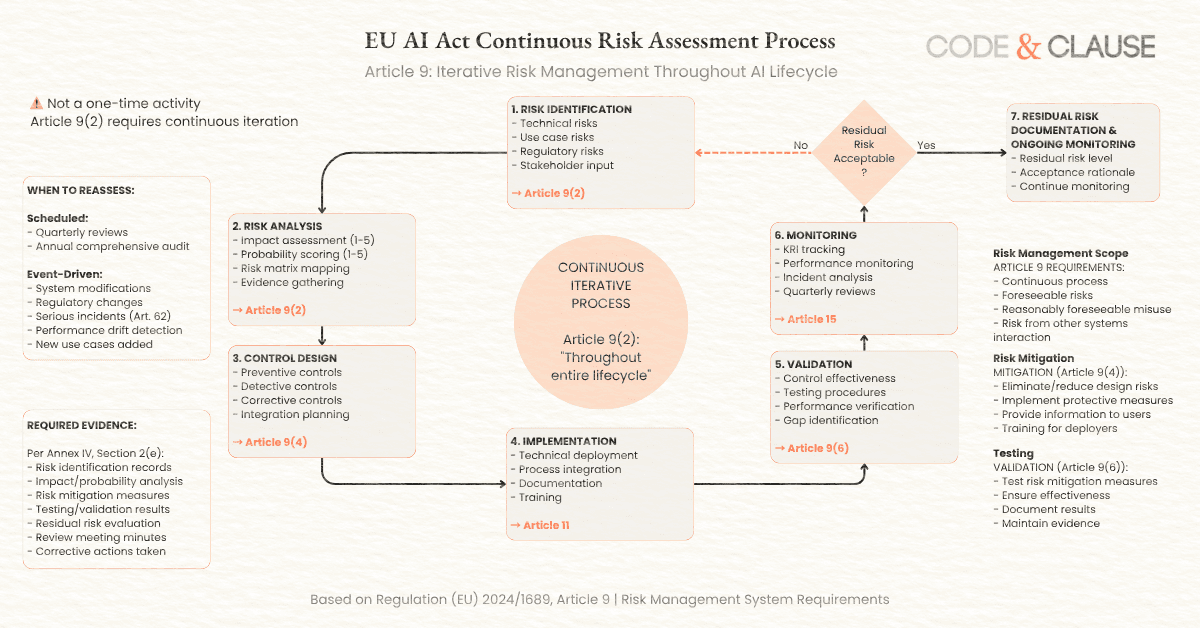

Organizations frequently treat Article 9 risk management as a one-time documentation exercise rather than implementing the required continuous, iterative risk management system throughout the AI system lifecycle⁸. This fundamental misunderstanding manifests in superficial risk assessments that satisfy neither regulatory requirements nor operational risk management needs.

Article 9(2) explicitly describes the risk management system as “a continuous iterative process planned and run throughout the entire lifecycle of a high-risk AI system, requiring regular systematic review and updating”⁹. Conformity assessments evaluate not just whether risk documentation exists, but evidence of systematic risk identification, analysis, mitigation, and monitoring over time.

Common Risk Assessment Deficiencies

Generic Risk Templates: Organizations adopt generic risk assessment templates without customizing for their specific AI system architecture, use cases, and operational context. These assessments identify theoretical risks without analyzing actual system implementation and deployment environment.

Absent Technical Risk Analysis: Risk assessments focus on business and compliance risks while overlooking technical risks specific to AI systems: model drift, adversarial attacks, training data quality degradation, edge case performance, and system integration failures.

Missing Control Effectiveness Validation: Organizations document risk mitigation controls without implementing validation procedures demonstrating control effectiveness. Article 9(6) requires high-risk AI systems to be tested to identify the most appropriate and targeted risk management measures and to ensure they perform consistently for their intended purpose¹⁰.

No Continuous Monitoring Integration: Risk assessments remain static documents disconnected from operational monitoring systems. Article 9(2)(c) requires evaluating risks that emerge from data gathered by the Article 72 post-market monitoring system, which means risk assessment must be connected to ongoing performance evaluation¹¹.

⚠️ Critical Consideration: Human Oversight Requirements

Article 14 mandates human oversight for high-risk AI systems²⁴, yet superficial implementation represents a commonly overlooked compliance gap. Effective oversight requires:

- Trained personnel with technical competency to understand AI system outputs and limitations

- Decision-making authority to override, disregard, or reverse AI system decisions

- Access to complete information enabling informed oversight rather than blind acceptance

- Clear procedures defining when and how human oversight intervenes

“Human-in-the-loop” without genuine authority and competency fails Article 14 requirements. Organizations must demonstrate that human oversight provides meaningful control rather than procedural formality.
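
Evidence of meaningful oversight can come from logging every reviewer decision next to the AI output it concerns. A minimal Python sketch; `OversightLog` and `Action` are hypothetical names, and the set of authorized reviewers (people both trained and empowered to override) is an organizational assumption rather than something the Act enumerates.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Action(Enum):
    ACCEPT = "accept"
    OVERRIDE = "override"    # reviewer replaces the AI output
    DISREGARD = "disregard"  # reviewer decides not to use the output


@dataclass(frozen=True)
class ReviewDecision:
    case_id: str
    ai_output: str
    reviewer: str
    action: Action
    rationale: str
    timestamp: datetime


class OversightLog:
    """Hypothetical evidence log of human review of AI outputs."""

    def __init__(self, authorized_reviewers: set[str]):
        # Assumed: people who are trained AND empowered to override.
        self.authorized = set(authorized_reviewers)
        self.decisions: list[ReviewDecision] = []

    def record(self, case_id: str, ai_output: str, reviewer: str,
               action: Action, rationale: str = "") -> ReviewDecision:
        if reviewer not in self.authorized:
            raise PermissionError(f"{reviewer} has no oversight authority")
        if action is not Action.ACCEPT and not rationale:
            raise ValueError("overrides and disregards need a written rationale")
        decision = ReviewDecision(case_id, ai_output, reviewer, action,
                                  rationale, datetime.now(timezone.utc))
        self.decisions.append(decision)
        return decision

    def intervention_rate(self) -> float:
        """Share of reviewed cases where the reviewer did not simply accept."""
        if not self.decisions:
            return 0.0
        changed = sum(d.action is not Action.ACCEPT for d in self.decisions)
        return changed / len(self.decisions)


log = OversightLog({"analyst_a"})
log.record("c1", "decline", "analyst_a", Action.ACCEPT)
log.record("c2", "decline", "analyst_a", Action.OVERRIDE, "income data was outdated")
print(log.intervention_rate())  # 0.5
```

A near-zero intervention rate does not by itself prove rubber-stamping, but it is a signal worth examining alongside reviewer training records.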

Real-World Risk Assessment Case Study

A European fintech company developing AI credit scoring systems initially conducted a basic risk assessment that documented discrimination risks and cited “diverse training data” as the mitigation. A preliminary conformity assessment identified critical deficiencies:

- No quantitative bias metrics or measurement procedures

- Absent documentation of actual data diversity characteristics

- Missing ongoing bias monitoring in production systems

- No procedures for detecting and responding to bias indicators

The company implemented a systematic risk assessment including:

- Quantitative fairness metrics across protected demographic groups

- Statistical analysis of training data representativeness

- Automated bias monitoring in production with defined thresholds

- Incident response procedures for bias detection

- Quarterly risk assessment review incorporating production performance data

This revised approach satisfied conformity assessment requirements and provided operational risk management value beyond compliance obligations.

Implementing Systematic Risk Assessment

Build comprehensive risk management per Article 9 requirements:

Risk Identification Framework:

Technical Risk Analysis:

- Data quality and representativeness risks

- Model architecture vulnerabilities (adversarial attacks, edge cases)

- Integration risks with external systems

- Infrastructure availability and performance risks

- Update and maintenance risks

Use Case Risk Analysis:

- Individual harm potential (discrimination, privacy, safety)

- Group impacts and fairness considerations

- Societal implications and fundamental rights impacts

- Economic consequences and competitive effects

Regulatory Compliance Risk Analysis:

- Specific EU AI Act requirement gaps

- GDPR data protection compliance

- Sector-specific regulatory requirements

- Multi-jurisdictional compliance obligations

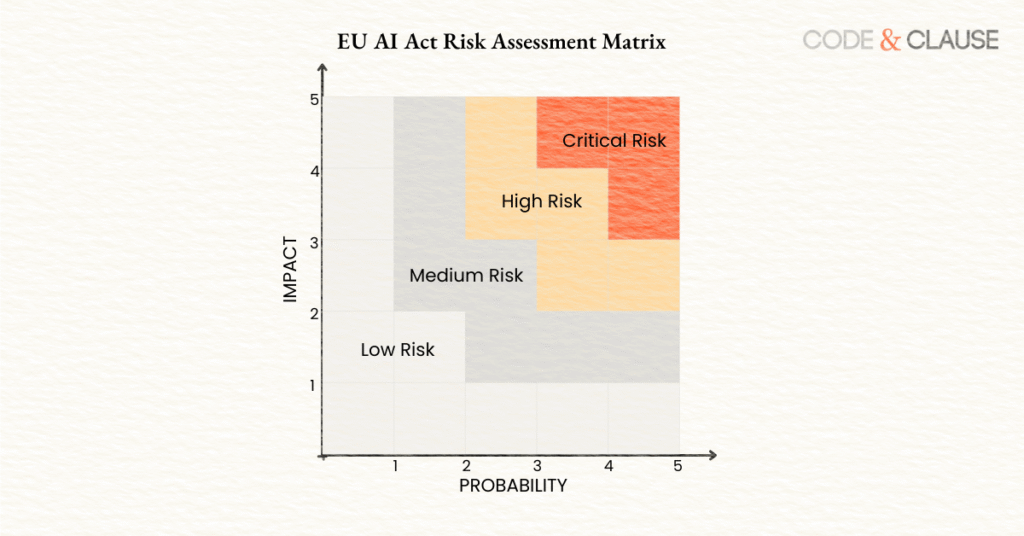

Impact and Probability Methodology:

Develop evidence-based scoring systems:

Impact Assessment (1-5 scale):

- Severity of potential harm to individuals

- Scope of affected population

- Reversibility of negative outcomes

- Duration of impact

Probability Assessment (1-5 scale):

- Historical incident rates for similar systems

- Technical testing and validation results

- Operational monitoring data

- Expert judgment for novel risks

Risk Score = Impact × Probability, giving a 1-25 scale for prioritization.
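
The scoring rule above can be encoded directly so that prioritization is reproducible across reviewers. A minimal Python sketch; the `Risk` type is hypothetical, and the priority bands (high at 15 or more, medium at 8 or more) are illustrative assumptions, not values from the regulation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Risk:
    risk_id: str
    description: str
    impact: int       # 1 (minor) to 5 (severe)
    probability: int  # 1 (rare) to 5 (almost certain)

    def __post_init__(self):
        for name in ("impact", "probability"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Risk Score = Impact x Probability, on a 1-25 scale
        return self.impact * self.probability

    @property
    def priority(self) -> str:
        # Illustrative bands (assumption): calibrate to your risk policy
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(register: list[Risk]) -> list[Risk]:
    """Highest score first; equal scores keep their register order."""
    return sorted(register, key=lambda r: r.score, reverse=True)


register = [
    Risk("R1", "Model drift degrades credit decisions", impact=4, probability=3),
    Risk("R2", "Training data under-represents young applicants", impact=4, probability=4),
    Risk("R3", "Logging outage loses audit trail", impact=2, probability=2),
]
for risk in prioritize(register):
    print(risk.risk_id, risk.score, risk.priority)
# R2 16 high
# R1 12 medium
# R3 4 low
```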

Control Implementation and Validation:

For each identified risk, document:

- Specific technical controls implemented (preventive, detective, corrective)

- Control implementation evidence (code, configurations, procedures)

- Testing results demonstrating control effectiveness per Article 9(6)

- Monitoring procedures for ongoing control performance

- Residual risk assessment after control implementation

Continuous Risk Management:

Establish systematic procedures for:

- Quarterly risk assessment reviews incorporating operational data

- Risk reassessment triggered by system modifications

- Incident-driven risk analysis and assessment updates

- Annual comprehensive risk management system audit
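
The reassessment triggers above can be checked by one function run from a scheduler. A minimal Python sketch; `RiskState` and its fields are hypothetical, and the 91-day and 365-day intervals approximate the quarterly and annual cadences listed.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

QUARTER = timedelta(days=91)  # approximates the quarterly review
YEAR = timedelta(days=365)    # annual risk management system audit


@dataclass
class RiskState:
    last_review: date
    last_audit: date
    modifications_since_review: int = 0
    incidents_since_review: int = 0


def reassessment_reasons(state: RiskState, today: date) -> list[str]:
    """Every reason a reassessment is due; an empty list means none."""
    reasons = []
    if today - state.last_review >= QUARTER:
        reasons.append("quarterly review overdue")
    if state.modifications_since_review:
        reasons.append("system modified since last review")
    if state.incidents_since_review:
        reasons.append("incident since last review")
    if today - state.last_audit >= YEAR:
        reasons.append("annual audit overdue")
    return reasons


state = RiskState(last_review=date(2025, 7, 1), last_audit=date(2025, 1, 10),
                  modifications_since_review=1)
print(reassessment_reasons(state, date(2025, 9, 15)))
# ['system modified since last review']
```

Returning all reasons, rather than a single yes/no, gives the review record its rationale for free.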

Organizations should reference our comprehensive risk assessment implementation guide for detailed frameworks, templates, and systematic implementation procedures.

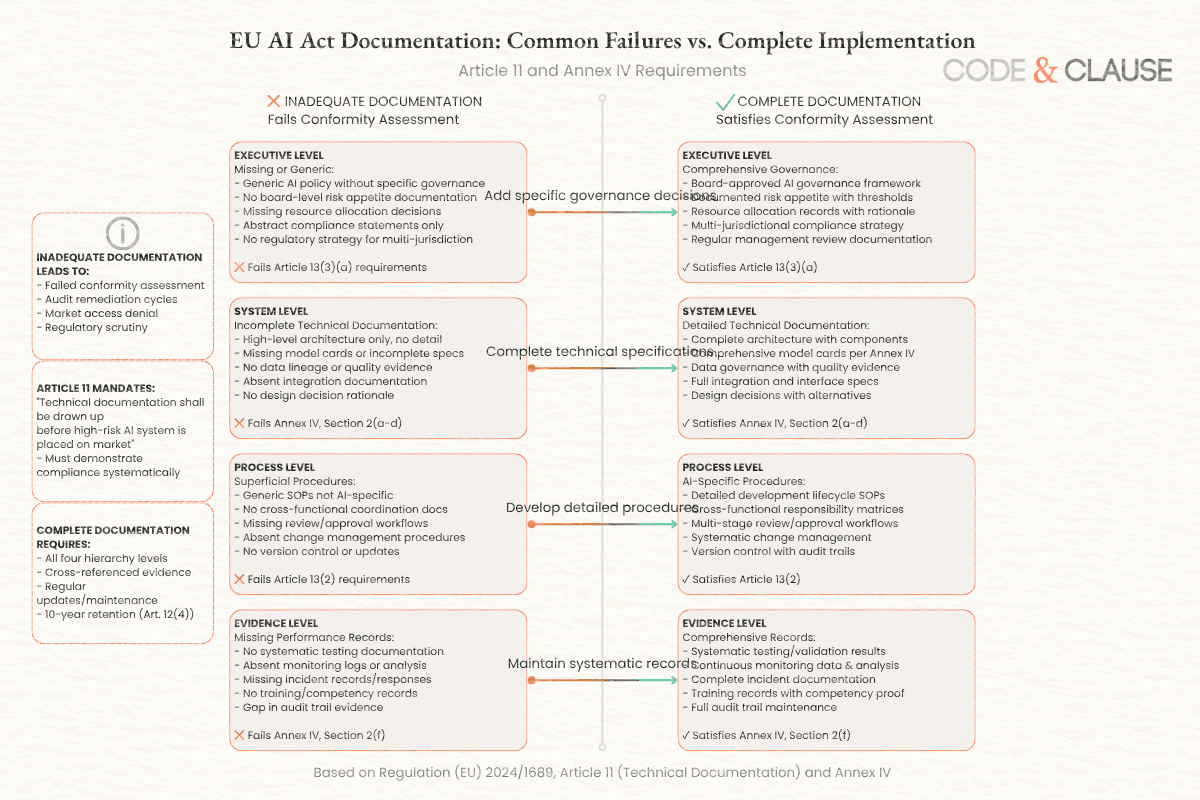

Mistake #3: Inadequate Technical Documentation

The Documentation Deficiency

Article 11 technical documentation requirements for high-risk AI systems represent one of the most comprehensive regulatory documentation obligations in EU product regulation. Organizations consistently underestimate documentation scope, detail, and maintenance requirements, producing documentation that fails conformity assessment scrutiny.

Annex IV specifies the minimum technical documentation content, including the system's general description, design specifications and architecture, data requirements, validation and testing procedures, the risk management system, and the post-market monitoring plan¹². However, the regulation’s principle-based approach requires organizations to determine what constitutes “appropriate” documentation for their specific systems, creating interpretation challenges.

Common Documentation Failures

Incomplete System Architecture Documentation: Organizations provide high-level architecture descriptions without detailed component specifications, integration documentation, or technical decision rationale. Annex IV requires a general description of the AI system (Section 1), design specifications including key design choices with their rationale and assumptions (Section 2(b)), and a description of the system architecture (Section 2(c))¹³.

Absent Model Card Information: AI model documentation lacks comprehensive performance characteristics, limitations, intended use cases, and validation results. Conformity assessments increasingly scrutinize model cards as primary evidence of system capabilities and constraints.

Missing Data Governance Evidence: While organizations document training data characteristics, they fail to provide evidence of data quality validation, bias assessment procedures, data lineage tracking, and ongoing data governance per Article 10 requirements¹⁴.

Static Documentation Without Version Control: Documentation represents system state at initial deployment without systematic update procedures reflecting system modifications, performance monitoring findings, or operational changes.

No Cross-Reference to Risk Management: Technical documentation exists separately from risk assessment documentation, preventing auditors from verifying that identified risks have corresponding technical mitigations implemented.

Documentation Structure Framework

Organize documentation hierarchically so that it covers Article 11 and Annex IV:

Level 1: Executive Documentation

- AI governance policy and framework

- Quality management system overview

- Risk management approach

- Regulatory compliance strategy

Level 2: System Documentation

- Complete system architecture with component specifications

- AI model technical specifications and model cards

- Data governance framework and procedures

- Integration architecture and interface specifications

Level 3: Process Documentation

- Development lifecycle procedures

- Testing and validation procedures

- Deployment and monitoring procedures

- Incident response and corrective action procedures

Level 4: Evidence Documentation

- Testing and validation results

- Performance monitoring data

- Incident reports and resolutions

- Training records and competency assessments

Article 11 Documentation Checklist

Ensure documentation addresses all Annex IV requirements:

General Description (Annex IV, Section 1):

- Intended purpose and use cases with examples

- Provider identification and contact information

- System versions and configuration management

- Relationship to other AI systems or products

System Design and Architecture (Annex IV, Section 2(b)-(c)):

- Complete system architecture diagrams

- Component specifications and interactions

- Hardware requirements and dependencies

- Software architecture and frameworks

- Decision-making logic and algorithms

Data and Data Governance (Annex IV, Section 2(d)):

- Training data characteristics and sources per Article 10(2)(b) and 10(3)

- Data preprocessing and transformation procedures

- Validation and testing data descriptions

- Data quality measures and validation procedures

- Bias assessment procedures and results per Article 10(2)(f)

Model Specifications (Annex IV, Sections 2(a)-(b), 3 and 4):

- AI model type and learning approach

- Model architecture and configuration

- Training procedures and hyperparameters

- Performance metrics and evaluation results

- Known limitations and failure modes

Risk Management Documentation (Annex IV, Section 5):

- Risk management system description per Article 9

- Identified risks with impact and probability assessment

- Implemented mitigation measures

- Residual risk evaluation

- Risk monitoring procedures

Testing and Validation (Annex IV, Section 2(g)):

- Testing procedures and protocols

- Validation results and performance metrics

- Conformity with accuracy, robustness, cybersecurity per Article 15

- Test records for pre-determined changes (Annex IV, Section 2(f)-(g); Article 13(3)(c))

Quality Management System (Article 17, kept alongside the technical documentation under Article 18):

- Quality management procedures per Article 17

- Compliance monitoring and assessment

- Corrective action procedures

- Management review processes

Documentation Maintenance Procedures

Establish systematic documentation currency:

Regular Review Cycles:

- Monthly operational documentation updates

- Quarterly technical documentation review

- Annual comprehensive documentation audit

Change-Triggered Updates:

- System modification documentation within 30 days

- Regulatory change incorporation within 60 days

- Incident-driven documentation updates immediately

Version Control Requirements:

- Semantic versioning for all documentation

- Change logs with rationale and approval

- Historical version retention for 10 years after the system is placed on the market or put into service, per Article 18(1)¹⁵
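
The change-triggered deadlines, versioning, and retention rules above can be computed instead of tracked by hand. A minimal Python sketch; the 30-day, 60-day, and immediate deadlines are this article's recommended internal targets rather than statutory deadlines, `DocVersion` and the change-type keys are hypothetical, and it assumes the ten-year retention period runs from the date the system is placed on the market or put into service.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Internal update targets recommended above (not statutory deadlines).
UPDATE_DEADLINES = {
    "system_modification": timedelta(days=30),
    "regulatory_change": timedelta(days=60),
    "incident": timedelta(days=0),  # "immediately"
}


@dataclass(frozen=True)
class DocVersion:
    version: str  # semantic version, e.g. "2.1.0"
    rationale: str
    approved_by: str
    released: date


def bump(version: str, part: str) -> str:
    """Increment a MAJOR.MINOR.PATCH version string."""
    major, minor, patch = (int(x) for x in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part}")


def update_due(change_type: str, detected: date) -> date:
    return detected + UPDATE_DEADLINES[change_type]


def retain_until(placed_on_market: date, years: int = 10) -> date:
    """Keep every historical version until at least this date."""
    try:
        return placed_on_market.replace(year=placed_on_market.year + years)
    except ValueError:  # 29 February with a non-leap target year
        return placed_on_market.replace(year=placed_on_market.year + years, day=28)


history = [DocVersion("1.0.0", "Initial release", "QA lead", date(2026, 8, 2))]
history.append(DocVersion(bump("1.0.0", "minor"), "New input feature documented",
                          "QA lead", date(2026, 10, 1)))
print(history[-1].version)                                  # 1.1.0
print(update_due("system_modification", date(2026, 9, 5)))  # 2026-10-05
print(retain_until(date(2026, 8, 2)))                       # 2036-08-02
```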

Organizations should reference our complete technical documentation guide for comprehensive documentation frameworks, templates, and systematic implementation procedures.

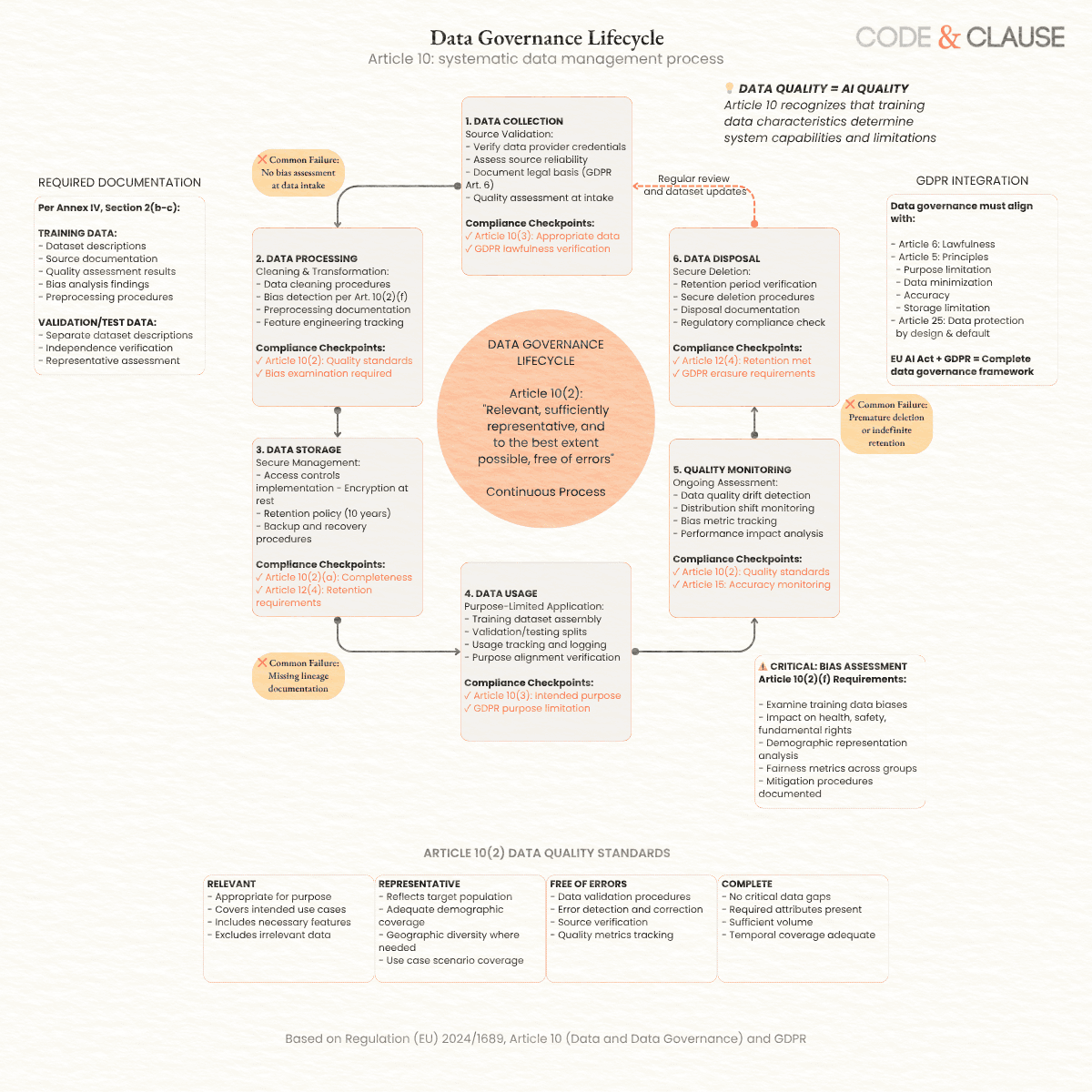

Mistake #4: Inadequate Data Governance and Bias Mitigation Implementation

The Data Governance Gap

Article 10 establishes comprehensive data governance requirements for training, validation, and testing datasets used in high-risk AI systems¹⁶. Organizations frequently underestimate these requirements, treating data governance as a one-time training data collection activity rather than implementing systematic data lifecycle management.

The regulation’s emphasis on data quality, representativeness, bias assessment, and statistical properties reflects the recognition that AI system performance and fairness fundamentally depend on training data characteristics. Conformity assessments scrutinize data governance as a primary indicator of whether organizations have implemented adequate measures to prevent discrimination and ensure system reliability.

Common Data Governance Deficiencies

Absent Data Quality Frameworks: Organizations lack systematic data quality measurement, validation, and maintenance procedures. Article 10(3) requires training, validation, and testing data sets to be “relevant, sufficiently representative, and to the best extent possible, free of errors and complete in view of the intended purpose”¹⁷.

Superficial Bias Assessment: Data bias assessment consists of reviewing demographic distributions without analyzing whether training data enables fair performance across all relevant demographic groups. Article 10(2)(f) requires examining “possible biases that are likely to affect the health and safety of persons, have a negative impact on fundamental rights or lead to discrimination”¹⁸.

Missing Data Lineage Documentation: Organizations cannot trace training data from original sources through preprocessing, transformation, and integration into training datasets. This prevents validation of data quality claims and impact analysis when data quality issues emerge.

No Ongoing Data Quality Monitoring: Data governance focuses on initial training data without establishing procedures for monitoring data quality degradation, distribution shift, or emerging bias patterns in production data.

Data Governance Framework Implementation

Implement systematic data governance per Article 10 requirements:

Data Collection and Acquisition:

Source Validation:

- Document all data sources with reliability assessment

- Verify data collection legal basis per GDPR Article 6¹⁹

- Establish data provider agreements with quality requirements

- Implement source reputation and credibility evaluation

Collection Procedures:

- Define data collection methodologies and protocols

- Establish quality control measures during collection

- Document consent mechanisms where required

- Implement collection audit trails and logging

Data Processing and Preparation:

Preprocessing Documentation:

- Document all data cleaning procedures with rationale

- Specify data transformation and normalization methods

- Define feature engineering procedures and logic

- Maintain preprocessing code with version control

Quality Validation:

- Implement automated data quality checks against the Article 10(3) quality criteria

- Define quality metrics: completeness, accuracy, consistency, timeliness

- Establish quality thresholds and acceptance criteria

- Document quality validation results and corrective actions
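
Three of the four quality metrics listed can be measured without ground truth; accuracy needs a labelled reference sample and is omitted here. A minimal Python sketch over a list of record dictionaries; the field names, allowed values, one-year freshness window, and thresholds are illustrative assumptions.

```python
from datetime import date, timedelta


def completeness(records, fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(fields)
    present = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return present / total if total else 1.0


def consistency(records, field, allowed):
    """Share of non-missing values that fall inside the allowed domain."""
    values = [r[field] for r in records if r.get(field) is not None]
    return sum(v in allowed for v in values) / len(values) if values else 1.0


def timeliness(records, field, today, max_age):
    """Share of dated records refreshed within max_age of today."""
    dates = [r[field] for r in records if r.get(field) is not None]
    return sum(today - d <= max_age for d in dates) / len(dates) if dates else 1.0


# Illustrative acceptance thresholds (assumptions); set per dataset.
THRESHOLDS = {"completeness": 0.98, "consistency": 0.99, "timeliness": 0.95}


def quality_report(records, today):
    scores = {
        "completeness": completeness(records, ["age", "income", "employment"]),
        "consistency": consistency(records, "employment",
                                   {"employed", "self_employed", "unemployed"}),
        "timeliness": timeliness(records, "updated", today, timedelta(days=365)),
    }
    failures = [name for name, score in scores.items() if score < THRESHOLDS[name]]
    return scores, failures


records = [
    {"age": 34, "income": 52000, "employment": "employed", "updated": date(2025, 6, 1)},
    # Missing income, out-of-domain employment value, stale update date
    {"age": 51, "income": None, "employment": "retired", "updated": date(2023, 2, 1)},
]
scores, failures = quality_report(records, today=date(2025, 10, 1))
print(failures)  # ['completeness', 'consistency', 'timeliness']
```

The returned scores and failures are the kind of "quality validation results" the list asks organizations to document, and a failure list can feed directly into the corrective-action record.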

Bias Assessment and Mitigation:

Systematic Bias Analysis per Article 10(2)(f):

Representation Analysis:

- Evaluate demographic group representation in training data

- Compare data distributions to target population distributions

- Identify underrepresented groups and data gaps

- Document representativeness assessment methodology
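
The distribution comparison above reduces to a ratio of shares per group. A minimal Python sketch; the age bands, the target shares (which in practice would come from census or customer data for the intended population), and the 0.8 cut-off for flagging under-representation are illustrative assumptions.

```python
from collections import Counter


def representation_gaps(groups, target_shares, min_ratio=0.8):
    """Flag groups whose share of the data is below min_ratio x target share.

    Returns {group: (data_share, target_share, ratio)} for flagged groups.
    """
    if not groups:
        raise ValueError("no records to assess")
    counts = Counter(groups)
    n = len(groups)
    gaps = {}
    for group, target in target_shares.items():
        share = counts[group] / n
        ratio = share / target
        if ratio < min_ratio:
            gaps[group] = (round(share, 3), target, round(ratio, 2))
    return gaps


# Age band of each training record (toy data) vs. assumed population shares
training_groups = ["18-29"] * 120 + ["30-49"] * 520 + ["50+"] * 360
target = {"18-29": 0.22, "30-49": 0.40, "50+": 0.38}
print(representation_gaps(training_groups, target))
# {'18-29': (0.12, 0.22, 0.55)}
```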

Performance Fairness Evaluation:

- Test model performance across demographic groups

- Measure fairness metrics: demographic parity, equalized odds, equal opportunity

- Identify performance disparities requiring mitigation

- Document fairness evaluation procedures and results
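
The three fairness metrics named above compare selection rates and error rates across groups. A minimal pure-Python sketch for a binary classifier and two groups; it reports each metric as an absolute between-group difference (0 means parity), which is one common convention. Libraries such as Fairlearn provide tested implementations, and the acceptable tolerance for each metric is a policy decision this sketch does not make.

```python
def rates(y_true, y_pred):
    """Selection rate, true positive rate, and false positive rate."""
    pos = [p for t, p in zip(y_true, y_pred) if t == 1]
    neg = [p for t, p in zip(y_true, y_pred) if t == 0]
    selection = sum(y_pred) / len(y_pred)
    tpr = sum(pos) / len(pos) if pos else float("nan")
    fpr = sum(neg) / len(neg) if neg else float("nan")
    return selection, tpr, fpr


def fairness_report(y_true, y_pred, group, a, b):
    """Absolute between-group differences for groups a and b (0 = parity)."""
    def subset(g):
        idx = [i for i, x in enumerate(group) if x == g]
        return [y_true[i] for i in idx], [y_pred[i] for i in idx]

    sel_a, tpr_a, fpr_a = rates(*subset(a))
    sel_b, tpr_b, fpr_b = rates(*subset(b))
    return {
        "demographic_parity_diff": abs(sel_a - sel_b),
        "equal_opportunity_diff": abs(tpr_a - tpr_b),
        "equalized_odds_diff": max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)),
    }


# Toy data: 1 = creditworthy (y_true) / approved (y_pred)
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = fairness_report(y_true, y_pred, group, "A", "B")
print({k: round(v, 2) for k, v in report.items()})
# {'demographic_parity_diff': 0.25, 'equal_opportunity_diff': 0.0, 'equalized_odds_diff': 0.5}
```

The toy data shows why several metrics are needed: both groups have the same true positive rate, so equal opportunity holds, yet group A receives more false approvals, which equalized odds detects.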

Bias Mitigation Strategies:

- Data augmentation to address underrepresentation

- Resampling techniques for distribution balancing

- Fairness-aware model training procedures

- Post-processing bias mitigation where necessary
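
Of the mitigation strategies listed, resampling is the simplest to show. A minimal Python sketch of random oversampling, one resampling variant among several: records from smaller groups are duplicated at random until each group matches the largest. Duplicated records can encourage overfitting, so the result should be re-tested with the fairness evaluation described above.

```python
import random
from collections import defaultdict


def oversample_to_balance(records, group_key, seed=0):
    """Duplicate random records so every group reaches the largest group's size."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    by_group = defaultdict(list)
    for r in records:
        by_group[r[group_key]].append(r)
    target = max(len(rs) for rs in by_group.values())
    balanced = []
    for rs in by_group.values():
        balanced.extend(rs)
        balanced.extend(rng.choices(rs, k=target - len(rs)))  # sample with replacement
    return balanced


data = [{"group": "A", "x": i} for i in range(6)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_to_balance(data, "group")
print({g: sum(r["group"] == g for r in balanced) for g in ("A", "B")})
# {'A': 6, 'B': 6}
```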

Data Governance Processes:

Data Lineage Tracking:

- Document data flow from sources through preprocessing to model training

- Maintain transformation history with version control

- Enable impact analysis when data quality issues emerge

- Support reproducibility for validation and auditing
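
Lineage is easier to verify when every dataset version is identified by a content hash and every transformation step records its input and output hashes. A minimal standard-library Python sketch; `LineageStep`, the step functions, and the commit string are hypothetical, and dedicated data-versioning tools serve the same purpose at scale.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass


def dataset_hash(rows: list[dict]) -> str:
    """Deterministic SHA-256 fingerprint of JSON-serializable rows."""
    payload = json.dumps(rows, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class LineageStep:
    step: str
    code_version: str  # hypothetical commit id of the preprocessing code
    input_hash: str
    output_hash: str


def run_step(name, code_version, rows, fn, log):
    """Apply fn to rows and append a lineage entry to log."""
    out = fn(rows)
    log.append(LineageStep(name, code_version, dataset_hash(rows), dataset_hash(out)))
    return out


def upstream_of(log, output_hash):
    """Walk the log backwards to list the steps that produced output_hash."""
    chain, current = [], output_hash
    for entry in reversed(log):
        if entry.output_hash == current:
            chain.append(entry.step)
            current = entry.input_hash
    return list(reversed(chain))


def deduplicate(rows):
    seen, out = set(), []
    for r in rows:
        key = json.dumps(r, sort_keys=True)
        if key not in seen:
            seen.add(key)
            out.append(r)
    return out


def drop_missing(rows):
    return [r for r in rows if None not in r.values()]


log: list[LineageStep] = []
raw = [{"id": 1, "income": 52000}, {"id": 1, "income": 52000}, {"id": 2, "income": None}]
clean = run_step("deduplicate", "a1b2c3", raw, deduplicate, log)
clean = run_step("drop_missing", "a1b2c3", clean, drop_missing, log)
print(upstream_of(log, dataset_hash(clean)))  # ['deduplicate', 'drop_missing']
```

Because the hash covers content rather than file names, a silently edited source file produces a new hash, which is what makes impact analysis possible when a data quality issue surfaces later.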

Ongoing Data Quality Management:

- Implement continuous data quality monitoring in production

- Establish data quality review cycles (monthly minimum)

- Define data quality incident response procedures

- Maintain data quality metrics trending and analysis

Data Retention and Disposal:

- Implement retention schedules aligned with Article 18 (documentation kept for ten years) and Article 19 (automatically generated logs kept for at least six months)

- Define secure data disposal procedures

- Document retention and disposal activities

- Maintain disposal records for audit purposes

Data Governance Documentation Requirements

Maintain comprehensive data governance evidence per Annex IV, Section 2(b-c):

Training Data Documentation:

- Dataset descriptions: sources, size, characteristics, time periods

- Data collection procedures and methodologies

- Data quality assessment results and validation evidence

- Bias assessment procedures and findings

- Preprocessing and transformation documentation

- Representativeness analysis and validation

Validation and Testing Data Documentation:

- Separate validation/testing dataset descriptions

- Independence verification from training data

- Quality and representativeness assessment

- Usage procedures and results

Data Management Procedures:

- Data governance roles and responsibilities

- Data quality standards and measurement procedures

- Data lifecycle management procedures

- Data security and access control measures

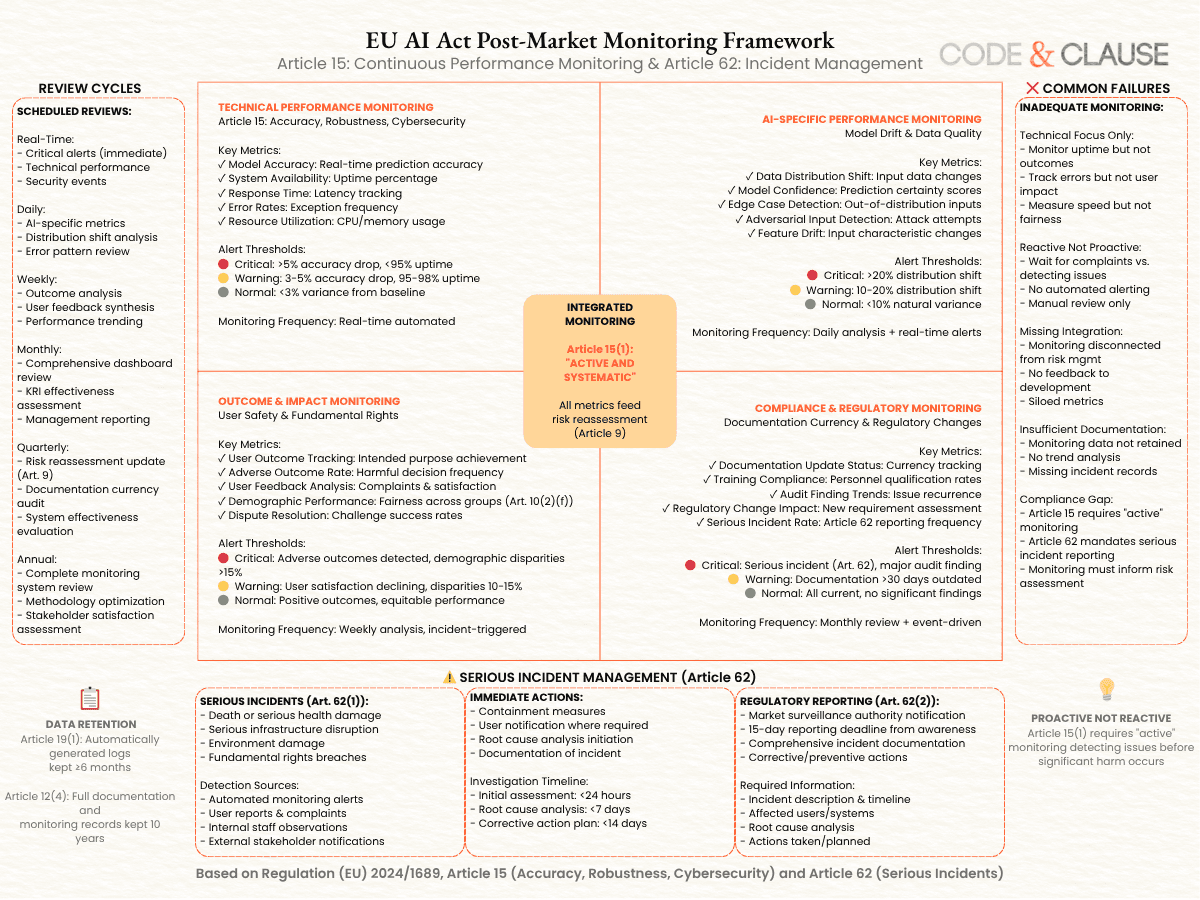

Mistake #5: Absent Post-Market Monitoring System

The Monitoring Oversight

Article 72 requires providers of high-risk AI systems to establish and document a post-market monitoring system, and Article 73 requires them to report and investigate serious incidents throughout the system's operational lifetime²⁰. Organizations frequently treat deployment as compliance completion, failing to implement systematic monitoring procedures that meet these requirements.

Post-market monitoring serves dual purposes: ensuring continued system performance and safety, and providing early warning of emerging risks requiring corrective action. Conformity assessments increasingly scrutinize whether organizations have implemented effective monitoring systems capable of detecting system degradation, performance drift, or harmful outcomes.

Common Monitoring Deficiencies

Reactive Rather Than Proactive Monitoring: Organizations respond to identified problems without implementing systematic monitoring that detects issues before significant harm occurs. Article 72(2) requires the monitoring system to “actively and systematically collect, document and analyse relevant data” on system performance, not merely to respond to problems²¹.

Technical Metrics Without Outcome Monitoring: Monitoring focuses on system uptime, latency, and technical performance without tracking whether the AI system achieves intended outcomes and avoids harmful impacts on users.

Missing Incident Reporting Procedures: Organizations lack systematic procedures for identifying, documenting, and reporting serious incidents per Article 73 requirements²². This creates regulatory exposure and prevents learning from operational issues.

No Systematic Performance Review: Monitoring data accumulates without regular review cycles translating monitoring findings into system improvements, documentation updates, or risk reassessment.

Post-Market Monitoring Framework

Implement comprehensive monitoring per Article 72 requirements:

Monitoring System Architecture:

Technical Performance Monitoring:

- System availability and uptime tracking

- Response time and latency measurement

- Error rates and exception monitoring

- Resource utilization and capacity tracking

- Integration point performance and reliability

AI-Specific Performance Monitoring:

- Model prediction accuracy on production data

- Distribution shift detection for input data

- Model confidence score distributions

- Edge case detection and handling

- Adversarial input identification
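
Distribution shift detection is often implemented with the population stability index (PSI), which compares the binned distribution of a feature in production with a reference sample. A minimal Python sketch; PSI is one technique among several (Kolmogorov-Smirnov tests and other divergence measures are common alternatives), bins are cut at reference-data deciles, and the 0.1 and 0.25 alert levels are a widely used rule of thumb, not a regulatory threshold.

```python
import math
import random
from bisect import bisect_right


def quantile_edges(reference, n_bins=10):
    """Interior bin edges at reference-data quantiles."""
    s = sorted(reference)
    return [s[int(len(s) * k / n_bins)] for k in range(1, n_bins)]


def bin_shares(values, edges, eps=1e-6):
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    n = len(values)
    return [max(c / n, eps) for c in counts]  # eps avoids log(0) for empty bins


def psi(reference, production, n_bins=10):
    """Population stability index: sum of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(reference, n_bins)
    expected = bin_shares(reference, edges)
    actual = bin_shares(production, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_level(value):
    # Common rule of thumb (assumption), not a regulatory threshold
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"


rng = random.Random(42)
reference = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(0.8, 1) for _ in range(5000)]
print(drift_level(psi(reference, same)))     # stable
print(drift_level(psi(reference, shifted)))  # significant shift
```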

Outcome and Impact Monitoring:

- User outcome tracking for intended purposes

- Adverse outcome identification and analysis

- User feedback collection and analysis

- Complaint and dispute monitoring

- Demographic performance disparities per Article 10(2)(f)

Key Risk Indicator (KRI) Framework:

Define measurable indicators signaling risk materialization:

Technical KRIs:

- Model accuracy degradation beyond defined thresholds

- Data distribution shift exceeding established baselines

- System error rates or unavailability exceeding targets

Business and Compliance KRIs:

- User satisfaction score declines or complaint rate increases

- Adverse outcome rate changes or regulatory inquiry frequency

- Documentation currency gaps or audit finding trends
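
KRIs become actionable once each has a baseline, a direction, and a tolerance that triggers escalation. A minimal Python sketch; the KRI names, baselines, and tolerances are illustrative assumptions. It treats a missing measurement as a breach, on the reasoning that a KRI that cannot be measured is itself a monitoring failure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KRI:
    name: str
    baseline: float
    tolerance: float       # allowed deviation before an alert
    higher_is_worse: bool  # direction in which deviation signals risk

    def breached(self, value: float) -> bool:
        deviation = value - self.baseline
        if not self.higher_is_worse:
            deviation = -deviation
        return deviation > self.tolerance


# Illustrative KRIs; baselines and tolerances are assumptions.
KRIS = [
    KRI("model_accuracy", baseline=0.91, tolerance=0.03, higher_is_worse=False),
    KRI("input_psi", baseline=0.0, tolerance=0.25, higher_is_worse=True),
    KRI("error_rate", baseline=0.002, tolerance=0.003, higher_is_worse=True),
    KRI("complaints_per_1k", baseline=1.5, tolerance=1.0, higher_is_worse=True),
]


def evaluate(kris, measurements):
    """Names of breached KRIs; a missing measurement counts as a breach."""
    alerts = []
    for kri in kris:
        value = measurements.get(kri.name)
        if value is None or kri.breached(value):
            alerts.append(kri.name)
    return alerts


latest = {"model_accuracy": 0.86, "input_psi": 0.12, "error_rate": 0.004}
print(evaluate(KRIS, latest))  # ['model_accuracy', 'complaints_per_1k']
```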

Incident Management Procedures:

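Serious Incident Identification per Article 73:

Article 3(49) defines a serious incident as an incident or malfunctioning of an AI system that directly or indirectly leads to death or serious harm to a person's health, a serious and irreversible disruption of critical infrastructure, an infringement of obligations under Union law intended to protect fundamental rights, or serious harm to property or the environment²³. Establish systematic identification: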

Incident Detection:

- Automated monitoring alert triggers

- User report evaluation procedures

- Internal staff incident reporting channels

- External stakeholder notification assessment

Incident Classification:

- Severity assessment using defined criteria

- Fundamental rights impact evaluation

- Causality analysis connecting incident to AI system

- Regulatory reporting threshold determination

Incident Response:

- Immediate containment measures implementation

- Root cause analysis procedures

- Corrective action planning and implementation

- Market surveillance authority notification within the Article 73 timelines
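
Reporting deadlines are easiest to meet when they are computed the moment an incident is classified. A minimal Python sketch based on our reading of Article 73(2)-(4) of Regulation (EU) 2024/1689 (numbered Article 62 in earlier drafts): no later than 15 days after becoming aware in general, 10 days where a person has died, and 2 days for a widespread infringement or a serious and irreversible disruption of critical infrastructure. In every case the report is due immediately once a causal link is established (or, for a death, suspected), so these dates are outer limits, not targets. The category labels are hypothetical, the limits are treated as plain calendar days without modelling any weekend or holiday rules, and the figures should be confirmed against the official text.

```python
from __future__ import annotations

from datetime import date, timedelta
from enum import Enum


class IncidentType(Enum):
    """Hypothetical labels for the reporting categories in Article 73."""
    DEATH = "death"
    CRITICAL_INFRASTRUCTURE = "critical_infrastructure"  # serious, irreversible disruption
    WIDESPREAD_INFRINGEMENT = "widespread_infringement"
    OTHER_SERIOUS = "other_serious"  # any other serious incident


# Outer limits in days after becoming aware, as read from Article 73(2)-(4).
# Reports are due immediately once a causal link is established; these are caps.
MAX_DAYS = {
    IncidentType.CRITICAL_INFRASTRUCTURE: 2,
    IncidentType.WIDESPREAD_INFRINGEMENT: 2,
    IncidentType.DEATH: 10,
    IncidentType.OTHER_SERIOUS: 15,
}


def report_deadline(types: set[IncidentType], aware_on: date) -> date:
    """Latest permissible report date; the shortest applicable limit wins."""
    if not types:
        raise ValueError("no serious-incident category assigned")
    return aware_on + timedelta(days=min(MAX_DAYS[t] for t in types))


print(report_deadline({IncidentType.OTHER_SERIOUS}, date(2026, 9, 1)))  # 2026-09-16
print(report_deadline({IncidentType.DEATH, IncidentType.CRITICAL_INFRASTRUCTURE},
                      date(2026, 9, 1)))  # 2026-09-03
```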

Documentation Requirements:

- Comprehensive incident records

- Investigation findings and analysis

- Corrective actions taken

- Preventive measures implemented

- Authority communication records

Monitoring Review and Action:

Regular Review Cycles:

- Weekly monitoring data review for critical systems

- Monthly comprehensive monitoring assessment

- Quarterly trend analysis and pattern identification

- Annual monitoring system effectiveness evaluation

Review Outputs:

- Monitoring findings summary with trends

- Risk reassessment based on operational experience

- System modification recommendations

- Documentation update requirements

- Training and process improvement needs

Corrective and Preventive Actions:

- Issue prioritization based on severity and frequency

- Corrective action planning for identified problems

- Preventive action implementation addressing systemic issues

- Effectiveness validation for implemented actions

Monitoring Documentation

Maintain comprehensive monitoring evidence:

Monitoring System Documentation:

- Monitoring architecture and procedures, including the post-market monitoring plan required by Article 72(3)

- KRI definitions with measurement methodologies

- Alert threshold definitions and escalation procedures

- Review cycle schedules and responsibilities

Monitoring Records:

- Performance data trending and analysis

- Incident reports and investigations

- Review meeting minutes and decisions

- Corrective action implementation tracking

Reporting Documentation:

- Serious incident reports to authorities per Article 73

- Market surveillance coordination records

- Management reporting and escalation documentation

Conclusion: Correcting EU AI Act Mistakes and Avoiding Compliance Failures

The five critical mistakes outlined (incorrect classification, superficial risk assessment, inadequate documentation, weak data governance and bias mitigation, and absent monitoring) share a common root cause: treating EU AI Act compliance as a documentation project rather than implementing systematic quality and risk management throughout the AI system lifecycle.

Note on General-Purpose AI Models: Providers of general-purpose AI models face additional obligations under Article 53, including technical documentation, information for downstream providers, a copyright policy, and a public summary of training content. Providers of models with systemic risk must also meet Article 55 obligations such as model evaluation with adversarial testing²⁵. Extend monitoring frameworks to address these GPAI-specific requirements where applicable.

Global Compliance Context: While this article focuses on EU AI Act requirements, organizations operating globally should note alignment opportunities with complementary frameworks including the US NIST AI Risk Management Framework and UK sectoral AI governance approaches. Systematic implementation of EU AI Act requirements often satisfies core elements of these frameworks, enabling efficient multi-jurisdictional compliance.

Successful compliance requires:

Systematic Implementation: Follow structured frameworks addressing all regulatory requirements comprehensively rather than focusing on perceived high-priority areas while neglecting others.

Cross-Functional Coordination: Integrate legal, technical, business, and risk management expertise throughout implementation rather than delegating compliance to a single function.

Continuous Process Integration: Embed compliance requirements into development, deployment, and operational processes rather than creating parallel compliance documentation divorced from actual practices.

Evidence-Based Validation: Demonstrate compliance through objective evidence of systematic processes producing measurable outcomes rather than asserting compliance through policy statements.

Ongoing Commitment: Maintain compliance through regular reviews, updates, and improvements rather than treating initial implementation as completion.

Organizations approaching the August 2026 high-risk system requirements face implementation urgency. However, rushing implementation without a systematic approach creates compliance gaps that require costly remediation during conformity assessment. Investing in comprehensive implementation now helps prevent audit failures, regulatory scrutiny, and potential market access denial later.


Immediate Next Steps

Conduct Gap Assessment: Systematically evaluate current state against each mistake category:

- Review risk classification documentation and rationale

- Assess risk assessment comprehensiveness and continuity

- Audit technical documentation completeness per Annex IV

- Evaluate data governance procedures and evidence

- Examine post-market monitoring system implementation
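One way to make the gap assessment repeatable is to track each review area as structured data rather than in ad hoc notes. The following is a minimal Python sketch under stated assumptions: the `GapItem` class, the category labels, and the `open_gaps` helper are hypothetical illustrations, not part of any official tool or template, and a real assessment would use far more granular checks per category.

```python
from dataclasses import dataclass


@dataclass
class GapItem:
    """One hypothetical evidence check within a mistake category."""
    category: str              # hypothetical label for one of the five review areas
    check: str                 # what the reviewer looks for
    evidence_found: bool = False
    notes: str = ""


# Hypothetical starter checklist mirroring the five review areas above;
# real checklists would break each area into many specific checks.
CHECKLIST = [
    GapItem("classification", "Documented classification rationale (Art. 6, Annex III)"),
    GapItem("risk_assessment", "Risk management maintained across the lifecycle (Art. 9)"),
    GapItem("documentation", "Technical documentation covers each Annex IV section"),
    GapItem("data_governance", "Data governance procedures and evidence (Art. 10)"),
    GapItem("monitoring", "Post-market monitoring system in operation"),
]


def open_gaps(items: list[GapItem]) -> dict[str, list[str]]:
    """Group the checks that still lack evidence by category."""
    gaps: dict[str, list[str]] = {}
    for item in items:
        if not item.evidence_found:
            gaps.setdefault(item.category, []).append(item.check)
    return gaps


if __name__ == "__main__":
    CHECKLIST[0].evidence_found = True  # e.g. a classification memo was located
    for category, checks in open_gaps(CHECKLIST).items():
        print(category, checks)
```

Reviewers set `evidence_found` as they locate supporting evidence; whatever `open_gaps` still returns becomes the remediation backlog for the next step.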

Prioritize Remediation: Address gaps based on regulatory risk and implementation effort:

- Classification errors require immediate correction given cascading impacts

- Risk assessment and monitoring provide operational value beyond compliance

- Documentation and data governance enable conformity assessment success
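The prioritization rule above can be expressed as a sort order: classification gaps first, because errors there cascade into every downstream obligation, then the remaining gaps by descending regulatory risk and ascending effort. This is a minimal sketch; the 1 to 5 scoring scales and the `Gap` and `prioritize` names are hypothetical assumptions, and actual weighting should come from legal and risk review rather than a fixed formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gap:
    category: str          # hypothetical label, e.g. "classification", "documentation"
    description: str
    regulatory_risk: int   # hypothetical scale: 1 (low) to 5 (high)
    effort: int            # hypothetical scale: 1 (low) to 5 (high)


def prioritize(gaps: list[Gap]) -> list[Gap]:
    """Classification gaps first, then higher regulatory risk, then lower effort."""
    def sort_key(gap: Gap) -> tuple[int, int, int]:
        is_not_classification = 0 if gap.category == "classification" else 1
        return (is_not_classification, -gap.regulatory_risk, gap.effort)

    return sorted(gaps, key=sort_key)


if __name__ == "__main__":
    backlog = [
        Gap("documentation", "Annex IV section 9 missing", regulatory_risk=4, effort=2),
        Gap("classification", "No rationale for Annex III exclusion", regulatory_risk=3, effort=1),
        Gap("monitoring", "No incident escalation path", regulatory_risk=4, effort=3),
    ]
    print([g.category for g in prioritize(backlog)])
    # prints ['classification', 'documentation', 'monitoring']
```

Forcing classification to the top, even when its scored risk is lower, reflects the point above: a wrong classification invalidates the scope of every other remediation effort.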

Implement Systematic Frameworks: Adopt structured approaches rather than addressing issues ad hoc:

- Use classification decision frameworks with documented rationale

- Implement continuous risk management per Article 9 requirements

- Establish documentation hierarchies addressing all Annex IV elements

- Deploy comprehensive data governance covering full data lifecycle

- Build active monitoring systems with incident management integration
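A documentation hierarchy is easier to audit when each Annex IV section maps to the documents that address it. The sketch below flags sections with no mapped evidence. The section summaries are paraphrased from Annex IV of Regulation (EU) 2024/1689 and should be checked against the official text; the index format and the `missing_sections` helper are hypothetical illustrations.

```python
# Paraphrased summaries of the nine Annex IV sections of Regulation (EU) 2024/1689;
# verify the wording against the official text before relying on it.
ANNEX_IV_SECTIONS = {
    1: "General description of the AI system",
    2: "Detailed description of system elements and development process",
    3: "Monitoring, functioning and control of the AI system",
    4: "Appropriateness of the performance metrics",
    5: "Risk management system (Article 9)",
    6: "Relevant changes made through the lifecycle",
    7: "Harmonised standards applied, or other solutions adopted",
    8: "Copy of the EU declaration of conformity",
    9: "Post-market performance evaluation and monitoring plan (Article 72)",
}


def missing_sections(doc_index: dict[int, list[str]]) -> list[str]:
    """Return Annex IV sections with no mapped document.

    doc_index is a hypothetical mapping of section number -> document references.
    Sections with no entry, or an empty list, count as missing.
    """
    return [
        f"Section {num}: {title}"
        for num, title in ANNEX_IV_SECTIONS.items()
        if not doc_index.get(num)
    ]


if __name__ == "__main__":
    # Hypothetical document index for one high-risk system
    index = {
        1: ["system-overview.md"],
        2: ["architecture.md", "training-process.md"],
        5: ["risk-register.xlsx"],
    }
    for gap in missing_sections(index):
        print(gap)
```

Keeping the index under version control alongside the documents themselves also gives assessors evidence of when each section was added or updated.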

Access Implementation Resources:

- Complete Risk Classification Framework

- Risk Assessment Implementation Guide

- Technical Documentation Requirements

Get Expert Implementation Support: Organizations facing complex implementation challenges, multi-jurisdictional requirements, or accelerated timelines can access specialized consulting: Contact Us

🔒 Legal Disclaimer: This article provides general guidance based on Regulation (EU) 2024/1689 and publicly available regulatory guidance. It is not legal advice. Compliance requirements depend on specific organizational contexts, use cases, and regulatory interpretations. Always consult qualified legal counsel for compliance decisions. Last updated: October 2025.

References:

1. Regulation (EU) 2024/1689, Article 113 (Application dates)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e8583-1-1

2. European Commission, “Guidelines on Prohibited AI Practices under the AI Act,” February 2025; Notified Body Coordination Group, “EU AI Act Conformity Assessment Framework,” 2025

3. Regulation (EU) 2024/1689, Article 99 (Penalties)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e8111-1-1

4. Regulation (EU) 2024/1689, Annex III (High-risk AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-143-1

5. Regulation (EU) 2024/1689, Article 6(2) (Classification rules for high-risk AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1652-1-1

6. Regulation (EU) 2024/1689, Article 6(1)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e1652-1-1

7. European Commission, “AI Act Implementation Guidance: Risk Classification,” 2025

8. Regulation (EU) 2024/1689, Article 9 (Risk management system)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

9. Regulation (EU) 2024/1689, Article 9(2)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

10. Regulation (EU) 2024/1689, Article 9(6)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2082-1-1

11. Regulation (EU) 2024/1689, Article 15 (Accuracy, robustness and cybersecurity)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2653-1-1

12. Regulation (EU) 2024/1689, Annex IV (Technical documentation)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-146-1

13. Regulation (EU) 2024/1689, Annex IV, Section 2(a)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e38-146-1

14. Regulation (EU) 2024/1689, Article 10 (Data and data governance)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2197-1-1

15. Regulation (EU) 2024/1689, Article 12(4) (Record-keeping)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2434-1-1

16. Regulation (EU) 2024/1689, Article 10

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2197-1-1

17. Regulation (EU) 2024/1689, Article 10(2)(a)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2197-1-1

18. Regulation (EU) 2024/1689, Article 10(2)(f)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2197-1-1

19. Regulation (EU) 2016/679 (GDPR), Article 6 (Lawfulness of processing)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32016R0679#d1e1888-1-1

20. Regulation (EU) 2024/1689, Article 72 (Post-market monitoring by providers and post-market monitoring plan for high-risk AI systems)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

21. Regulation (EU) 2024/1689, Article 72(1)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

22. Regulation (EU) 2024/1689, Article 73 (Reporting of serious incidents)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

23. Regulation (EU) 2024/1689, Article 73(1)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689

24. Regulation (EU) 2024/1689, Article 14 (Human oversight)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e2575-1-1

25. Regulation (EU) 2024/1689, Article 53 (Obligations for providers of general-purpose AI models)

https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R1689#d1e4517-1-1