Most AI teams are misclassifying their systems under the EU AI Act. Here’s the definitive framework to get it right.

This practical framework, developed by our team of AI engineers and regulatory specialists, helps mid-market teams classify AI systems with confidence.

The EU AI Act’s August 2026 deadline is approaching fast. Before you can build compliant systems, your EU AI Act risk assessment must answer one critical question:

Is your AI system actually “high-risk” under the regulation?

Getting this wrong has serious consequences:

- Over-classifying → Wasted resources, slowed development.

- Under-classifying → Missed requirements, regulatory penalties.

This guide gives you a complete classification framework to make the right call.

⚠️ Note: This is general guidance only and not legal advice. See full disclaimer at the end.

Understanding the EU AI Act risk assessment is essential for accurate classification.

Why So Many Teams Get Classification Wrong

Three common misconceptions lead to misclassification:

- “If it uses AI, it’s high-risk.” ❌ Wrong. Most AI systems are minimal or limited risk.

- “We’re not in healthcare or finance, so we’re safe.” ❌ Wrong. High-risk categories cut across all industries.

- “Our lawyers will figure it out.” ❌ Dangerous. Classification requires deep technical understanding of your system.

The stakes: Non-compliance with high-risk obligations can mean fines of up to €15M or 3% of global annual turnover, whichever is higher (Article 99).

Navigating the EU AI Act Risk Assessment Process

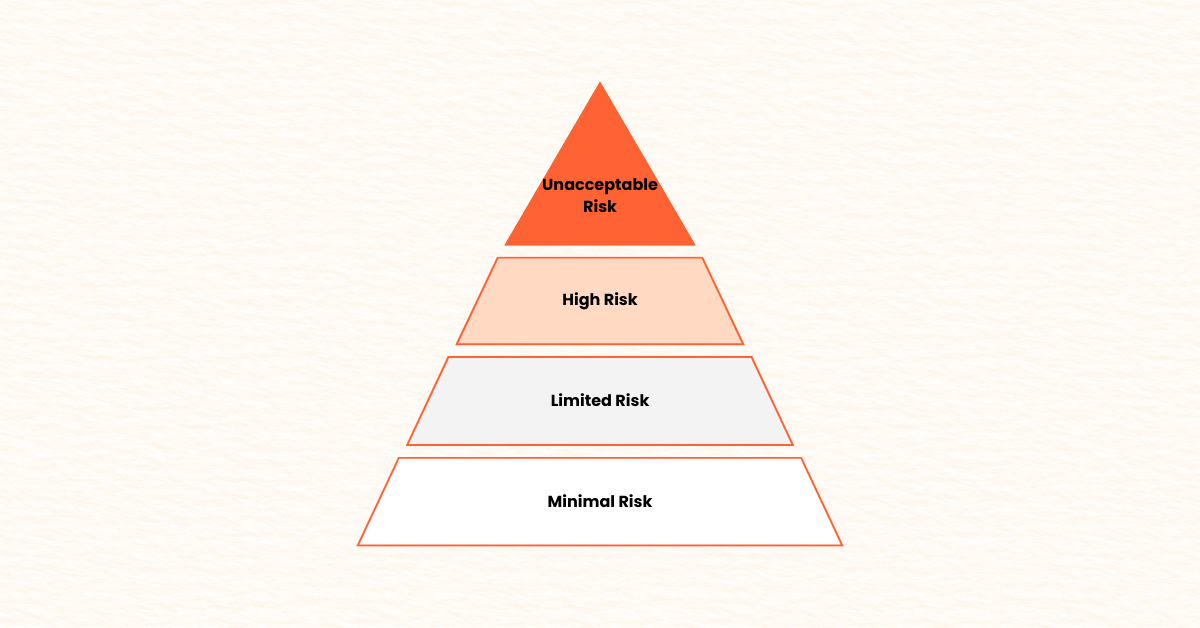

Understanding EU AI Act Risk Categories

- Unacceptable Risk – Prohibited (e.g., social scoring, manipulative AI).

- High Risk – Strict requirements (focus of this article).

- Limited Risk – Transparency obligations (e.g., chatbots).

- Minimal or No Risk – No specific restrictions (most AI systems).

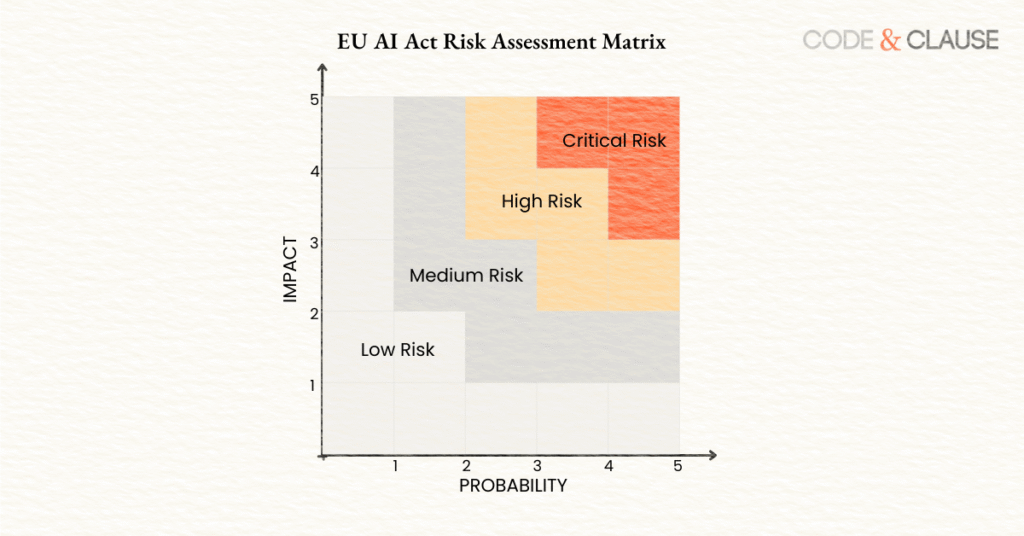

Step-by-Step Risk Assessment Process

The Two Paths to High-Risk Classification

The EU AI Act defines two routes where an AI system becomes high-risk:

- Annex I: Safety components in regulated products.

- Annex III: Standalone AI systems in specific high-risk use cases.

Let’s break them down.

Path 1: Annex I – AI as Safety Components in Regulated Products

If your AI system functions as a safety component in a product already regulated by EU legislation, and that product must undergo third-party conformity assessment, it’s high-risk.

Industries & Examples:

| Industry | Regulated Products | AI Examples |
|---|---|---|
| Healthcare | Medical devices | AI diagnostics, drug discovery |
| Automotive | Vehicles | Autonomous driving, collision avoidance |
| Aviation | Aircraft | Flight management, predictive maintenance |
| Industrial | Machinery | AI-controlled manufacturing equipment |
| Energy | Electrical equipment | Smart grid, power plant optimization |

The Test:

- Is your AI a safety component?

- Is the overall product regulated?

- Does it require third-party conformity assessment?

✅ If the answer to all three is yes = High-Risk (Annex I).
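The three-part test above is a plain conjunction: all three answers must be yes. A minimal Python sketch, assuming you have already answered each question for your system; the `ProductSafetyFacts` type and its field names are hypothetical and chosen only for illustration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductSafetyFacts:
    """Answers to the three Annex I questions (hypothetical field names)."""
    is_safety_component: bool
    product_is_regulated: bool
    needs_third_party_assessment: bool


def is_annex_i_high_risk(facts: ProductSafetyFacts) -> bool:
    # High-risk via Annex I only when every one of the three answers is yes.
    return (
        facts.is_safety_component
        and facts.product_is_regulated
        and facts.needs_third_party_assessment
    )


# Illustrative inputs only, not a legal determination.
collision_avoidance = ProductSafetyFacts(True, True, True)
infotainment_recommender = ProductSafetyFacts(False, True, True)
print(is_annex_i_high_risk(collision_avoidance))
print(is_annex_i_high_risk(infotainment_recommender))
```

The sketch only encodes the logic of the test. Deciding whether a component really is a "safety component" still needs engineering and legal judgment.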

Path 2: Annex III – Standalone High-Risk Use Cases

Even if not embedded in a product, your AI may be high-risk if it falls under Annex III categories:

- Biometrics – Remote ID, categorisation by sensitive attributes, emotion recognition.

- Critical Infrastructure – Safety in digital infrastructure, traffic, energy, water.

- Education & Training – Admissions, exam monitoring, grading.

- Employment & HR – Recruitment, worker monitoring, task allocation.

- Access to Services – Credit scoring, insurance, benefits eligibility.

- Law Enforcement – Risk assessment, predictive policing, evidence analysis.

- Migration & Border Control – Asylum/visa screening, border checks.

- Justice & Democracy – Legal decision support, systems intended to influence elections or voting behaviour.

Key Rule: If a system in one of these categories profiles natural persons, it’s always high-risk, and no exemption applies.

Exemptions & Edge Cases

Not every system in Annex III is automatically high-risk.

- Exemption 1: No material influence → Systems that perform only a narrow procedural or preparatory task, e.g., dashboards or analytics that inform but don’t decide.

- Exemption 2: Category-specific exclusions → e.g., fraud detection in finance.

⚠️ If claiming exemption, you must document your reasoning for regulators.
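One way to keep that reasoning reviewable is to store it as a structured record alongside the system's other documentation. The sketch below is an assumption about what such a record might contain; the `ExemptionRecord` type, its fields, and the example values are all hypothetical, not a format prescribed by the regulation:

```python
import json
from dataclasses import asdict, dataclass
from datetime import date


@dataclass(frozen=True)
class ExemptionRecord:
    """Hypothetical record of why an Annex III exemption is claimed."""
    system_name: str
    annex_iii_category: str
    exemption_ground: str
    rationale: str
    assessed_on: str  # ISO date, e.g. "2025-08-01"
    assessed_by: str

    def validate(self) -> None:
        # Every field must be filled in; an empty rationale is not a rationale.
        for name, value in asdict(self).items():
            if not value.strip():
                raise ValueError(f"{name} must not be empty")
        # Raises ValueError if the date is not valid ISO format.
        date.fromisoformat(self.assessed_on)


def to_json(record: ExemptionRecord) -> str:
    """Validate the record, then serialise it for filing or version control."""
    record.validate()
    return json.dumps(asdict(record), indent=2, sort_keys=True)


record = ExemptionRecord(
    system_name="hiring-funnel-dashboard",  # hypothetical system
    annex_iii_category="Employment & HR",
    exemption_ground="No material influence",
    rationale="Shows aggregate funnel metrics only; no per-candidate output.",
    assessed_on="2025-08-01",
    assessed_by="compliance-team",
)
print(to_json(record))
```

Keeping the record in version control next to the code gives you a dated trail showing when the assessment was made and by whom.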

Your 3-Step Classification Framework

Step 1: Product Safety Test

- Embedded in regulated product?

- Functions as safety component?

- Third-party conformity required?

→ ✅ Yes = High-Risk (Annex I)

Step 2: Use Case Test

- Operates in Annex III category?

- Influences individual decisions?

- Profiles persons?

→ ✅ Yes = High-Risk (Annex III)

Step 3: Exemption Test

- Does it materially influence outcomes?

- Do exemptions apply?

- Have you documented it?

→ ✅ If claiming exemption, prepare evidence.
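Steps 1 to 3 above can be read as a small decision procedure. The following Python sketch is one possible encoding, not an official algorithm. The `SystemFacts` fields and `Outcome` labels are hypothetical names, and the sketch assumes that profiling overrides any exemption for a system within an Annex III category:

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    HIGH_RISK_ANNEX_I = "High-Risk (Annex I)"
    HIGH_RISK_ANNEX_III = "High-Risk (Annex III)"
    EXEMPTION_CLAIMED = "Annex III exemption claimed: document the reasoning"
    NOT_HIGH_RISK = "Not high-risk under these tests"


@dataclass(frozen=True)
class SystemFacts:
    # Step 1 inputs
    embedded_in_regulated_product: bool = False
    is_safety_component: bool = False
    third_party_conformity_required: bool = False
    # Step 2 inputs
    in_annex_iii_category: bool = False
    profiles_persons: bool = False
    # Step 3 inputs (defaults are the conservative answers)
    materially_influences_outcomes: bool = True
    category_exclusion_applies: bool = False


def classify(facts: SystemFacts) -> Outcome:
    # Step 1: product safety test, all three conditions required.
    if (facts.embedded_in_regulated_product
            and facts.is_safety_component
            and facts.third_party_conformity_required):
        return Outcome.HIGH_RISK_ANNEX_I
    # Step 2: use case test.
    if not facts.in_annex_iii_category:
        return Outcome.NOT_HIGH_RISK
    if facts.profiles_persons:
        # Assumption: profiling rules out every exemption.
        return Outcome.HIGH_RISK_ANNEX_III
    # Step 3: exemption test.
    if facts.category_exclusion_applies or not facts.materially_influences_outcomes:
        return Outcome.EXEMPTION_CLAIMED
    return Outcome.HIGH_RISK_ANNEX_III


resume_screener = SystemFacts(in_annex_iii_category=True)
support_chatbot = SystemFacts()
print(classify(resume_screener).value)
print(classify(support_chatbot).value)
```

`NOT_HIGH_RISK` does not distinguish limited from minimal risk; transparency obligations would need a separate check. The defaults lean towards high-risk so that a forgotten answer never produces an exemption by accident.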

Common Misclassification Mistakes

❌ “B2B tools aren’t high-risk.”

Reality: HR tech, business credit scoring, and biometrics often are.

❌ “Our AI is simple, so low risk.”

Reality: Risk depends on use case, not complexity.

❌ “We just provide the model, so not responsible.”

Reality: If you know it’s used in high-risk applications, obligations may apply.

❌ “Humans in the loop = not high-risk.”

Reality: If humans typically follow AI outputs, that’s still material influence.

Practical Classification Examples

Resume Screening Tool → High-Risk (Annex III – Employment)

Customer Service Chatbot → Limited Risk (Transparency only)

Medical Imaging AI → High-Risk (Annex I – Medical device)

What High-Risk Means in Practice

If your system is classified as High-Risk, you must have the following in place:

Before Market Launch

- Risk management system

- Technical documentation

- Testing for accuracy, bias, robustness

- Human oversight mechanisms

- Registration in EU database

Ongoing

- Post-market monitoring

- Incident reporting

- Regular updates & reviews
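Teams often track these obligations as a checklist in their release process. A minimal Python sketch, assuming the item names above are used verbatim as keys; the function name and the launch-gate idea are illustrative, not something the regulation prescribes:

```python
PRE_MARKET = (
    "Risk management system",
    "Technical documentation",
    "Testing for accuracy, bias, robustness",
    "Human oversight mechanisms",
    "Registration in EU database",
)

ONGOING = (
    "Post-market monitoring",
    "Incident reporting",
    "Regular updates & reviews",
)


def outstanding_pre_market(completed: set[str]) -> list[str]:
    """Return the pre-market items not yet completed, in checklist order."""
    unknown = completed - set(PRE_MARKET) - set(ONGOING)
    if unknown:
        # Guard against typos silently marking nothing as done.
        raise ValueError(f"Unknown checklist items: {sorted(unknown)}")
    return [item for item in PRE_MARKET if item not in completed]


done = {"Risk management system", "Technical documentation"}
remaining = outstanding_pre_market(done)
print("Ready for launch" if not remaining else f"Still open: {remaining}")
```

A check like this could run as a release gate, failing the pipeline while any pre-market item is still open.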

Taking Action

Immediate Steps for Teams

- Classify your system → Use our High-Risk Classification Checklist (free Excel tool).

- Document your decision → Regulators will ask.

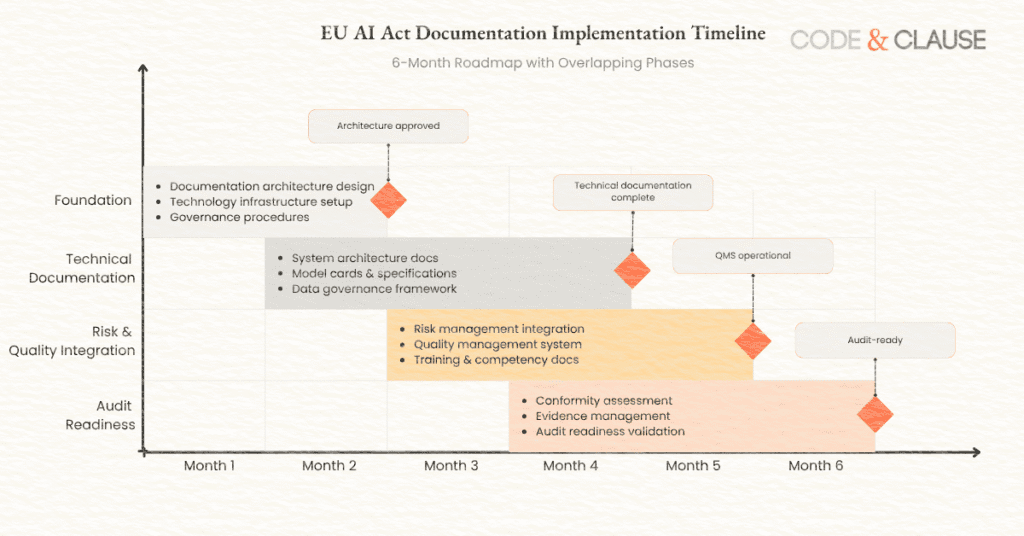

- Plan compliance → Start early; compliance can add 3–6 months to launch.

- Stay updated → Regulations evolve.

Tailored Actions

- AI PMs: Factor classification into product planning.

- Engineers: Build compliance into architecture early.

- Startups: Use our $29 Startup Compliance Mini-Template.

- Enterprises: Access our $97 Executive Compliance Briefing.

Final Thoughts

Getting classification right is the foundation of AI Act compliance. It impacts:

- Resources & costs

- Launch timelines

- Market access

- Competitive positioning

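The EU AI Act has been in force since 1 August 2024. Most obligations, including those for Annex III high-risk systems, apply from 2 August 2026; high-risk obligations for AI embedded in Annex I regulated products apply from 2 August 2027.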

Legal Disclaimer

This article provides general guidance only based on publicly available EU AI Act sources. It is not legal advice. Compliance varies by use case and jurisdiction. Always consult qualified legal counsel and regulatory updates before making decisions. We disclaim liability for actions taken based on this content.

Last updated: August 2025 | Based on EU AI Act (Regulation 2024/1689).

This is Part 1 of our 3-Part EU AI Act Implementation Series

- Article 1: Risk Classification Framework ← You are here

- Article 2: Step-by-Step Risk Assessment Implementation

- Article 3: Technical Documentation That Passes Audits (coming in 2 weeks)

Subscribe to get notified when the full series is published.